Vector DB: A New Database for the AI Era

Understanding vector database principles and practical applications through project experience

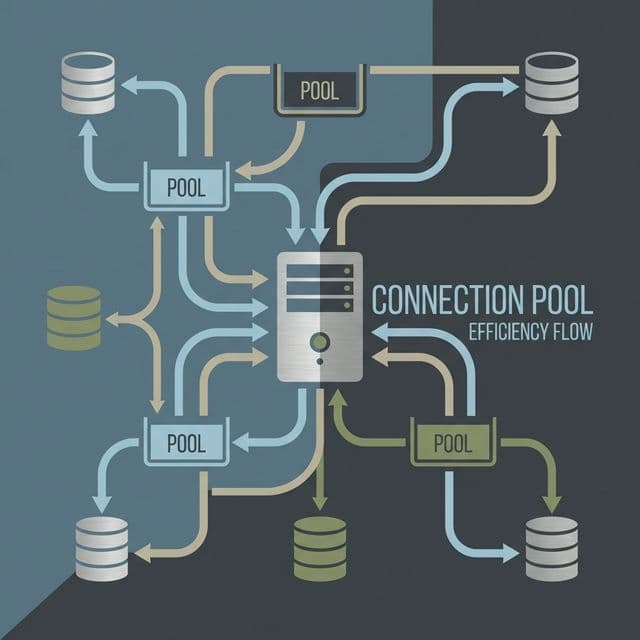

While studying RAG (Retrieval-Augmented Generation) systems, I came across this pattern:

When you have a large volume of documents, what happens if you store their embedding vectors in PostgreSQL and search them with the pgvector extension?

It works—but as the document count grows, search performance becomes a real problem.

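When a user asks a question, it takes several seconds to get an answer. "2.5 seconds just to search the DB?" The more data you add, the worse it gets: without a suitable index, the query is compared against every stored vector, so search time grows linearly with the data. At some point, the service becomes unusable.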

The explanation I found in the documentation: "RDBs (Relational DBs) are specialized for finding exact values. For vector search, you need to use a Vector Database."

So what happens when you migrate to a dedicated vector DB like Pinecone? According to the benchmarks and case studies I've read, search performance improves dramatically compared to the naive approach. Same data, so why such a huge difference?

"You store data and SELECT it. It's the same mechanism. Why learn a new DB?" We have search engines like Elasticsearch. Why do we need another new thing? I couldn't accept it.

Thinking back to high school math, computing dot products against 100,000 vectors should take a massive amount of computation. How is that possible in 0.05 seconds? It was a mystery.

The decisive analogy was a library.

A regular DB works like the library kiosk. When you type "Call Number 800.12-34", the librarian goes to that exact shelf and picks the book.

    SELECT * FROM books WHERE id = 123

A vector DB search is like asking the librarian, "Do you have a novel where the protagonist travels to space, but it's kind of sad?" The librarian needs to know the content (meaning) of the books, not just their numbers.

    Find books similar to [0.1, 0.8, 0.3, ...]

This analogy is what made it click for me. Vector DBs are specialized for "finding the approximate nearest neighbor rather than the exact value."
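To make the two kinds of lookup concrete, here is a minimal sketch in plain Python. The `books` catalog, its 3-dimensional vectors, and the function names are all made up for illustration; real embeddings come from a model and have hundreds of dimensions.

```python
import math

# Hypothetical toy catalog: id -> (title, 3-dim "meaning" vector).
books = {
    123: ("Space Odyssey, Sad Ending", [0.1, 0.8, 0.3]),
    124: ("Cooking for Beginners", [0.9, 0.1, 0.0]),
    125: ("Lonely Astronaut", [0.2, 0.7, 0.4]),
}

def cosine(a, b):
    """Cosine similarity: dot product divided by the product of the lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def find_exact(book_id):
    """RDB-style lookup: the key either matches or it does not."""
    return books.get(book_id)

def find_similar(query, top_k=2):
    """Vector-style lookup: rank every book by similarity to the query."""
    ranked = sorted(books.items(), key=lambda kv: cosine(query, kv[1][1]), reverse=True)
    return [title for _, (title, _) in ranked[:top_k]]

print(find_exact(123)[0])               # exact hit on the id
print(find_exact(999))                  # None: no such id, no partial answer
print(find_similar([0.1, 0.8, 0.3]))    # the two space-themed titles rank first
```

The exact lookup has nothing to say about an unknown id, while the similarity lookup always returns the closest candidates it has.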

| Feature | Regular DB (MySQL, PostgreSQL) | Vector DB (Pinecone, Chroma) |
|---|---|---|
| Search Method | Exact Keyword/ID Match | Semantic Similarity (Cosine Similarity) |
| Data Type | Integer, String, Date | 1536-dim Vector (Float Array) |
| Index | B-Tree (Sort-based) | HNSW, IVF (Graph/Cluster-based) |
| Query Example | `WHERE content LIKE '%AI%'` | `vector_search(embedding, top_k=5)` |
| Main Use | Payments, User Mgmt, Boards | Chatbots, Recommender Sys, Image Search |

RDB handles "Data that must not be wrong" (Money, Inventory). Vector DB handles "Data where similar is good" (Recommendations, Search).

The secret behind a vector DB being dramatically faster than the naive approach is an indexing algorithm called HNSW (Hierarchical Navigable Small World). The name is long and scary, but the principle is simple: highways and local roads.

Suppose we have 1 million data points. The brute-force method (Flat Search) compares my query vector with all 1 million vectors one by one. Naturally, it's slow.
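A rough sketch of what flat search does, in plain Python. The data is random and the sizes (10,000 vectors, 8 dimensions) are arbitrary choices to keep the example quick; `flat_search` is a name invented here, not a library function.

```python
import math
import random

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def flat_search(query, vectors, top_k=3):
    """Score the query against every stored vector, then keep the best top_k.

    Returns the (score, index) pairs and the number of comparisons made.
    """
    scored = [(cosine(query, v), i) for i, v in enumerate(vectors)]
    scored.sort(reverse=True)
    return scored[:top_k], len(vectors)

random.seed(0)  # fixed seed so the run is repeatable
vectors = [[random.random() for _ in range(8)] for _ in range(10_000)]
query = vectors[42]  # search for a vector we know is stored

top, comparisons = flat_search(query, vectors)
print(top[0][1], comparisons)  # the stored copy ranks first; every vector was compared
```

The number of comparisons always equals the number of stored vectors, which is why the cost grows in step with the data.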

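HNSW divides data into layers: sparse upper layers whose long links act as highways, and a dense bottom layer that contains every vector and acts as local roads.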

When searching, you take the highway to jump near the destination instantly, then drop to local roads for a precise search. Thanks to this, you only need to compare a few hundred vectors to find the answer among millions.
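The highway idea can be illustrated with a toy two-layer search. This is a deliberately simplified sketch, not real HNSW: the points sit on a 1-D line, the links are built by hand (every 32nd point goes on the "highway" layer), and all names are invented for the example. Real HNSW assigns layers randomly, links near neighbors in high-dimensional space, and returns approximate results.

```python
def greedy_walk(start, query, points, links, counter):
    """Hop to the neighbor closest to the query until no neighbor is closer."""
    current = start
    while True:
        best = current
        for neighbor in links[current]:
            counter[0] += 1  # one distance computation
            if abs(points[neighbor] - query) < abs(points[best] - query):
                best = neighbor
        if best == current:
            return current
        current = best

def build_links(node_ids):
    """Link each node to the previous and next node within its layer."""
    links = {}
    for pos, node in enumerate(node_ids):
        links[node] = [node_ids[p] for p in (pos - 1, pos + 1) if 0 <= p < len(node_ids)]
    return links

points = [float(i) for i in range(1000)]   # toy data: 1,000 points on a line
bottom_ids = list(range(len(points)))      # local roads: every point
top_ids = bottom_ids[::32]                 # highways: every 32nd point
top_links = build_links(top_ids)
bottom_links = build_links(bottom_ids)

def layered_search(query):
    """Walk the sparse layer first, then refine on the dense layer."""
    counter = [0]
    near = greedy_walk(top_ids[0], query, points, top_links, counter)
    nearest = greedy_walk(near, query, points, bottom_links, counter)
    return nearest, counter[0]

nearest, comparisons = layered_search(777.3)
print(nearest, comparisons, "of", len(points))
```

The search reaches the closest point after far fewer distance computations than there are points, because the sparse layer covers most of the distance in large jumps.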

Pinecone is a managed service: no installation or server management required, just an API key.

    from pinecone import Pinecone, ServerlessSpec

    # 1. Initialize the client
    pc = Pinecone(api_key="your-api-key")

    # 2. Create the index (run once)
    pc.create_index(
        name="my-index",
        dimension=1536,   # OpenAI embedding dimension
        metric="cosine",  # Similarity metric
        # Recent SDK versions require a spec; the cloud and region here are assumptions
        spec=ServerlessSpec(cloud="aws", region="us-east-1"),
    )

    # 3. Upsert data as (id, vector, metadata) tuples
    # "..." stands for the remaining values of each 1536-dim vector
    index = pc.Index("my-index")
    index.upsert(vectors=[
        ("id1", [0.1, 0.2, ...], {"text": "Delicious Apple"}),
        ("id2", [0.3, 0.4, ...], {"text": "Red Fruit"}),
    ])

    # 4. Query
    results = index.query(
        vector=[0.15, 0.25, ...],  # Vector for 'Apple'
        top_k=5,
        include_metadata=True,
    )

    for match in results.matches:
        print(f"Score: {match.score}, Text: {match.metadata['text']}")

Chroma runs locally, which makes it great for testing or if you're worried about costs.

    import chromadb

    client = chromadb.Client()  # Runs in memory
    collection = client.create_collection("my_collection")

    # Automatically embeds text (Convenient!)
    collection.add(
        documents=["This is an apple", "This is a banana"],
        ids=["id1", "id2"],
    )

    # Search by text directly
    results = collection.query(
        query_texts=["Find something similar to apple"],
        n_results=1,
    )

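How do we measure "similarity"? There are three main ways.

- Cosine similarity: compares the direction of two vectors. 1: same direction, -1: opposite direction.
- Euclidean distance: the straight-line distance between two vectors. Smaller means more similar.
- Dot product: combines direction and magnitude. Larger means more similar.

Cosine similarity is the most common choice for text search.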
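The measures commonly offered by vector databases (cosine similarity, Euclidean distance, dot product) each fit in a few lines of plain Python. The 2-D vectors below are chosen by hand so the results are easy to check; the function names are just for this sketch.

```python
import math

def dot(a, b):
    """Dot product: sensitive to both direction and magnitude."""
    return sum(x * y for x, y in zip(a, b))

def cosine_similarity(a, b):
    """Direction only: 1 means same direction, -1 means opposite."""
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))

def euclidean_distance(a, b):
    """Straight-line distance: smaller means closer."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

a = [1.0, 0.0]
same_direction = [3.0, 0.0]   # longer, but points the same way
opposite = [-2.0, 0.0]        # points the other way

print(cosine_similarity(a, same_direction))   # 1.0
print(cosine_similarity(a, opposite))         # -1.0
print(euclidean_distance(a, same_direction))  # 2.0
print(dot(a, same_direction))                 # 3.0
```

Note that cosine similarity ignores the difference in length between `a` and `same_direction`, while Euclidean distance and dot product do not.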

Vector DBs are versatile.

Any data that can be turned into numbers (Vectors) can be searched instantly.

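You don't always need a dedicated vector DB: a database extension (pgvector) or a local library (FAISS) may be enough. Don't add more systems to manage than you need.

OpenAI's text-embedding-3-small produces 1536-dimensional vectors, while small open-source models (such as all-MiniLM-L6-v2) produce 384 dimensions. Smaller dimensions are faster and cheaper.

A vector DB is a database specialized for storing high-dimensional vectors and searching them by semantic similarity at high speed, using an index such as HNSW.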

It is the core storage that allows AI to 'understand' text, images, and audio, and is the heart of RAG (Retrieval-Augmented Generation) systems.

We have moved beyond the era of SQL (SELECT *) to the era of Vectors (Find Similar).