CUDA vs Tensor Cores: NVIDIA GPU Secrets

The gold mine of the AI era: NVIDIA GPUs. Why do we run AI on gaming graphics cards? Learn the difference between the workers (CUDA cores) and the matrix geniuses (Tensor Cores).

The gold mine of AI era, NVIDIA GPUs. Why do we run AI on gaming graphics cards? Learn the difference between workers (CUDA) and matrix geniuses (Tensor Cores).

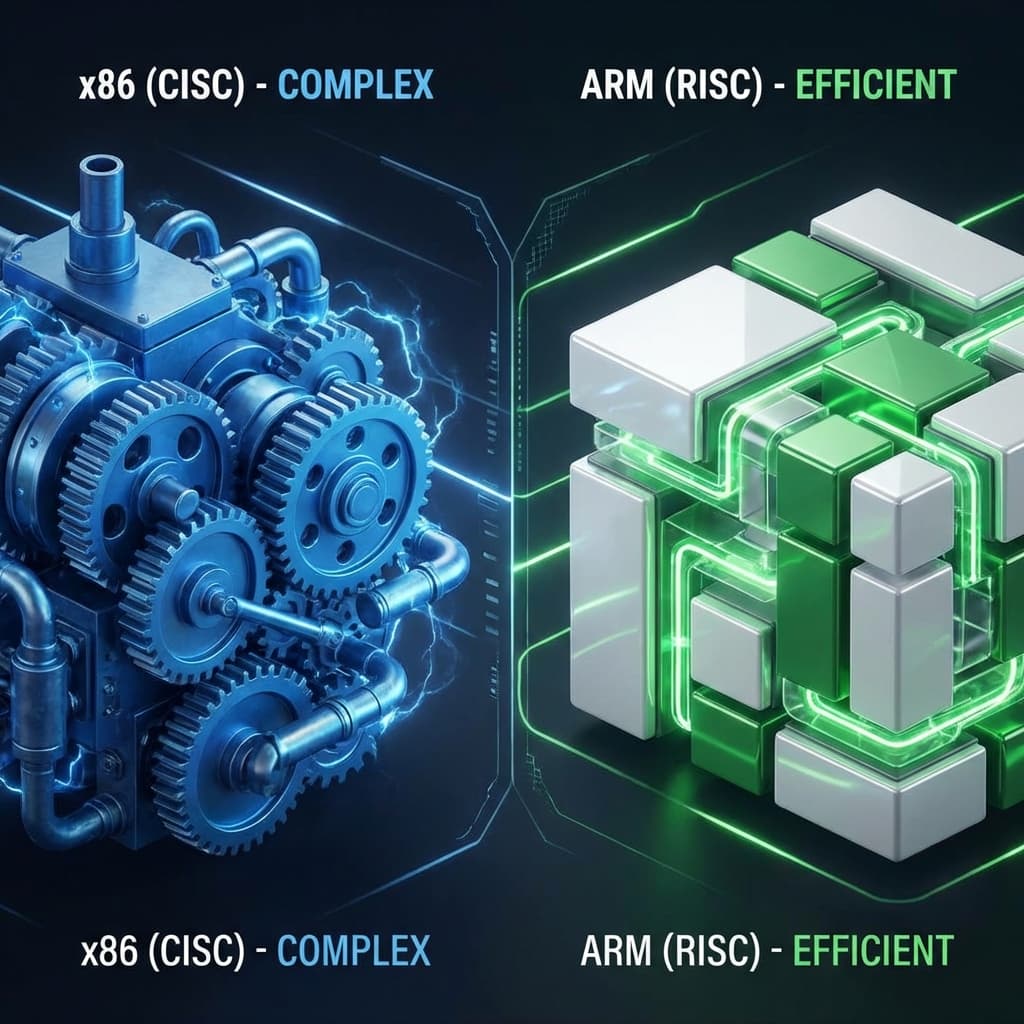

Why does MacBook battery last long? Should I use AWS Graviton? A deep dive into the philosophy of CISC (Complex) vs RISC (Simple) architectures.

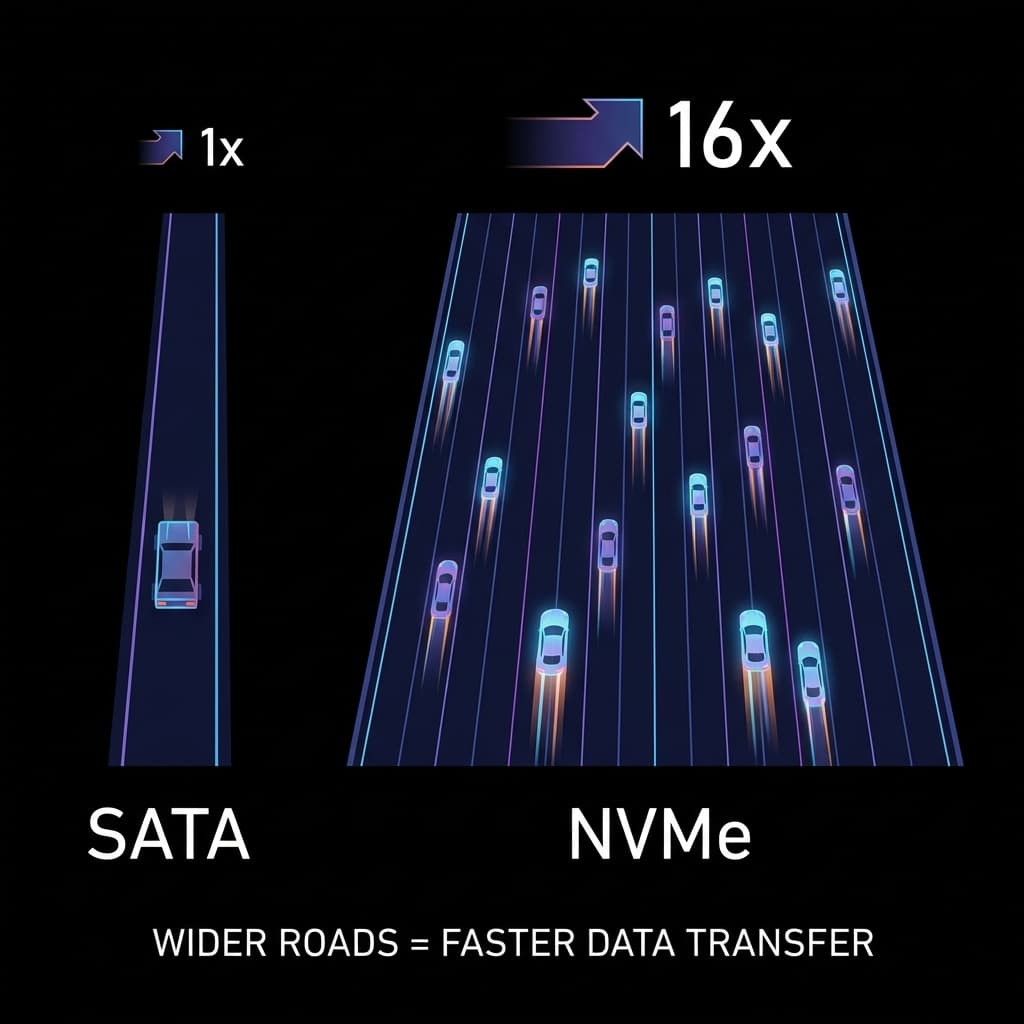

Bought a fast SSD but it's still slow? One-lane country road (SATA) vs 16-lane highway (NVMe).

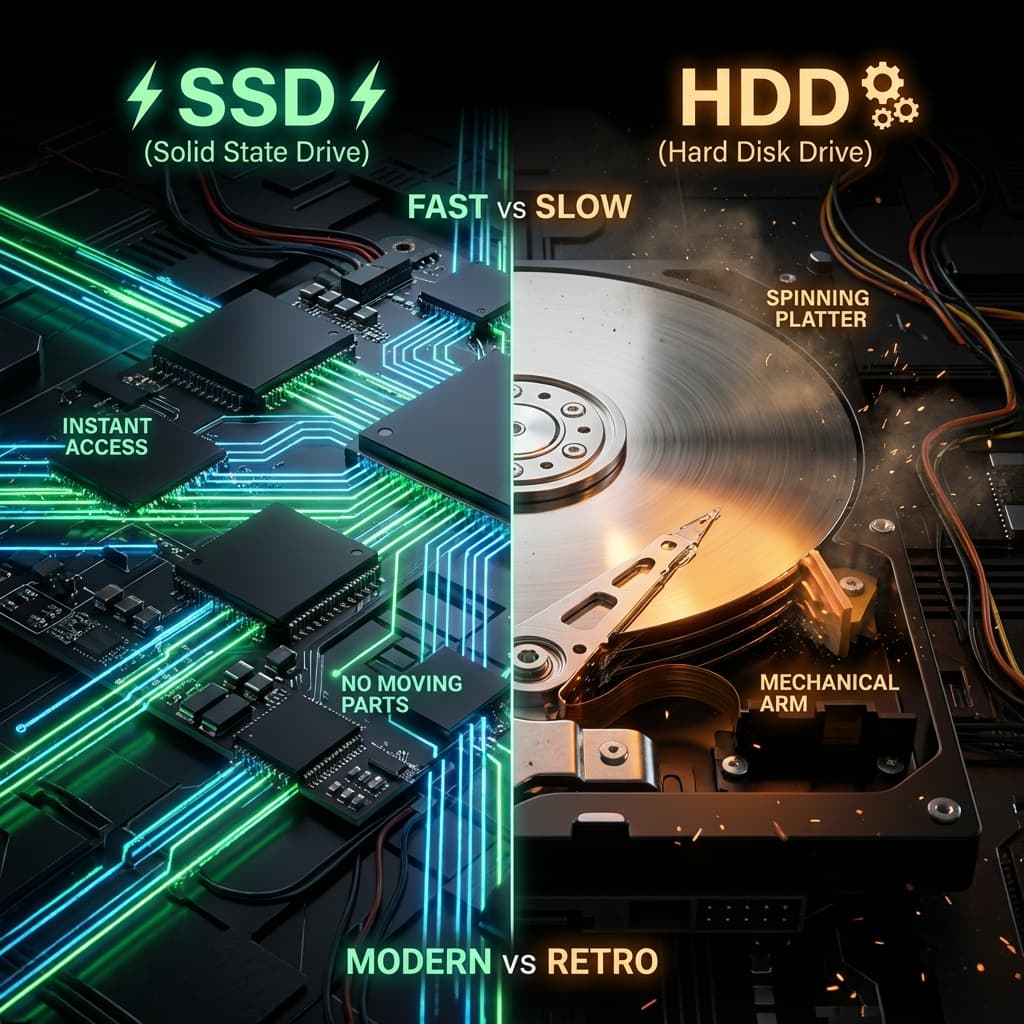

LP records vs USB drives. Why physically spinning disks are inherently slow, and how SSDs made servers 100x faster.

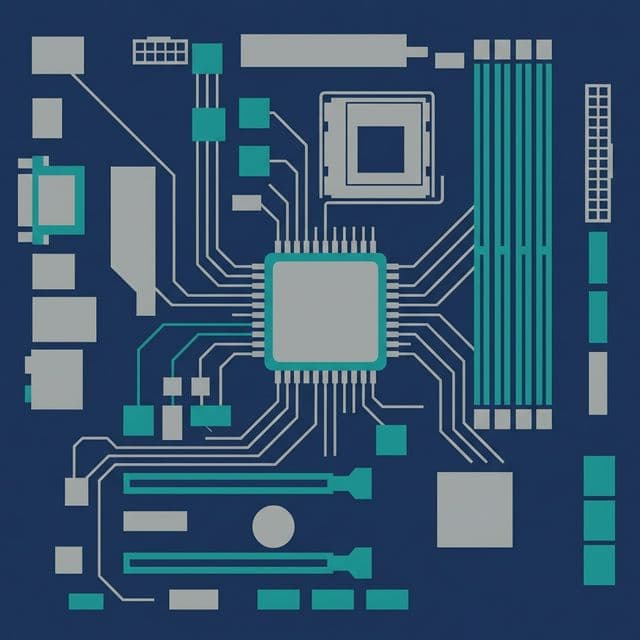

I thought the motherboard was just a board to plug things into. Then I learned what a chipset does.

When the ChatGPT craze inspired me to run Llama-2 locally, I had a rude awakening.

M1 MacBook (CPU only):

>>> "Tell me about quantum physics"

[10s later] "Quantum"

[10s later] "physics"

Then I borrowed an old RTX 3060 (CUDA + Tensor Cores):

>>> "Tell me about quantum physics"

[instantly] "Quantum physics is the study of very small particles..."

[full paragraph in 1 second]

"Why? Why is it only this fast on the GPU?"

That's when I became fascinated by GPU architecture.

My questions:

The answers revealed NVIDIA's strategic hardware — components deliberately planted for the AI era, years before the boom.

Most importantly: "Why not AMD GPUs?"

A senior engineer explained it like this:

CPU cores (8): 8 PhDs

- Solve complex research papers (single-thread work) blazingly fast

- But there are only 8, so simple repetitive tasks are slow

GPU cores (5,000): 5,000 elementary school students

- Can't solve complex papers

- But 5,000 simultaneous additions are faster than any PhD squad

AI training is essentially:

1 + 1 = ?

2 + 3 = ?

4 + 5 = ?

... (1 billion times)

Obviously the latter wins.

After hearing this, it finally clicked why GPUs are perfect for AI. CPUs are optimized for completing one complex task quickly. GPUs are optimized for processing thousands of simple tasks simultaneously. AI training falls squarely into the latter category.

CUDA (Compute Unified Device Architecture) is NVIDIA's parallel computing platform and the name for the worker cores inside their GPUs.

| GPU Model | CUDA Cores | Price | Use |
|---|---|---|---|
| RTX 3060 | 3,584 | $329 | Gaming + light AI |
| RTX 3090 | 10,496 | $1,499 | Gaming + deep learning |
| A100 (datacenter) | 6,912 | $10,000+ | Large-scale AI |

You might wonder why the A100 has fewer CUDA cores than the RTX 3090. The answer: A100's Tensor core performance, memory bandwidth, and NVLink interconnect are far superior for AI workloads. Raw CUDA core count isn't the whole story.

AMD also makes GPUs (Radeon). But AMD GPUs are rarely used for AI.

Reason: CUDA. NVIDIA has been distributing CUDA development tools for free since 2006.

```cuda
// CUDA code example
__global__ void addKernel(int *c, const int *a, const int *b) {
    int i = threadIdx.x;
    c[i] = a[i] + b[i];  // Each thread calculates simultaneously
}
```

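The key here is the __global__ qualifier: it marks the function as a kernel that runs on the GPU but is launched from the CPU. threadIdx.x gives each thread its own index, so thousands of threads can each process their own piece of data concurrently. (This simple version uses only threadIdx.x, so it works within a single block of up to 1,024 threads.)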
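To make the thread model concrete without a GPU, here is a plain-Python sketch that imitates launching addKernel with one block of N threads. It is an illustrative simulation, not CUDA: the loop runs the "threads" one after another, whereas a GPU runs them in parallel. The names `add_kernel` and `launch` are hypothetical helpers.

```python
def add_kernel(thread_idx: int, c: list, a: list, b: list) -> None:
    """Body of addKernel: each 'thread' handles exactly one element."""
    i = thread_idx  # plays the role of threadIdx.x
    c[i] = a[i] + b[i]


def launch(kernel, num_threads: int, *args) -> None:
    """Sequential stand-in for addKernel<<<1, num_threads>>>(...).
    On a real GPU these calls would execute concurrently."""
    for t in range(num_threads):
        kernel(t, *args)


a = [1, 2, 3, 4, 5]
b = [10, 20, 30, 40, 50]
c = [0] * len(a)
launch(add_kernel, len(a), c, a, b)
print(c)  # [11, 22, 33, 44, 55]
```

Because each index is written by exactly one thread, no two threads ever touch the same element. That independence is what lets the GPU run all of them at once.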

All major AI frameworks (TensorFlow, PyTorch) were built on CUDA. AMD's rival platform ROCm arrived in 2016, and its coverage is still limited.

Result: CUDA became the de facto standard for GPU-accelerated AI. This is NVIDIA's most powerful moat: even if AMD matches hardware performance, the software ecosystem is locked into CUDA, making it extremely difficult to switch.

In 2017, NVIDIA added Tensor Cores to the Volta architecture.

AI's fundamental operation is Matrix Multiplication.

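```python
import torch

weights = torch.randn(4, 3)  # a layer's learned parameters (4 outputs, 3 inputs)
inputs = torch.randn(3, 1)   # one input vector
output = weights @ inputs    # Deep learning's core operation: matrix multiplication
```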

A single image recognition pass requires:

CUDA cores can do this, but they work stitch by stitch: each core performs one floating-point fused multiply-add (FMA) per clock cycle.
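To see how quickly those one-at-a-time multiply-adds add up, here is a plain-Python matrix multiply that counts them. It is a CPU sketch for counting only; `matmul_with_fma_count` is a hypothetical helper, and a real GPU would spread these FMAs across thousands of CUDA cores.

```python
def matmul_with_fma_count(A, B):
    """Naive matrix multiply (A is n x k, B is k x m).
    Every innermost step is one multiply-add: the unit of work a CUDA core does per clock."""
    n, k, m = len(A), len(B), len(B[0])
    C = [[0] * m for _ in range(n)]
    fmas = 0
    for i in range(n):
        for j in range(m):
            for p in range(k):
                C[i][j] += A[i][p] * B[p][j]  # one FMA: multiply, then accumulate
                fmas += 1
    return C, fmas


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
C, fmas = matmul_with_fma_count(A, B)
print(C, fmas)  # [[19, 22], [43, 50]] 8
```

The count is always n·m·k, so a single product of two 4096×4096 matrices already needs about 68.7 billion FMAs.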

Tensor cores calculate 4×4 matrix chunks in a single operation.

CUDA cores:

10,000 simultaneous 1×1 calculations

Tensor cores:

4×4 blocks in one shot (16 output elements, each a 4-term dot product)

→ on Volta, 64 multiply-adds per Tensor core per clock, versus 1 per CUDA core

This is possible because Tensor cores natively support MMA (Matrix Multiply-Accumulate) operations at the hardware level: D = A × B + C, where A, B, C, and D are matrices, all processed in a single instruction.
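The D = A × B + C idea can be sketched in plain Python by treating a 4×4 multiply-accumulate as one indivisible step and building a larger product out of those tiles. This is a simplified model of Volta-style 4×4 Tensor core operations, not how CUDA actually exposes them (real code goes through libraries such as cuBLAS and cuDNN, or warp-level WMMA APIs). `mma_4x4` and `tiled_matmul` are hypothetical names, and the sketch assumes square matrices whose size is a multiple of 4.

```python
TILE = 4  # Volta-style Tensor core tile size


def mma_4x4(A, B, C):
    """One simulated Tensor core operation: D = A x B + C on 4x4 tiles.
    In hardware this is a single operation covering 64 multiply-adds."""
    return [[C[i][j] + sum(A[i][p] * B[p][j] for p in range(TILE))
             for j in range(TILE)]
            for i in range(TILE)]


def tiled_matmul(A, B):
    """Multiply two n x n matrices (n divisible by 4) using only 4x4 MMA steps."""
    n = len(A)
    D = [[0] * n for _ in range(n)]
    mma_ops = 0
    for bi in range(0, n, TILE):          # tile row of the output
        for bj in range(0, n, TILE):      # tile column of the output
            acc = [[0] * TILE for _ in range(TILE)]
            for bp in range(0, n, TILE):  # walk along the shared dimension
                a_tile = [row[bp:bp + TILE] for row in A[bi:bi + TILE]]
                b_tile = [row[bj:bj + TILE] for row in B[bp:bp + TILE]]
                acc = mma_4x4(a_tile, b_tile, acc)  # the "+ C" accumulates partial sums
                mma_ops += 1
            for i in range(TILE):
                D[bi + i][bj:bj + TILE] = acc[i]
    return D, mma_ops


n = 8
A = [[1 if i == j else 0 for j in range(n)] for i in range(n)]  # identity matrix
B = [[i * n + j for j in range(n)] for i in range(n)]
D, mma_ops = tiled_matmul(A, B)
print(D == B, mma_ops)  # True 8
```

For the 8×8 example, 8 tile operations stand in for the 512 scalar multiply-adds a naive loop would perform (8 × 64 = 512).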

Tensor cores have evolved through each GPU generation:

This hardware evolution is what made monsters like GPT-4 possible.

When I ran Stable Diffusion (AI image generation):

RTX 2080 (no Tensor cores)

512×512 image: 22 seconds

512×512 image: 8 seconds (2.75x faster)

Similar CUDA core count (~3,500), but the presence of Tensor cores made a roughly 3x difference. For AI workloads, Tensor cores are game-changers.

```python
pi = 3.141592653589793  # Very precise
```

Needed for science and physics simulations: anywhere high numeric precision matters.

```python
pi = 3.14  # Less precise, but sufficient
```

For AI, this level of precision is more than enough.

"Cat probability: 99.123456%"

vs

"Cat probability: 99.12%"

→ Both results: "Cat"
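You can see this rounding without a GPU: Python's standard `struct` module can store a number in IEEE 754 single precision (`'f'`, FP32) or half precision (`'e'`, FP16) and read it back. The sketch assumes Python 3.6+ (when the `'e'` format was added). The class probabilities are made-up numbers for illustration, and Python's own `float` is FP64.

```python
import math
import struct


def round_trip(value: float, fmt: str) -> float:
    """Pack a Python float (FP64) into a narrower IEEE 754 format and unpack it.
    fmt 'f' = FP32 (single precision), 'e' = FP16 (half precision)."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


print("FP64:", math.pi)                   # FP64: 3.141592653589793
print("FP32:", round_trip(math.pi, "f"))  # FP32: 3.1415927410125732
print("FP16:", round_trip(math.pi, "e"))  # FP16: 3.140625

# Hypothetical classifier output (illustrative numbers, not a real model)
probs = {"cat": 0.99123456, "dog": 0.00876544}
probs_fp16 = {label: round_trip(p, "e") for label, p in probs.items()}
print(probs_fp16["cat"])                    # 0.9912109375
print(max(probs_fp16, key=probs_fp16.get))  # cat
```

FP16 keeps only about three significant decimal digits, yet the winning class is unchanged.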

The key technique is Mixed Precision Training: forward and backward passes use FP16 for speed, while weight updates remain in FP32 for accuracy. You get the best of both worlds — speed and precision.
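Why the weight updates stay in FP32 shows up as soon as an update is tiny. The sketch below (standard-library Python, using `struct` to emulate FP16 and FP32 rounding) adds a step of 0.0001 to a weight of 1.0 a thousand times. It is a simplified model that ignores gradients, loss scaling, and optimizers; the step size is an arbitrary choice for illustration.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def to_fp32(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]


update = 1e-4          # a small step, like learning rate x gradient
w_fp16 = to_fp16(1.0)  # weight stored only in FP16
w_fp32 = to_fp32(1.0)  # FP32 "master" copy of the same weight

for _ in range(1000):
    w_fp16 = to_fp16(w_fp16 + update)  # 1.0001 rounds back to 1.0 in FP16 every time
    w_fp32 = to_fp32(w_fp32 + update)  # FP32 keeps each small step

print(w_fp16)            # 1.0 (all 1,000 updates were lost)
print(round(w_fp32, 3))  # 1.1
```

Near 1.0, neighboring FP16 values are about 0.001 apart, so any update smaller than roughly half that gap simply vanishes. Keeping the master weights in FP32 avoids this while the heavy matrix math still runs in FP16.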

| Precision | CUDA Cores | Tensor Cores |
|---|---|---|
| FP32 | 10 TFLOPS | - |
| FP16 | 20 TFLOPS | 80 TFLOPS |
| FP8 (latest) | - | 160 TFLOPS |

For the RTX 4090:

What does TFLOPS mean? One TFLOPS = one trillion floating-point operations per second. The RTX 4090's 330 TFLOPS of FP16 Tensor performance means up to 330 trillion floating-point operations on matrix math every second. This is the secret behind AI's rapid training speeds.
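A quick back-of-the-envelope calculation shows what that number buys. Multiplying an M×K matrix by a K×N matrix takes M·N·K multiply-adds, conventionally counted as 2·M·N·K floating-point operations. The sketch assumes the GPU runs at its full advertised peak, which real workloads rarely reach, so treat the results as best-case times; `matmul_flops` and `matmul_seconds` are hypothetical helpers.

```python
def matmul_flops(m: int, n: int, k: int) -> int:
    """FLOPs for an (m x k) @ (k x n) product: one multiply + one add per term."""
    return 2 * m * n * k


def matmul_seconds(m: int, n: int, k: int, tflops: float) -> float:
    """Ideal time at a given peak throughput (1 TFLOPS = 1e12 FLOPs per second)."""
    return matmul_flops(m, n, k) / (tflops * 1e12)


flops = matmul_flops(4096, 4096, 4096)
print(f"{flops:,} FLOPs")  # 137,438,953,472 FLOPs
print(f"{matmul_seconds(4096, 4096, 4096, 330) * 1e3:.2f} ms at 330 TFLOPS")  # 0.42 ms at 330 TFLOPS
print(f"{matmul_seconds(4096, 4096, 4096, 10) * 1e3:.2f} ms at 10 TFLOPS")    # 13.74 ms at 10 TFLOPS
```

The 10 TFLOPS line uses the FP32 CUDA-core figure from the table above for comparison.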

```shell
# Check CUDA Toolkit installation (the PyTorch wheels below bundle their own CUDA runtime)
nvcc --version

# Install PyTorch with CUDA support
pip install torch torchvision --index-url https://download.pytorch.org/whl/cu118
```
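After installing, it is worth confirming that PyTorch actually sees the GPU and whether the card is new enough to have Tensor cores. The script uses real PyTorch calls (`torch.cuda.is_available`, `torch.cuda.get_device_name`, `torch.cuda.get_device_capability`, `torch.version.cuda`). The `likely_has_tensor_cores` helper is a hypothetical heuristic based on Tensor cores first appearing with Volta (compute capability 7.0); Turing-based GTX 16-series cards are a known exception, since they report 7.5 but have no Tensor cores.

```python
def likely_has_tensor_cores(major: int, minor: int) -> bool:
    """Hypothetical heuristic: Tensor cores arrived with Volta (compute capability 7.0).
    Known exception: Turing GTX 16-series cards report 7.5 but lack Tensor cores."""
    return (major, minor) >= (7, 0)


def main() -> None:
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed")
        return
    if not torch.cuda.is_available():
        print("PyTorch cannot see a CUDA GPU (CPU-only build or driver issue?)")
        return
    major, minor = torch.cuda.get_device_capability(0)
    print(f"GPU: {torch.cuda.get_device_name(0)} (compute capability {major}.{minor})")
    print(f"PyTorch built with CUDA {torch.version.cuda}")
    print(f"Tensor cores (heuristic): {likely_has_tensor_cores(major, minor)}")


if __name__ == "__main__":
    main()
```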

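```python
import torch
import torchvision

# Any nn.Module works here; an untrained resnet18 stands in for your own model
model = torchvision.models.resnet18().cuda().eval()
x = torch.randn(1, 3, 512, 512, device="cuda")

with torch.inference_mode():
    # Inference with automatic mixed precision: autocast picks FP16 or FP32 per operation
    with torch.autocast(device_type="cuda", dtype=torch.float16):
        output = model(x)

    # FP16 mode (activates Tensor cores): .half() = FP16
    model_fp16 = model.half()  # note: .half() converts the model in place
    output_fp16 = model_fp16(x.half())
```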

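Calling .half() converts model weights from FP32 to FP16, which lets PyTorch's GPU libraries (cuBLAS, cuDNN) route eligible operations such as matrix multiplications and convolutions to Tensor cores. Used on its own, autocast() leaves the weights in FP32 and decides per operation which ones run in FP16 and which stay in FP32.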

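```python
import time
import torch

# model_fp32 / input_fp32: your model and input in FP32
# model_fp16 / input_fp16: separate FP16 copies (e.g. copy.deepcopy(model_fp32).half())
# Ampere and newer GPUs may run FP32 math as TF32 on Tensor cores; disable that
# so the FP32 run really uses CUDA cores only
torch.backends.cuda.matmul.allow_tf32 = False
torch.backends.cudnn.allow_tf32 = False

with torch.inference_mode():
    # FP32 (CUDA cores only)
    torch.cuda.synchronize()  # GPU work is asynchronous: finish pending kernels first
    start = time.time()
    for _ in range(100):
        output = model_fp32(input_fp32)
    torch.cuda.synchronize()  # wait until all 100 passes have actually run
    print(f"FP32: {time.time() - start:.2f}s")
    # Output: FP32: 12.34s

    # FP16 (Tensor cores engaged)
    torch.cuda.synchronize()
    start = time.time()
    for _ in range(100):
        output = model_fp16(input_fp16)
    torch.cuda.synchronize()
    print(f"FP16: {time.time() - start:.2f}s")
    # Output: FP16: 4.21s (2.9x faster)
```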

One line of code change (.half()) yields a nearly 3x speedup. That's the power of Tensor cores.

AMD has developed similar technology (Matrix Cores). But here's the reality:

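```python
# NVIDIA
import torch

torch.cuda.is_available()  # True (works out of the box)

# AMD on Windows: DirectML backend
import torch_directml  # Separate installation: pip install torch-directml

device = torch_directml.device()
# Many unsupported operations, frequent errors

# (On Linux, ROCm builds of PyTorch reuse the torch.cuda API,
# but require a ROCm-specific wheel and a supported AMD GPU)
```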

When you hit a problem with CUDA, Stack Overflow has the answer in 5 minutes. With ROCm, you might be filing a GitHub issue and waiting days for a response.

OpenAI, Google, and Meta all use NVIDIA A100/H100 GPUs. Cloud providers (AWS, GCP, Azure) predominantly offer NVIDIA GPU instances.

AMD's MI250/MI300 datacenter GPUs are emerging, and ROCm is improving rapidly. But catching up to 15 years of accumulated CUDA ecosystem in a short time remains an enormous challenge.

Why NVIDIA dominates the AI era:

AMD can't catch up easily because it's not just about hardware specs. The developer ecosystem — libraries, tutorials, community knowledge, enterprise integrations — must follow. NVIDIA has a 15-year head start on all of that.

When I first ran AI on a GPU, the experience was unforgettable.

"This is why everyone wants NVIDIA."