Fine-tuning vs Prompt Engineering

Understanding differences and selection criteria between fine-tuning and prompt engineering for LLM customization


I wanted to customize an LLM for our service, and there were two ways to do it: fine-tuning and prompt engineering. Which should I choose?

Fine-tuning is expensive but performs well; prompt engineering is simple but has limitations. I tried both and came to understand the difference.

The most confusing part was deciding when to fine-tune and when to just use prompts.

Another confusion was "isn't fine-tuning always better?" If you spend the cost and time to train the model, shouldn't it naturally be better?

And it was also unclear when prompt engineering is sufficient.

The decisive analogy was "chef training."

Prompt engineering = giving the chef a recipe; fine-tuning = training the chef. This analogy helped me understand: prompt engineering is fast and flexible, but fine-tuning provides specialized performance for specific tasks.

Prompt engineering: without changing the model, design the input (prompt) well to get the desired results.

```python
# `llm` is a placeholder for any text-completion call

# Basic prompt
prompt = "Analyze this review: This product is great!"
response = llm(prompt)

# Improved prompt (few-shot)
prompt = """
Analyze sentiment of these reviews:

Review: "Best product ever"
Sentiment: positive

Review: "Not good"
Sentiment: negative

Review: "This product is great!"
Sentiment:
"""
response = llm(prompt)  # "positive"
```
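In practice, few-shot prompts are usually assembled from a small pool of labeled examples rather than typed by hand. A minimal sketch, assuming a hypothetical `build_few_shot_prompt` helper and the same review/sentiment layout as above:

```python
def build_few_shot_prompt(examples, query, instruction="Analyze sentiment of these reviews:"):
    """Assemble a few-shot prompt from (review, sentiment) pairs plus the new review."""
    lines = [instruction, ""]
    for review, sentiment in examples:
        lines.append(f'Review: "{review}"')
        lines.append(f"Sentiment: {sentiment}")
        lines.append("")
    # The new review ends with an open "Sentiment:" slot for the model to complete
    lines.append(f'Review: "{query}"')
    lines.append("Sentiment:")
    return "\n".join(lines)


examples = [("Best product ever", "positive"), ("Not good", "negative")]
prompt = build_few_shot_prompt(examples, "This product is great!")
print(prompt)
```

Keeping the examples in a list makes it easy to swap, add, or A/B test them without editing the prompt text itself.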

```python
from openai import OpenAI

client = OpenAI()

# Define the role with a system prompt
response = client.chat.completions.create(
    model="gpt-4",
    messages=[
        {"role": "system", "content": "You are a friendly customer service chatbot."},
        {"role": "user", "content": "I want a refund"},
    ],
)

print(response.choices[0].message.content)
```

Fine-tuning: retrain the model itself for a specific task by updating the existing model's weights.

```python
# `finetune` and `base_model` are placeholders for your training setup

# Prepare training data
training_data = [
    {"prompt": "Review: Great", "completion": "positive"},
    {"prompt": "Review: Bad", "completion": "negative"},
    # ... hundreds to thousands more
]

# Fine-tune
fine_tuned_model = finetune(base_model, training_data)

# Use
response = fine_tuned_model("Review: Good")  # "positive"
```
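OpenAI's fine-tuning endpoint for chat models such as `gpt-3.5-turbo` expects a JSONL file in which each line is one `{"messages": [...]}` conversation, so prompt/completion pairs like the ones above need converting before upload. A sketch, assuming the file name `training_data.jsonl` used in the upload step below and a hypothetical system message:

```python
import json

training_data = [
    {"prompt": "Review: Great", "completion": "positive"},
    {"prompt": "Review: Bad", "completion": "negative"},
]

# Hypothetical system message; reuse the same one when calling the fine-tuned model
SYSTEM_MESSAGE = "Classify the sentiment of the review as positive or negative."


def to_chat_example(pair):
    """Convert one prompt/completion pair into a chat-format training example."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_MESSAGE},
            {"role": "user", "content": pair["prompt"]},
            {"role": "assistant", "content": pair["completion"]},
        ]
    }


with open("training_data.jsonl", "w", encoding="utf-8") as f:
    for pair in training_data:
        # JSONL: exactly one JSON object per line
        f.write(json.dumps(to_chat_example(pair)) + "\n")
```

The API also requires at least 10 examples per file, so a real training file needs far more rows than this two-line demo.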

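```python
import time

from openai import OpenAI

client = OpenAI()

# 1. Upload training data (JSONL; chat models expect one {"messages": [...]} object per line)
with open("training_data.jsonl", "rb") as f:
    training_file = client.files.create(file=f, purpose="fine-tune")

# 2. Start fine-tuning
fine_tune_job = client.fine_tuning.jobs.create(
    training_file=training_file.id,
    model="gpt-3.5-turbo",
)

# 3. Wait for the job to finish: fine_tuned_model stays None until the job succeeds
while fine_tune_job.status not in ("succeeded", "failed", "cancelled"):
    time.sleep(60)
    fine_tune_job = client.fine_tuning.jobs.retrieve(fine_tune_job.id)

if fine_tune_job.status != "succeeded":
    raise RuntimeError(f"Fine-tuning ended with status: {fine_tune_job.status}")

# 4. Use the fine-tuned model
response = client.chat.completions.create(
    model=fine_tune_job.fine_tuned_model,
    messages=[{"role": "user", "content": "Review: Good"}],
)
```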

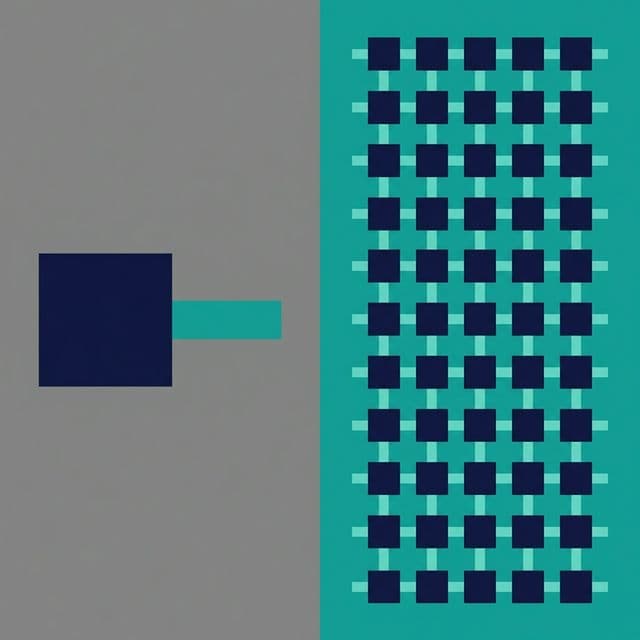

| Feature | Prompt Engineering | Fine-tuning |
|---|---|---|
| Time to start | Immediate | Hours to days |
| Cost | API call cost | Training cost + API cost |
| Data | Not needed | Hundreds to thousands of examples |
| Performance | Medium | High |
| Consistency | Low | High |
| Flexibility | High | Low |
| Maintenance | Easy | Difficult |

```python
# Example: multi-purpose chatbot (prompt engineering)
# `llm` is a placeholder for any text-completion call
def chatbot(user_input, task_type):
    if task_type == "translation":
        prompt = f"Translate to English: {user_input}"
    elif task_type == "summary":
        prompt = f"Summarize: {user_input}"
    elif task_type == "sentiment":
        prompt = f"Analyze sentiment: {user_input}"
    else:
        raise ValueError(f"Unknown task type: {task_type}")
    return llm(prompt)
```

```python
# Example: medical diagnosis assistant (fine-tuning)
# Fine-tune with thousands of medical data examples
medical_model = finetune(base_model, medical_data)

# Consistent and accurate diagnosis assistance
diagnosis = medical_model("Symptoms: headache, fever, cough")
```

Here are some of my actual project experiences.

Goal: Handle various customer inquiries

Choice: Prompt Engineering

Reason:

Implementation:

```python
system_prompt = """
You are a friendly customer service chatbot.
- Always use polite language
- Focus on problem solving
- Connect to an agent when needed
"""

response = llm(system_prompt + user_query)
```

Result: Deployed in 2 weeks, 80% customer satisfaction
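With a chat API, the system prompt from that implementation can go in its own `system` message instead of being concatenated onto the user's text, which keeps instructions and user input clearly separated. A minimal sketch with a hypothetical `build_messages` helper; its return value is the kind of `messages` list passed to `client.chat.completions.create` in the earlier OpenAI example:

```python
SYSTEM_PROMPT = """You are a friendly customer service chatbot.
- Always use polite language
- Focus on problem solving
- Connect to an agent when needed"""


def build_messages(system_prompt, user_query, history=()):
    """Build a chat `messages` list: system instructions, prior turns, then the new query."""
    messages = [{"role": "system", "content": system_prompt}]
    messages.extend(history)  # earlier {"role": ..., "content": ...} turns, if any
    messages.append({"role": "user", "content": user_query})
    return messages


messages = build_messages(SYSTEM_PROMPT, "I want a refund")
```

Passing earlier turns through `history` is what lets the bot keep context across a conversation without retraining anything.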

Goal: Find risky clauses in contracts

Choice: Fine-tuning

Reason:

Implementation:

```python
# Fine-tune with 1,000 contracts
legal_model = finetune(
    base_model="gpt-3.5-turbo",
    training_data=legal_contracts,
)

# Accurate and consistent analysis
risks = legal_model.analyze(contract)
```

Result: 95% accuracy, 70% reduction in lawyer review time

Goal: Classify reviews as positive/negative

1st attempt: Prompt Engineering

2nd attempt: Fine-tuning

Conclusion: Switched to fine-tuning → improved performance + cost savings

Recently, combining both methods has become popular.

Retrieval-augmented generation (RAG): automatically add relevant information to the prompt:

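```python
# 1. Search relevant documents
# (`vector_db` is a placeholder for your vector store; assumes it returns a list of strings)
relevant_docs = vector_db.search(user_query)

# 2. Include them in the prompt
context = "\n".join(relevant_docs)
prompt = f"""
Reference documents:
{context}

Question: {user_query}

Answer:
"""

response = llm(prompt)
```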
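`vector_db.search` stands in for a real embedding model plus vector store. To show the retrieve-then-prompt mechanics without external services, this sketch swaps in a toy bag-of-words cosine similarity (not a real embedding) over a hypothetical three-document knowledge base:

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    """Toy 'embedding': lowercase word counts (a real system would use an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def search(docs, query, k=2):
    """Return the k documents most similar to the query."""
    q = bag_of_words(query)
    return sorted(docs, key=lambda d: cosine(bag_of_words(d), q), reverse=True)[:k]


def build_rag_prompt(docs, user_query, k=2):
    context = "\n".join(f"- {d}" for d in search(docs, user_query, k))
    return f"Reference documents:\n{context}\n\nQuestion: {user_query}\n\nAnswer:"


# Hypothetical knowledge base
docs = [
    "Refunds are processed within 5 business days.",
    "Our store opens at 9 am on weekdays.",
    "Shipping is free for orders over $50.",
]
prompt = build_rag_prompt(docs, "How long do refunds take?", k=1)
```

Only the top-k documents go into the prompt, which keeps it short while still grounding the answer; swapping `bag_of_words` for a real embedding model leaves the rest of the flow unchanged.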

Refine the output with a prompt on top of a fine-tuned model:

```python
# Fine-tuned model (`load_model` is a placeholder for however you load it)
fine_tuned_model = load_model("my-fine-tuned-model")

# Steer the output format with the prompt
prompt = f"""
Format: JSON
Fields: sentiment, confidence, reason
Review: {review}
"""

response = fine_tuned_model(prompt)
```
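Asking for JSON only helps if the application checks what comes back, since a model can still return malformed or incomplete JSON. A sketch that validates the `sentiment`, `confidence`, and `reason` fields requested above; the allowed labels and the 0 to 1 confidence range are assumptions:

```python
import json

ALLOWED_SENTIMENTS = {"positive", "negative"}  # assumed label set


def parse_sentiment_response(raw):
    """Parse and validate the model's JSON output; raise ValueError if it is unusable."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as e:
        raise ValueError(f"Response is not valid JSON: {e}") from e
    if not isinstance(data, dict):
        raise ValueError("Expected a JSON object")
    missing = {"sentiment", "confidence", "reason"} - set(data)
    if missing:
        raise ValueError(f"Missing fields: {sorted(missing)}")
    if data["sentiment"] not in ALLOWED_SENTIMENTS:
        raise ValueError(f"Unexpected sentiment: {data['sentiment']!r}")
    try:
        confidence = float(data["confidence"])
    except (TypeError, ValueError) as e:
        raise ValueError(f"Confidence is not a number: {data['confidence']!r}") from e
    if not 0.0 <= confidence <= 1.0:
        raise ValueError(f"Confidence out of range: {confidence}")
    return {"sentiment": data["sentiment"], "confidence": confidence, "reason": str(data["reason"])}


result = parse_sentiment_response(
    '{"sentiment": "positive", "confidence": 0.93, "reason": "Praises the product"}'
)
```

On a `ValueError`, a common choice is to retry the request or fall back to a default, rather than passing broken data downstream.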

```python
# GPT-4 API cost (2024 baseline)
# Input: $0.03 / 1K tokens
# Output: $0.06 / 1K tokens

# Example: 100K requests/month
# Average prompt: 500 tokens
# Average response: 200 tokens
monthly_cost = (
    100_000 * 500 / 1000 * 0.03     # Input:  $1,500
    + 100_000 * 200 / 1000 * 0.06   # Output: $1,200
)  # = $2,700
```

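```python
# GPT-3.5 fine-tuning cost (launch pricing)
# Training: $0.008 / 1K tokens
# Usage: $0.012 / 1K input tokens, $0.016 / 1K output tokens

# Initial training cost (one-time): 5,000 examples x 500 tokens, one epoch
training_cost = 5_000 * 500 / 1000 * 0.008  # = $20 (billed tokens scale with the number of epochs)

# Monthly usage cost
# Prompts become shorter (100 tokens); responses stay at 200 tokens
monthly_cost = (
    100_000 * 100 / 1000 * 0.012     # Input:  $120
    + 100_000 * 200 / 1000 * 0.016   # Output: $320
)  # = $440

# Total cost (first month)
total = training_cost + monthly_cost  # = $20 + $440 = $460
```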

Conclusion: at high volume, a fine-tuned GPT-3.5 is much cheaper than prompting GPT-4!
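Wrapping the same arithmetic in functions makes it easy to plug in your own volumes and current prices. A sketch using the GPT-4 rates above and OpenAI's launch pricing for fine-tuned GPT-3.5 Turbo ($0.012 input, $0.016 output, $0.008 training per 1K tokens); prices change over time, so treat every rate as an input. The `epochs` parameter reflects that training is billed per token per epoch:

```python
def monthly_api_cost(requests, input_tokens, output_tokens, input_rate, output_rate):
    """Monthly API cost in dollars; rates are $ per 1K tokens."""
    per_request = input_tokens / 1000 * input_rate + output_tokens / 1000 * output_rate
    return requests * per_request


def training_cost(examples, tokens_per_example, rate, epochs=1):
    """One-time fine-tuning cost in dollars; billed tokens scale with the number of epochs."""
    return examples * tokens_per_example / 1000 * rate * epochs


# GPT-4 with long few-shot prompts
gpt4 = monthly_api_cost(100_000, 500, 200, input_rate=0.03, output_rate=0.06)
# Fine-tuned GPT-3.5 with short prompts
ft = monthly_api_cost(100_000, 100, 200, input_rate=0.012, output_rate=0.016)
train = training_cost(5_000, 500, rate=0.008)

print(f"GPT-4: ${gpt4:,.0f}/month")                               # GPT-4: $2,700/month
print(f"Fine-tuned: ${ft:,.0f}/month + ${train:,.0f} training")  # Fine-tuned: $440/month + $20 training
```

Note that the output tokens dominate the fine-tuned bill here, which is why the response length matters as much as the prompt length.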

Try prompts first, and consider fine-tuning when you hit their limits:

```python
# `llm`, the prompt templates, and `consider_finetuning` are placeholders

# Step 1: Basic prompt
result = llm("Classify: " + text)

# Step 2: Few-shot prompt
result = llm(few_shot_prompt + text)

# Step 3: Chain-of-thought prompt
result = llm(cot_prompt + text)

# Step 4: Consider fine-tuning if accuracy is still too low
if accuracy < 0.90:
    consider_finetuning()
```
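The `accuracy` in step 4 has to come from somewhere: a held-out set of labeled examples, scored the same way at every step. A minimal sketch, where `classify` is any function from text to label (a prompt-based call or a fine-tuned model); the keyword classifier and test set are hypothetical stand-ins used only to exercise the harness:

```python
def evaluate_accuracy(classify, test_set):
    """Fraction of (text, expected_label) pairs that `classify` labels correctly."""
    if not test_set:
        raise ValueError("test_set is empty")
    correct = sum(1 for text, expected in test_set if classify(text).strip().lower() == expected)
    return correct / len(test_set)


def keyword_classifier(text):
    """Hypothetical stand-in for an LLM call; naive substring matching misfires on 'Nothing'."""
    return "negative" if any(w in text.lower() for w in ("bad", "not", "broken")) else "positive"


test_set = [
    ("Best product ever", "positive"),
    ("Not good", "negative"),
    ("Arrived broken", "negative"),
    ("Works as described", "positive"),
    ("Bad value for money", "negative"),
    ("Nothing to complain about", "positive"),
]

accuracy = evaluate_accuracy(keyword_classifier, test_set)
if accuracy < 0.90:
    print(f"Accuracy {accuracy:.0%}: consider fine-tuning")
```

Reusing one fixed test set across every prompt version and fine-tuned model is what makes the numbers comparable.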

Data quality is the most important factor in fine-tuning:

```python
# Bad example: noisy, inconsistent data
bad_data = [
    {"prompt": "good", "completion": "positive"},       # Too short, no "Review:" prefix
    {"prompt": "bad product!!!", "completion": "neg"},  # Inconsistent label ("neg" vs "negative")
]

# Good example: clean and consistent data
good_data = [
    {"prompt": "Review: This product is excellent", "completion": "positive"},
    {"prompt": "Review: Not satisfied with quality", "completion": "negative"},
]
```
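Checks like these are easy to automate before paying for a training run. A sketch that flags the problems in the bad example above; the allowed labels, the `Review: ` prefix, and the 10-character minimum are assumptions chosen to match the good example:

```python
ALLOWED_LABELS = {"positive", "negative"}
PREFIX = "Review: "
MIN_REVIEW_CHARS = 10  # assumed threshold for "too short"


def find_data_issues(data):
    """Return (index, problem) pairs for examples that break the dataset's conventions."""
    issues = []
    seen = set()
    for i, ex in enumerate(data):
        prompt = ex.get("prompt", "")
        completion = ex.get("completion", "")
        if completion not in ALLOWED_LABELS:
            issues.append((i, f"unexpected label {completion!r}"))
        if prompt.startswith(PREFIX):
            text = prompt[len(PREFIX):]
        else:
            issues.append((i, "missing 'Review: ' prefix"))
            text = prompt
        if len(text.strip()) < MIN_REVIEW_CHARS:
            issues.append((i, "review text too short"))
        if prompt in seen:
            issues.append((i, "duplicate prompt"))
        seen.add(prompt)
    return issues


bad_data = [
    {"prompt": "good", "completion": "positive"},
    {"prompt": "bad product!!!", "completion": "neg"},
]
good_data = [
    {"prompt": "Review: This product is excellent", "completion": "positive"},
    {"prompt": "Review: Not satisfied with quality", "completion": "negative"},
]

for index, problem in find_data_issues(bad_data):
    print(index, problem)
```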

Don't try to get it perfect all at once; improve gradually:

```python
# v1: Basic prompt
v1 = basic_prompt(text)

# v2: Add examples
v2 = few_shot_prompt(text)

# v3: Fine-tune with 100 samples
v3 = finetune(model, data_100)

# v4: Fine-tune with 1,000 samples
v4 = finetune(model, data_1000)
```

Track metrics to understand when to switch from prompts to fine-tuning:

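```python
# Track accuracy over time
metrics = {
    "prompt_v1": 0.70,
    "prompt_v2": 0.75,
    "prompt_v3": 0.78,    # Plateauing
    "finetune_v1": 0.92,  # Significant jump
}

# Switch when prompt engineering plateaus (latest prompt iteration gains 3 points or less)
improvement = round(metrics["prompt_v3"] - metrics["prompt_v2"], 3)  # 0.03
if improvement <= 0.03:
    switch_to_finetuning()  # placeholder
```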

Fine-tuned models need retraining when requirements change. Factor this into your decision.

```python
# Prompt: easy to update
new_prompt = old_prompt + "\nNew requirement: ..."

# Fine-tuning: need to retrain
new_model = finetune(base_model, new_training_data)  # Time + cost
```

Here's how I decide between prompt engineering and fine-tuning:

1. Do you have 500+ labeled examples? No → prompt engineering. Yes → continue.
2. Is consistency critical? No → prompt engineering. Yes → continue.
3. Will you process 10,000+ requests/month? No → try prompt engineering first. Yes → continue.
4. Is accuracy above 90% required? No → prompt engineering might suffice. Yes → fine-tuning.
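The same checklist can be encoded as a small function, which is handy for recording the decision next to the project's code. A sketch; the thresholds are the ones in the checklist above and are worth tuning to your own situation:

```python
def choose_approach(labeled_examples, consistency_critical, monthly_requests, required_accuracy):
    """Walk the decision checklist and return a recommendation."""
    if labeled_examples < 500:
        return "prompt engineering"
    if not consistency_critical:
        return "prompt engineering"
    if monthly_requests < 10_000:
        return "try prompt engineering first"
    if required_accuracy <= 0.90:
        return "prompt engineering might suffice"
    return "fine-tuning"


print(choose_approach(1_000, True, 100_000, 0.95))  # fine-tuning
```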

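```python
# Calculate the break-even point (500 + 200 tokens for GPT-4, 100 + 200 tokens fine-tuned)
prompt_cost_per_request = 500 / 1000 * 0.03 + 200 / 1000 * 0.06       # GPT-4: $0.027
finetune_cost_per_request = 100 / 1000 * 0.012 + 200 / 1000 * 0.016  # Fine-tuned GPT-3.5: $0.0044
finetune_training_cost = 20  # $20 one-time

# Break-even: one-time training cost / savings per request
requests = finetune_training_cost / (prompt_cost_per_request - finetune_cost_per_request)
# = 20 / 0.0226 ≈ 885 requests

# If you'll process more than ~885 requests, fine-tuning is cheaper
```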

Prompt engineering and fine-tuning are two main methods for LLM customization. Prompt engineering is fast and flexible but has limits in performance and consistency. Fine-tuning provides high performance and consistency but requires time and cost.

I start most projects with prompt engineering: I quickly build a prototype and identify its limits through actual use, then switch to fine-tuning only when it's really necessary.

The key is the cost-benefit ratio. If prompts are sufficient, there's no need to fine-tune. But if high volume, high accuracy, and consistency matter, fine-tuning is the answer.

Remember: start simple, measure everything, and scale complexity only when data proves it's necessary. The best solution isn't always the most sophisticated one; it's the one that solves your problem efficiently.

In my experience, the decision between prompt engineering and fine-tuning often comes down to three factors: time, data, and scale.

If you're just starting out or building a prototype, prompt engineering is almost always the right choice. It's fast, flexible, and requires no training data. You can iterate quickly and validate your idea before investing in fine-tuning.

But if you're building a production system that will process thousands of requests daily, need consistent high-quality outputs, and have the training data available, fine-tuning will pay for itself quickly through better performance and lower operational costs.

The key is to be pragmatic. Don't fine-tune just because it's trendy. Don't stick with prompts just because they're easier. Measure, analyze, and choose the approach that delivers the best results for your specific situation. Your users will thank you for it.

One final piece of advice: document your decision-making process. Whether you choose prompts or fine-tuning, write down why you made that choice, what metrics you're tracking, and what would trigger a switch to the other approach. This documentation will be invaluable as your project evolves and your team grows, and future you (and your teammates) will appreciate the clarity. Iterate based on data and optimize for your users' needs first. The right choice today might change tomorrow, and that's perfectly fine. Stay flexible and data-driven. Good luck with your implementation!