Designing a URL Shortener: Looks Simple, Full of Traps

A URL shortener sounds trivial until you face hash collisions, expiration policies, and redirect latency. A microcosm of system design.

A URL shortener sounds trivial until you face hash collisions, expiration policies, and redirect latency. A microcosm of system design.

Foundation of DB Design. Splitting tables to prevent Anomalies. 1NF, 2NF, 3NF explained simply.

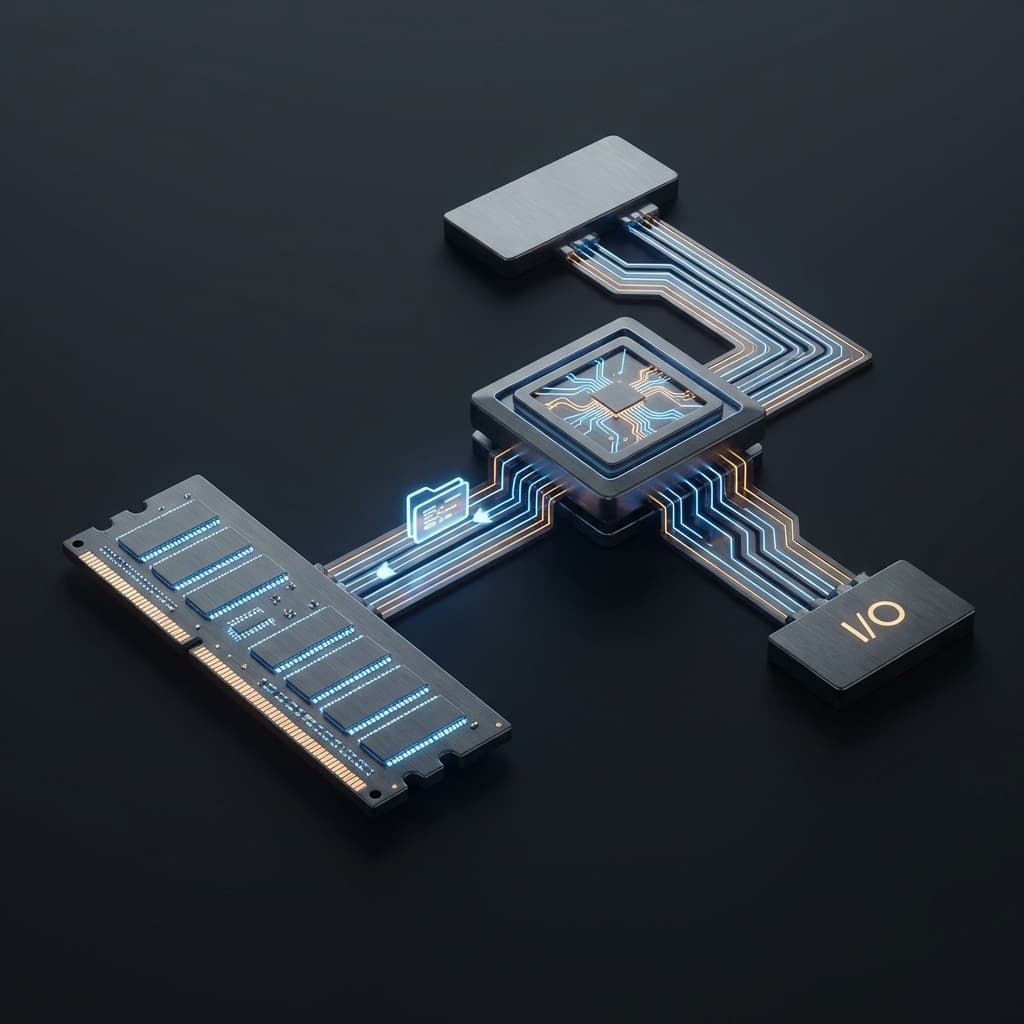

Why is the CPU fast but the computer slow? I explore the revolutionary idea of the 80-year-old Von Neumann architecture and the fatal bottleneck it left behind.

ChatGPT answers questions. AI Agents plan, use tools, and complete tasks autonomously. Understanding this difference changes how you build with AI.

Integrating a payment API is just the beginning. Idempotency, refund flows, and double-charge prevention make payment systems genuinely hard.

When I started my bit.ly clone project, my mental model was dead simple: Take long URL → hash it → store in DB → redirect. One day, tops.

But as I sat down to write the design doc, questions started piling up. What about hash collisions? What if someone submits the same URL twice? How do we store a billion URLs? Click analytics? Expiration? 301 or 302 redirect?

I ended up staring at a whiteboard for two days. A URL shortener looks trivial on the surface, but it's actually a condensed microcosm of every core system design concept. Like a cappuccino - looks like just coffee, milk, and foam, but the ratios and timing make all the difference.

At first I thought this was just "make URLs shorter." But shipping this as an actual service meant accounting for way more.

Functional requirements:That's when it hit me. This wasn't a "simple CRUD app." This was a proper distributed systems design problem.

There are two main approaches to generating short URLs.

This was my first instinct. MD5 or SHA-256 the URL → convert to Base62.

import hashlib

import string

BASE62 = string.digits + string.ascii_lowercase + string.ascii_uppercase

def base62_encode(num):

if num == 0:

return BASE62[0]

result = []

while num:

result.append(BASE62[num % 62])

num //= 62

return ''.join(reversed(result))

def generate_short_url(long_url):

# Generate MD5 hash

hash_bytes = hashlib.md5(long_url.encode()).digest()

# Convert first 8 bytes to integer

hash_int = int.from_bytes(hash_bytes[:8], 'big')

# Base62 encode and truncate to 7 chars

short_code = base62_encode(hash_int)[:7]

return f"https://short.link/{short_code}"

# Test

print(generate_short_url("https://www.example.com/very/long/url"))

# https://short.link/aB3xK9m

Pros:

Traps:

Use database auto-increment ID, convert to Base62.

class URLShortener {

private counter: number = 1000000; // Start at 6 chars

private base62Encode(num: number): string {

const chars = '0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ';

let result = '';

while (num > 0) {

result = chars[num % 62] + result;

num = Math.floor(num / 62);

}

return result || '0';

}

async createShortURL(longURL: string): Promise<string> {

// Get next ID from DB (transactional)

const id = await this.getNextID();

const shortCode = this.base62Encode(id);

await this.db.insert({

id,

short_code: shortCode,

long_url: longURL,

created_at: new Date(),

clicks: 0

});

return `https://short.link/${shortCode}`;

}

private async getNextID(): Promise<number> {

// Use PostgreSQL SEQUENCE

const result = await this.db.query("SELECT nextval('url_id_seq')");

return result.rows[0].nextval;

}

}

Pros:

Traps:

I went with counter-based. Writing collision handling logic felt harder than scaling an ID generator. Plus PostgreSQL SEQUENCE can issue tens of thousands per second, and we can shard by ID ranges later.

Initial schema was straightforward.

CREATE TABLE urls (

id BIGSERIAL PRIMARY KEY,

short_code VARCHAR(10) UNIQUE NOT NULL,

long_url TEXT NOT NULL,

user_id BIGINT,

created_at TIMESTAMP DEFAULT NOW(),

expires_at TIMESTAMP,

clicks BIGINT DEFAULT 0

);

CREATE INDEX idx_short_code ON urls(short_code);

CREATE INDEX idx_expires_at ON urls(expires_at) WHERE expires_at IS NOT NULL;

But adding analytics created a dilemma. We need to store IP, location, referer, user-agent for every click. Same table?

Option 1: Denormalize (clicks as JSON)ALTER TABLE urls ADD COLUMN click_details JSONB;

Pros: Single query, no joins Cons: JSON bloats with billion clicks, slow analytical queries

Option 2: Normalize (separate table)CREATE TABLE clicks (

id BIGSERIAL PRIMARY KEY,

url_id BIGINT REFERENCES urls(id),

clicked_at TIMESTAMP DEFAULT NOW(),

ip_address INET,

country VARCHAR(2),

referer TEXT,

user_agent TEXT

);

CREATE INDEX idx_url_id_clicked_at ON clicks(url_id, clicked_at DESC);

Pros: Scalable, time-series analysis friendly Cons: Join overhead, storage explosion

I chose a hybrid approach:

urls.clicks counter increments real-time (from cache)clicks table gets batch inserted asynchronouslyLike a cafe cash register - money goes in the drawer fast, bookkeeping happens later.

URL shorteners are extremely read-heavy. The same short URL can get thousands of clicks. Hitting the DB every time is suicide.

class URLService {

private redis: RedisClient;

private db: PostgresClient;

async redirect(shortCode: string): Promise<string> {

// Step 1: Check Redis cache

const cached = await this.redis.get(`url:${shortCode}`);

if (cached) {

// Async increment click count

this.incrementClickCount(shortCode);

return cached;

}

// Step 2: Query DB

const url = await this.db.query(

'SELECT long_url, expires_at FROM urls WHERE short_code = $1',

[shortCode]

);

if (!url) {

throw new Error('URL not found');

}

// Check expiration

if (url.expires_at && new Date() > url.expires_at) {

throw new Error('URL expired');

}

// Step 3: Cache with TTL (1 hour)

await this.redis.setex(`url:${shortCode}`, 3600, url.long_url);

this.incrementClickCount(shortCode);

return url.long_url;

}

private async incrementClickCount(shortCode: string) {

// Increment in Redis

await this.redis.incr(`clicks:${shortCode}`);

// Flush to DB every 100 clicks

const count = await this.redis.get(`clicks:${shortCode}`);

if (parseInt(count) % 100 === 0) {

await this.flushClicksToDB(shortCode);

}

}

}

This architecture cut DB load by 90%. Redis handles 100K requests/second easily.

I didn't realize redirect status codes could impact business outcomes.

Initially I wanted 301 for performance. But there were problems:

Verdict: 302. Analytics data mattered way more than 10ms latency.

Though for sponsor links or permanent redirects, we offer 301 as an option.

What happens when you store a billion URLs?

Storage math:Seems fine. But click data is the killer.

What I learned designing a URL shortener:

Building a bit.ly clone wasn't a one-day project - it was a full system design bootcamp. The simpler a problem looks, the more you should respect its hidden complexity. Like those LeetCode Easy problems that are actually Medium in disguise.

Next time someone says "that's easy," I'm showing them my whiteboard photo. It's covered in arrows and question marks.