AI Agents: How Autonomous AI Systems Actually Work

ChatGPT answers questions. AI Agents plan, use tools, and complete tasks autonomously. Understanding this difference changes how you build with AI.

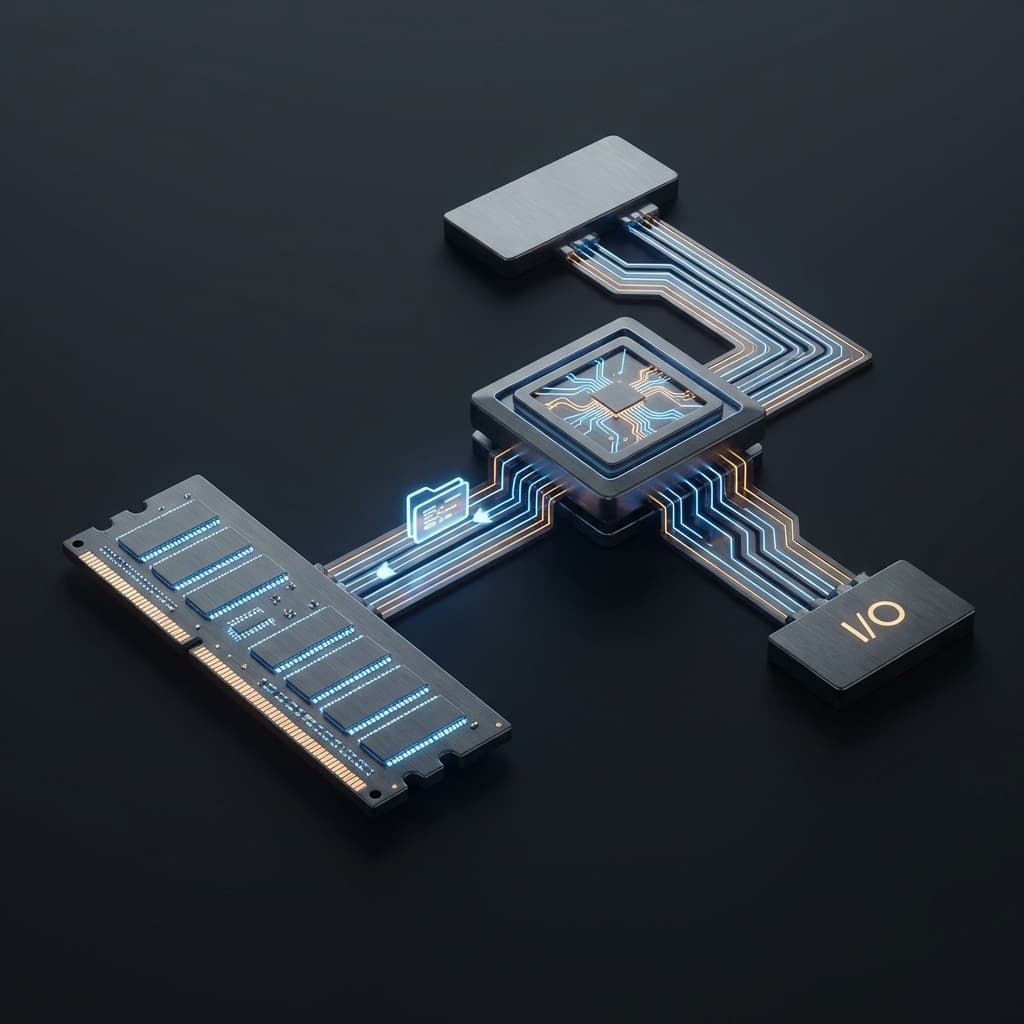

When you ask ChatGPT to "write a blog post," it writes one. But that's it. It doesn't save the file, create images, or publish to your blog. It just outputs text in a chat window.

Ask Claude Code the same thing? It creates a file, checks your directory structure, sets up image paths, and even makes a git commit. This difference is what separates chatbots from agents.

The first time I experienced this, it blew my mind. I realized LLMs don't just answer—they can actually do things. That made me curious about the architecture. How do agents plan, use tools, and complete tasks autonomously?

The answer came down to one word: loops. Agents don't respond once and stop. They observe → think → act → observe again, repeating until they achieve their goal. Understanding this structure clarified when agents are powerful and when they're overkill.

The lightbulb moment for me was this: Agents aren't functions. They're while-loops.

A typical LLM call looks like this:

```python
# Chatbot: one call, one response
response = llm.chat("Write a blog post")
print(response)  # Done
```

But an agent works like this:

```python
# Agent: repeat until goal achieved
# (llm, get_current_state, execute_tool, is_goal_achieved are placeholders)
goal = "Write a blog post and save it as a file"
max_iterations = 10
history = []  # what the agent has done so far

for i in range(max_iterations):
    # 1. Observe: assess current state
    observation = get_current_state()

    # 2. Think: plan next action
    action = llm.plan(goal, observation, history)

    # 3. Act: execute tool
    result = execute_tool(action)
    history.append((action, result))

    # 4. Check: goal achieved?
    if is_goal_achieved(goal, result):
        break
```

This loop structure is the essence of agents. They're not question-answer systems—they're goal-oriented systems that act iteratively.

Think of it like navigation. A chatbot tells you "take Exit 3 and go straight." An agent actually walks, notices a blocked path, and reroutes in real time.
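To watch the loop actually run without an API key, here is a toy sketch in which a scripted function stands in for the LLM. Everything in it (`fake_plan`, the `TOOLS` registry, the in-memory `files` dict, `goal_achieved`) is a hypothetical stand-in, not part of any agent framework; only the observe → think → act → check shape mirrors the loop above.

```python
from typing import Callable

# In-memory "file system" so the sketch has no side effects
files: dict[str, str] = {}


def write_file(path: str, content: str) -> str:
    files[path] = content
    return f"saved {path}"


def list_files() -> str:
    return ", ".join(sorted(files)) or "(empty)"


TOOLS: dict[str, Callable[..., str]] = {"write_file": write_file, "list_files": list_files}


def fake_plan(goal: str, observation: str, history: list) -> dict:
    """Hypothetical stand-in for llm.plan(): a real agent would ask the model here."""
    if not history:
        return {"tool": "list_files", "args": {}}  # look around first
    return {"tool": "write_file", "args": {"path": "post.md", "content": "# Draft"}}


def goal_achieved() -> bool:
    return "post.md" in files


def run_agent(goal: str, max_iterations: int = 10) -> list:
    history = []
    for _ in range(max_iterations):
        observation = list_files()                        # 1. Observe
        action = fake_plan(goal, observation, history)    # 2. Think
        result = TOOLS[action["tool"]](**action["args"])  # 3. Act
        history.append((action["tool"], result))
        if goal_achieved():                               # 4. Check
            break
    return history


history = run_agent("Write a blog post and save it as a file")
print(history)  # [('list_files', '(empty)'), ('write_file', 'saved post.md')]
```

The loop itself would not change if `fake_plan` called a real model instead; the intelligence lives in the planner, the persistence lives in the loop.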

The defining difference between agents and chatbots is tool usage. LLMs only output text. Agents call functions, hit APIs, read files, and execute code.

This works through function calling. Providers like OpenAI and Anthropic have trained their models to output structured JSON specifying which function to call and with what parameters.

```python
# Tool definitions provided to the agent
# (parameters simplified here; real APIs expect a JSON Schema object)
tools = [
    {
        "name": "write_file",
        "description": "Write content to a file",
        "parameters": {"path": "string", "content": "string"},
    },
    {
        "name": "search_web",
        "description": "Search the web for information",
        "parameters": {"query": "string"},
    },
]

# LLM decides to call a tool
response = llm.chat(
    "Write a blog post and save it to post.mdx",
    tools=tools,
)

# LLM response (structured data, nothing has executed yet)
{
    "tool_call": {
        "name": "write_file",
        "arguments": {
            "path": "post.mdx",
            "content": "# My Blog Post\n...",
        }
    }
}

# Agent framework executes the tool
result = execute_tool(response.tool_call)
```

The key insight: LLMs don't execute tools directly. They just decide which tools to use. The agent framework handles execution. The LLM is the brain. Tools are the hands and feet.
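Because the model only returns data, the framework side is ordinary code. A minimal sketch, assuming the tool call arrives as a JSON string shaped like the response above (real provider SDKs return their own response objects instead); `REGISTRY` and this `execute_tool` are hypothetical names:

```python
import json
import tempfile
from pathlib import Path


def write_file(path: str, content: str) -> str:
    Path(path).write_text(content)
    return f"File saved: {path}"


def search_web(query: str) -> str:
    return f"Search results for '{query}' (mock)"


# Registry: the tool name the model sees -> the function the framework runs
REGISTRY = {"write_file": write_file, "search_web": search_web}


def execute_tool(raw_response: str) -> str:
    """Run the tool named in a JSON response shaped like {"tool_call": {...}}."""
    call = json.loads(raw_response)["tool_call"]
    func = REGISTRY[call["name"]]     # the model only chose the name...
    return func(**call["arguments"])  # ...the framework does the actual work


# Example: write into a temp directory rather than the current one
out = Path(tempfile.mkdtemp()) / "post.mdx"
raw = json.dumps({"tool_call": {"name": "write_file",
                                "arguments": {"path": str(out), "content": "# My Blog Post\n"}}})
print(execute_tool(raw))
```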

When you say "build me a blog," an agent can't handle that in one step. It has to break the goal down into smaller steps first.

There are two main approaches to planning.

Approach 1: Zero-shot Planning — LLM creates entire plan upfront

```python
plan = llm.chat("""
Goal: Build a blog
Current state: src/content/posts/ directory exists
List the steps as JSON:
""")

# Response
[
    {"step": 1, "action": "read_directory", "args": {"path": "src/content/posts"}},
    {"step": 2, "action": "write_file", "args": {"path": "new-post.mdx"}},
    ...
]
```
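Executing a zero-shot plan is then a plain loop over the steps. This sketch assumes the step format shown above (`step`, `action`, `args`); the in-memory `workspace`, both tool functions, and `execute_plan` are hypothetical stand-ins:

```python
import json

# Hypothetical in-memory workspace standing in for the real file system
workspace = {"src/content/posts": ["hello.mdx"]}


def read_directory(path: str) -> list:
    return workspace[path]


def write_file(path: str) -> str:
    workspace.setdefault("written", []).append(path)
    return f"wrote {path}"


ACTIONS = {"read_directory": read_directory, "write_file": write_file}


def execute_plan(plan_json: str) -> list:
    results = []
    for step in sorted(json.loads(plan_json), key=lambda s: s["step"]):
        func = ACTIONS.get(step["action"])
        if func is None:
            # The plan was fixed up front, so there is nothing to fall back on
            raise ValueError(f"step {step['step']}: unknown action {step['action']!r}")
        results.append(func(**step["args"]))
    return results


plan = """[
  {"step": 1, "action": "read_directory", "args": {"path": "src/content/posts"}},
  {"step": 2, "action": "write_file", "args": {"path": "new-post.mdx"}}
]"""
print(execute_plan(plan))  # [['hello.mdx'], 'wrote new-post.mdx']
```

Because the whole plan is decided before anything runs, a step that turns out to be wrong simply stops execution here; nothing gets re-planned.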

Approach 2: ReAct Pattern — Alternate between Reasoning and Acting

ReAct is my favorite pattern. It makes the agent explain its reasoning at each step.

```python
# ReAct loop
thoughts = []

for step in range(max_steps):
    # Reasoning: what should I do next?
    thought = llm.chat(f"""
Goal: {goal}
Previous actions: {thoughts}
Thought: Analyze the situation and decide next action.
""")
    thoughts.append(thought)

    # Acting: execute tool
    if thought.contains_action():
        action = parse_action(thought)
        result = execute_tool(action)
        thoughts.append(f"Observation: {result}")
```

The power of ReAct is explicit reasoning. You can see why the agent made each choice. Incredibly useful for debugging.

A real ReAct log looks like this:

```text
Thought: To write a blog post, I first need to check the format of existing posts.
Action: read_file("src/content/posts/example.mdx")
Observation: Confirmed frontmatter needs title, date, and tags.
Thought: Now I can write a new post with the same format.
Action: write_file("src/content/posts/new.mdx", content)
Observation: File successfully created.
Thought: Goal achieved.
```
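The `parse_action` helper in the ReAct loop is left abstract. One minimal way to write it, assuming the model is prompted to emit exactly one line in the `Action: tool_name(args)` format from this log, is a regular expression plus `ast.literal_eval`, which parses Python literals without executing code:

```python
import ast
import re

# Matches a line such as: Action: read_file("src/content/posts/example.mdx")
ACTION_RE = re.compile(r"^Action:\s*(\w+)\((.*)\)\s*$", re.MULTILINE)


def parse_action(thought: str):
    """Return (tool_name, args_tuple), or None if the text has no Action line."""
    match = ACTION_RE.search(thought)
    if match is None:
        return None
    name, raw_args = match.groups()
    # Wrap in a tuple literal so one or several comma-separated args both parse
    args = ast.literal_eval(f"({raw_args},)") if raw_args.strip() else ()
    return name, args


step = 'Thought: I need the existing format.\nAction: read_file("src/content/posts/example.mdx")'
print(parse_action(step))  # ('read_file', ('src/content/posts/example.mdx',))
print(parse_action("Thought: Goal achieved."))  # None
```

This only accepts literal arguments: a bare name such as `content` in the `write_file` line of the log would make `literal_eval` raise `ValueError`, so in practice you would ask the model to inline the value or emit JSON arguments instead.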

Agents use two types of memory.

Short-term Memory (conversation history)

Context for the current task. "What did I just do?"

```python
conversation_history = [
    {"role": "user", "content": "Write a blog post"},
    {"role": "assistant", "content": "What topic?"},
    {"role": "user", "content": "About AI Agents"},
    {"role": "assistant", "content": "Thought: Starting post on AI Agents..."},
]
```

The problem: this list grows without bound. Even with 128k-token context windows, long tasks fill it up fast, so you summarize or drop old messages.
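A minimal sketch of the "drop old messages" option, assuming every message has string `content` and using roughly four characters per token as a crude stand-in for a real tokenizer. `trim_history` and `estimate_tokens` are hypothetical helpers; the function always keeps the first message (here, the original request) plus as many of the most recent messages as fit the budget:

```python
def estimate_tokens(message: dict) -> int:
    # Crude heuristic (~4 characters per token); use a real tokenizer in practice
    return len(message["content"]) // 4 + 1


def trim_history(history: list[dict], max_tokens: int) -> list[dict]:
    """Keep history[0] plus the newest messages that fit within max_tokens."""
    if not history:
        return []
    first, rest = history[0], history[1:]
    budget = max_tokens - estimate_tokens(first)
    kept = []
    for message in reversed(rest):  # walk from newest to oldest
        cost = estimate_tokens(message)
        if cost > budget:
            break
        kept.append(message)
        budget -= cost
    return [first] + kept[::-1]  # restore chronological order


history = [{"role": "user", "content": "Write a blog post"}] + [
    {"role": "assistant", "content": f"step {i}: " + "x" * 40} for i in range(10)
]
trimmed = trim_history(history, max_tokens=40)
print([m["content"][:6] for m in trimmed])  # ['Write ', 'step 8', 'step 9']
```

Summarizing instead of dropping follows the same shape: replace the discarded middle with one LLM-written summary message.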

Long-term Memory (vector database)

Storage for past tasks and knowledge. Uses RAG (Retrieval-Augmented Generation).

```python
# Store past work
vector_db.store({
    "content": "Blog post frontmatter format",
    "metadata": {"date": "2026-01-10", "task": "blog_writing"}
})

# Retrieve relevant info
relevant_memories = vector_db.search(
    query="how to write blog posts",
    top_k=3
)

# Provide to agent
context = "\n".join([m.content for m in relevant_memories])
prompt = f"Relevant info:\n{context}\n\nGoal: {goal}"
```

Long-term memory lets agents reference "how I did this before." Powerful for repeated tasks.
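Real long-term memory uses an embedding model and a vector database, but the store-then-search mechanics fit in a few lines. This toy sketch swaps in bag-of-words vectors and cosine similarity for real embeddings, so it only matches on shared words; `ToyVectorDB` and `embed` are illustrative names, not a library API:

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Stand-in for an embedding model: lowercase word counts
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorDB:
    def __init__(self):
        self.items = []  # (vector, record) pairs

    def store(self, record: dict) -> None:
        self.items.append((embed(record["content"]), record))

    def search(self, query: str, top_k: int = 3) -> list:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [record for _, record in ranked[:top_k]]


db = ToyVectorDB()
db.store({"content": "Blog post frontmatter format: title, date, tags", "metadata": {"task": "blog_writing"}})
db.store({"content": "Deploy script runs on every push", "metadata": {"task": "ci"}})
print(db.search("how to write blog posts", top_k=1)[0]["metadata"])  # {'task': 'blog_writing'}
```

Note that "posts" and "post" count as different words here; real embeddings are what make retrieval robust to that kind of variation.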

Claude Code (what I'm using now)

Automates coding tasks. Say "add tests" and it works through the steps on its own.

Software engineer agent. Give it a GitHub issue and it takes it from there.

AutoGPT: a general-purpose goal achiever. Say "research competitors and create a report" and it plans and runs the steps itself.

The difference is domain specialization. Claude Code uses coding-specific tools (file read/write, git, terminal). AutoGPT uses general tools (web browsing, file system).

To truly understand agents, build one. Here's a minimal agent I created:

```python
import json

import openai  # openai>=1.0 SDK; reads OPENAI_API_KEY from the environment


class SimpleAgent:
    def __init__(self):
        # Tool name (as the model sees it) -> Python method that runs it
        self.tools = {
            "search": self.search_web,
            "calculate": self.calculate,
            "write_file": self.write_file,
        }
        self.history = []

    def run(self, goal, max_iterations=5):
        """Agent main loop"""
        print(f"Goal: {goal}\n")
        # State the goal once, at the start of the conversation
        self.history = [{"role": "user", "content": goal}]

        for i in range(max_iterations):
            print(f"--- Iteration {i+1} ---")

            # 1. Think: decide next action
            response = openai.chat.completions.create(
                model="gpt-4",
                messages=[
                    {"role": "system", "content": self.get_system_prompt()},
                    *self.history,
                ],
                tools=self.get_tool_definitions(),
            )
            message = response.choices[0].message

            # 2. Act: execute tool if called
            if message.tool_calls:
                # Record the assistant turn (with all of its tool calls) once
                self.history.append(message)

                for tool_call in message.tool_calls:
                    tool_name = tool_call.function.name
                    tool_args = json.loads(tool_call.function.arguments)
                    print(f"Action: {tool_name}({tool_args})")

                    # Execute tool, but don't crash on a tool the model made up
                    if tool_name in self.tools:
                        result = self.tools[tool_name](**tool_args)
                    else:
                        result = f"Error: unknown tool '{tool_name}'"
                    print(f"Result: {result}\n")

                    # Every tool result must reference the call it answers
                    self.history.append({
                        "role": "tool",
                        "content": str(result),
                        "tool_call_id": tool_call.id,
                    })
            else:
                # No tool call = task complete
                print(f"Final Answer: {message.content}")
                break

    def get_system_prompt(self):
        return """You are an agent that uses tools to achieve goals.
Use the available tools step-by-step to achieve the goal.
When no more tools are needed, provide your final answer."""

    def get_tool_definitions(self):
        return [
            {
                "type": "function",
                "function": {
                    "name": "search",
                    "description": "Search the web for information",
                    "parameters": {
                        "type": "object",
                        "properties": {
                            "query": {"type": "string"}
                        },
                        "required": ["query"]
                    }
                }
            },
            {
                "type": "function",
                "function": {
                    "name": "calculate",
                    "description": "Calculate a mathematical expression",
                    "parameters": {
                        "type": "object",
                        "properties": {
                            "expression": {"type": "string"}
                        },
                        "required": ["expression"]
                    }
                }
            },
            {
                # write_file must be declared here too, or the model can never call it
                "type": "function",
                "function": {
                    "name": "write_file",
                    "description": "Write content to a file",
                    "parameters": {
                        "type": "object",
                        "properties": {
                            "path": {"type": "string"},
                            "content": {"type": "string"}
                        },
                        "required": ["path", "content"]
                    }
                }
            }
        ]

    def search_web(self, query):
        # In reality, would call a search API (e.g. Google); mocked here
        return f"Search results for '{query}' (mock)"

    def calculate(self, expression):
        # eval() on model output is dangerous; stripping builtins limits the damage,
        # but use a real expression parser outside a demo
        return eval(expression, {"__builtins__": {}}, {})

    def write_file(self, path, content):
        with open(path, 'w') as f:
            f.write(content)
        return f"File saved: {path}"


# Run it
agent = SimpleAgent()
agent.run("Calculate how many times bigger Seoul's population is than Busan's and save to result.txt")
```

It's short, but it contains all the essentials: the loop, tool calling, and history management. Scale this up and you get sophisticated agents.

Real problems I've hit using agents:

Hallucination

LLMs make things up. They try to call functions that don't exist or pass the wrong parameters.

```python
# Agent tries to call
{"tool": "delete_all_files", "args": {}}  # This tool doesn't exist!
```

Fix: write clear tool definitions and validate every tool call before executing it.
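A minimal sketch of that validation, assuming tool definitions use the same JSON Schema shape as in `SimpleAgent` (`properties`, `required`). It only checks the tool name, required and unexpected arguments, and string types; `validate_call` and `TOOL_SCHEMAS` are hypothetical names, and a real framework might run a full JSON Schema validator instead:

```python
TOOL_SCHEMAS = {
    "write_file": {
        "type": "object",
        "properties": {"path": {"type": "string"}, "content": {"type": "string"}},
        "required": ["path", "content"],
    },
}


def validate_call(name: str, args: dict) -> list[str]:
    """Return a list of problems; an empty list means the call looks valid."""
    schema = TOOL_SCHEMAS.get(name)
    if schema is None:
        return [f"unknown tool '{name}'; available: {sorted(TOOL_SCHEMAS)}"]
    problems = []
    props = schema["properties"]
    for key in schema.get("required", []):
        if key not in args:
            problems.append(f"missing required argument '{key}'")
    for key, value in args.items():
        if key not in props:
            problems.append(f"unexpected argument '{key}'")
        elif props[key].get("type") == "string" and not isinstance(value, str):
            problems.append(f"argument '{key}' must be a string")
    return problems


print(validate_call("delete_all_files", {}))
# ["unknown tool 'delete_all_files'; available: ['write_file']"]
print(validate_call("write_file", {"path": "a.md"}))
# ["missing required argument 'content'"]
```

Returning the problems instead of raising lets you send them back to the model as the tool result, which gives it a chance to correct the call on the next iteration.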

Infinite Loops

If an agent can't achieve its goal, it can loop forever: "Read file" → "File not found" → "Read file again" → repeat forever.

Fix: cap the loop with max_iterations and detect repeated actions.

```python
# Inside the agent loop, before executing `action`
if action in recent_actions[-3:]:
    print("Repeating same action. Need different strategy.")
    break
```
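A slightly fuller version of that guard, as a sketch: it assumes each action can be reduced to a (tool name, arguments) pair, keeps the last few in a `collections.deque` with `maxlen`, and, unlike a check that stops on any repeat, flags a loop only when one action fills the whole window, so a single retry is still allowed. `LoopGuard` is a hypothetical helper:

```python
import json
from collections import deque


class LoopGuard:
    def __init__(self, window: int = 3):
        self.recent = deque(maxlen=window)  # oldest entries fall off automatically

    def is_stuck(self, tool: str, args: dict) -> bool:
        # sort_keys makes {"a": 1, "b": 2} and {"b": 2, "a": 1} the same key
        key = (tool, json.dumps(args, sort_keys=True))
        self.recent.append(key)
        return len(self.recent) == self.recent.maxlen and len(set(self.recent)) == 1


guard = LoopGuard(window=3)
attempts = [("read_file", {"path": "post.mdx"})] * 3
print([guard.is_stuck(tool, args) for tool, args in attempts])  # [False, False, True]
```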

Agents are expensive. One task can trigger dozens of LLM calls. Complex GPT-4 tasks burn through dollars fast.

Fix: use smaller models first (GPT-3.5 for planning, GPT-4 for execution) or cache responses.

When to Use (and When NOT to Use)

Agents aren't always the answer. My rule of thumb: use an agent when the task needs multiple steps and real tools, not for a single question. Using a calculator agent for "what's 1+1?" is overkill; just ask the LLM. But "analyze our financial statements and create an investment report"? That's agent territory.

The most important lesson from studying agents:

Agent = LLM + Tools + Loop

The LLM is the brain. It thinks and decides. But it can't act. Tools are the hands and feet. They write files, call APIs, calculate. The loop is persistence. Not one-and-done, but try-until-success.

Combine these three and you go from "write me something" to "write it, save it, and deploy it."

With ChatGPT, I thought "this is convenient." With agents, I realized "AI can actually work now." That difference will drive massive change in the coming years.

Agents aren't perfect. They hallucinate, loop infinitely, and cost money. But the direction is right. Agents will get smarter, cheaper, and more reliable.

And how we design and control these agents will become the engineering discipline of the AI era. I'm convinced of that.