Designing a Real-time Chat System: Is WebSocket Enough?

1:1 chat is straightforward, but group chat, read receipts, and offline messages turn it into a completely different beast.

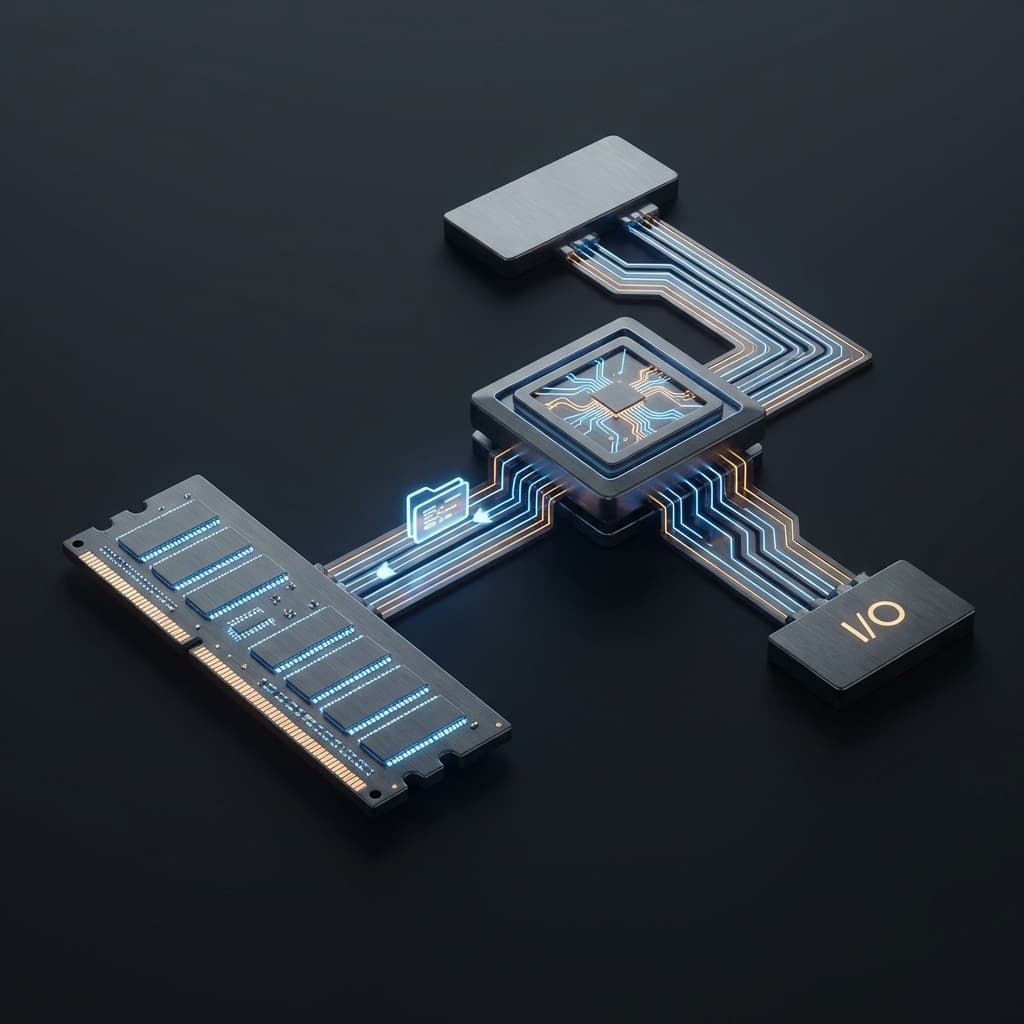

When I first built a chat feature, I completely underestimated it. Install a WebSocket library, open a connection, send() messages, listen to onmessage - what could go wrong? The 1:1 chat demo worked in 10 minutes. Type in one browser, see it instantly in another. "Wow, it works!" I felt accomplished.

Then the PM started adding requirements. "Group chat too, right?" "We need read receipts." "Push notifications when the app is closed?" Each addition turned my code into spaghetti. Eventually I realized: chat isn't about real-time connections, it's about state management and synchronization.

It's like thinking you need to build a faucet, but you actually need to design the entire water supply system. Water coming out is easy. Reliably supplying 100 houses simultaneously, tracking usage, maintaining pressure, detecting leaks - that's the real problem.

At first, I didn't even understand why WebSocket was necessary. Can't we just check for new messages every second via HTTP? Old chat systems actually did this. That's Polling.

    // Polling: keep asking the server
    setInterval(() => {
      fetch('/api/messages/new')
        .then(res => res.json())
        .then(messages => updateUI(messages));
    }, 1000); // Request every second

The problem? You keep requesting even when there are no messages. 1000 users means 1000 requests per second. Most get "no new messages" responses. Like checking your mailbox every second when 99% of the time it's empty.
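To put rough numbers on that, here is a back-of-the-envelope sketch. The helper `pollingLoad` and the traffic figure (each user receiving 0.6 new messages per minute) are assumptions made up for illustration, not measurements.

```javascript
// Hypothetical helper: estimates how many polling requests come back empty.
function pollingLoad({ users, intervalMs, messagesPerUserPerMinute }) {
  const requestsPerSecond = users * (1000 / intervalMs);
  // A poll can be useful at most once per new message, so this is an upper bound.
  const messagesPerSecond = (users * messagesPerUserPerMinute) / 60;
  const usefulPerSecond = Math.min(requestsPerSecond, messagesPerSecond);
  const emptyRatio = 1 - usefulPerSecond / requestsPerSecond;
  return { requestsPerSecond, emptyRatio };
}

const load = pollingLoad({ users: 1000, intervalMs: 1000, messagesPerUserPerMinute: 0.6 });
console.log(load.requestsPerSecond);      // 1000
console.log(load.emptyRatio.toFixed(2));  // "0.99"
```

Stretching the interval to 5 seconds cuts the request rate by 5x, but every message then arrives up to 5 seconds late. Polling forces you to trade latency against load.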

Long Polling is smarter. The server holds the response until there's a new message.

    // Long Polling: wait until there's something new
    function longPoll() {
      fetch('/api/messages/wait') // Server holds the response until a message arrives
        .then(res => res.json())
        .then(messages => {
          updateUI(messages);
          longPoll(); // Wait again
        })
        .catch(() => setTimeout(longPoll, 1000)); // On error or timeout, retry after a short pause
    }

This reduces wasteful requests, but you still pay HTTP overhead: every delivery costs a full request/response cycle with its headers, and the client has to issue a fresh request before it can receive the next message.

WebSocket takes a completely different approach. After a one-time HTTP handshake, the connection stays open and either side can send at any time, much like a raw TCP socket.

    // WebSocket: connect once, use continuously
    const ws = new WebSocket('ws://chat.example.com');

    ws.onopen = () => {
      console.log('Connected');
      // send() throws if it is called before the connection is open
      ws.send(JSON.stringify({ text: 'Hello!' }));
    };

    ws.onmessage = (event) => {
      const message = JSON.parse(event.data);
      updateUI(message);
    };

What about SSE (Server-Sent Events)? That's server-to-client only. For bidirectional needs like chat, SSE isn't enough. Use it for notifications, stock tickers - when you only need server→client.

What clicked for me: Polling is asking, Long Polling is waiting, WebSocket is keeping a phone line open, SSE is listening to a broadcast.

If you send() via WebSocket, does the message arrive? What if the network drops? Server restarts? This is where delivery guarantees come in.

At-most-once: Maximum once. Send and don't confirm. Fast but can be lost.

At-least-once: Minimum once. Resend until you get ACK. Can duplicate.

Exactly-once: Exactly once. Really hard. Usually requires message ID deduplication.

For chat, at-least-once is typical. Duplicates are okay (UI shows one per ID), but loss is unacceptable.

    // Client: resend until ACK received
    const pendingMessages = new Map();
    const RETRY_MS = 5000;

    function sendMessage(text) {
      const msgId = generateId();
      const msg = { type: 'message', id: msgId, text, timestamp: Date.now() };
      pendingMessages.set(msgId, msg);
      transmit(msg);
    }

    function transmit(msg) {
      if (!pendingMessages.has(msg.id)) return; // Already ACKed, stop retrying
      if (ws.readyState === WebSocket.OPEN) {
        ws.send(JSON.stringify(msg)); // Same ID on every retry
      }
      // Check again in 5 seconds; keeps retrying until the ACK arrives
      setTimeout(() => transmit(msg), RETRY_MS);
    }

    ws.onmessage = (event) => {
      const data = JSON.parse(event.data);
      if (data.type === 'ack') {
        pendingMessages.delete(data.msgId); // Confirmed
      } else if (data.type === 'message') {
        // Message received, send ACK to server
        ws.send(JSON.stringify({ type: 'ack', msgId: data.id }));
        displayMessage(data);
      }
    };
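At-least-once only works if the receiving side throws away repeats. Below is a minimal sketch of that filter, assuming every retry carries the same message ID. `createDeduplicator` and its `maxIds` cap are hypothetical names chosen for this example.

```javascript
// Hypothetical receiver-side filter: remembers recent message IDs and
// reports whether a message is new. The cap keeps memory bounded.
function createDeduplicator(maxIds = 1000) {
  const seen = new Set(); // A Set iterates in insertion order, so the first entry is the oldest

  return function isNew(msgId) {
    if (seen.has(msgId)) return false; // Retry of something already shown
    seen.add(msgId);
    if (seen.size > maxIds) {
      const oldest = seen.values().next().value;
      seen.delete(oldest);
    }
    return true;
  };
}

const isNew = createDeduplicator();
const displayed = [];

// The same message delivered twice, e.g. because the first ACK was lost
const incoming = [
  { id: 'm1', text: 'Hello!' },
  { id: 'm1', text: 'Hello!' },
  { id: 'm2', text: 'Hi' },
];
for (const msg of incoming) {
  if (isNew(msg.id)) displayed.push(msg.text);
}
console.log(displayed); // [ 'Hello!', 'Hi' ]
```

The receiver should still ACK a duplicate. Otherwise the sender never learns that the message got through and keeps retrying.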

Understanding this made me realize why message queues like Kafka and RabbitMQ emphasize these concepts. In distributed systems, "delivered" is far more complex than it seems.

1:1 chat is simple. Alice sends to Bob, forward to Bob's WebSocket, done.

But in a 100-person group chat, if Alice sends a message, you need to deliver to 99 people. This is the fan-out problem. Writes get amplified.

    // Naive group chat: loop through all members
    function broadcastToRoom(roomId, message) {
      const room = rooms.get(roomId);
      const members = room.members; // 100 people

      members.forEach(userId => {
        const ws = connections.get(userId);
        if (ws && ws.readyState === WebSocket.OPEN) {
          ws.send(JSON.stringify(message));
        }
      });
    }

100 is fine, but what about 10,000? One message means 10,000 send() calls, and a busy room produces many messages per second. A single server can't keep up.

I learned two optimizations here.

1. Pub/Sub Pattern with Redis

With multiple WebSocket servers, users connect to different servers. If Alice is on ServerA and Bob is on ServerB, the servers need to relay messages to each other. Use Redis Pub/Sub.

    // ServerA: Alice sends message
    async function onMessageReceived(userId, roomId, message) {
      // Save to DB before announcing the message
      await db.saveMessage(message);
      // Publish to Redis (all servers subscribed)
      await redis.publish(`room:${roomId}`, JSON.stringify(message));
    }

    // All servers: subscribe to room channel.
    // `subscriber` is a second Redis connection: once a connection subscribes,
    // it is normally limited to subscription commands and can't publish.
    subscriber.subscribe(`room:${roomId}`);

    subscriber.on('message', (channel, data) => {
      const message = JSON.parse(data);
      const roomId = channel.slice('room:'.length);
      // Send only to members connected to this server
      broadcastToLocalConnections(roomId, message);
    });

Now even with 10 servers, Alice's message reaches Bob. It works like regional radio stations that receive a signal from a central transmitter and rebroadcast it to their own areas.
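The snippet above calls broadcastToLocalConnections without showing it. Here is one way it could look, assuming each server keeps its own index of which sockets belong to which room. `localRooms`, `joinLocalRoom`, `leaveLocalRoom`, and the fake sockets are illustrative names, not part of any library.

```javascript
// Hypothetical per-server index: roomId -> Set of sockets connected to THIS server.
const localRooms = new Map();

function joinLocalRoom(roomId, socket) {
  if (!localRooms.has(roomId)) localRooms.set(roomId, new Set());
  localRooms.get(roomId).add(socket);
}

function leaveLocalRoom(roomId, socket) {
  const sockets = localRooms.get(roomId);
  if (!sockets) return;
  sockets.delete(socket);
  if (sockets.size === 0) localRooms.delete(roomId); // Nobody left here: drop the entry
}

function broadcastToLocalConnections(roomId, message) {
  const sockets = localRooms.get(roomId);
  if (!sockets) return 0; // No local members, nothing to do
  const payload = JSON.stringify(message); // Serialize once, not once per recipient
  let sent = 0;
  for (const socket of sockets) {
    if (socket.readyState === 1) { // 1 is the OPEN state of a WebSocket
      socket.send(payload);
      sent += 1;
    }
  }
  return sent;
}

// Stand-in sockets so the sketch runs without a network
const makeFakeSocket = () => ({ readyState: 1, inbox: [], send(data) { this.inbox.push(data); } });
const bob = makeFakeSocket();
const carol = makeFakeSocket();
joinLocalRoom('42', bob);
joinLocalRoom('42', carol);
carol.readyState = 3; // 3 is CLOSED

console.log(broadcastToLocalConnections('42', { text: 'Hello!' })); // 1
console.log(bob.inbox); // [ '{"text":"Hello!"}' ]
```

When the last local member leaves a room, the server can also unsubscribe from that room's channel so it stops receiving messages it has nobody to deliver to.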

2. Send Only to Online Users

A 10,000-person group but only 50 online right now? Send to just the 50. The other 9,950 can fetch history when they open the app.

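    // Track online members
    const onlineUsers = new Set();

    // Server side (ws library): each new socket arrives via the 'connection' event
    wss.on('connection', (ws, req) => {
      const userId = getUserId(req); // Hypothetical helper: resolve the user from the auth token
      onlineUsers.add(userId);
      connections.set(userId, ws);

      ws.on('close', () => {
        onlineUsers.delete(userId);
        connections.delete(userId);
      });
    });

    // Filter to online only
    function broadcastToRoom(roomId, message) {
      const members = rooms.get(roomId).members;
      const payload = JSON.stringify(message);

      members
        .filter(userId => onlineUsers.has(userId))
        .forEach(userId => {
          const ws = connections.get(userId);
          if (ws && ws.readyState === WebSocket.OPEN) ws.send(payload);
        });
    }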

This drastically reduced write amplification. Tracking online/offline was the key.

How do you know "Bob is online"? Does an open WebSocket mean online? What if the network dropped and the server doesn't know yet? Or the app went to the background but the connection is still alive?

Use a heartbeat (ping/pong): periodically send a signal and check that the other side answers.

    // Server: ping every 30s; treat the connection as dead if the last pong is older than 45s
    const PING_INTERVAL = 30000;
    const PONG_TIMEOUT = 45000;
    const heartbeats = new Map();

    // Inside the connection handler: count the connect itself as the first sign of life,
    // otherwise a new connection would be dropped on the first check
    heartbeats.set(userId, Date.now());
    ws.on('pong', () => {
      heartbeats.set(userId, Date.now());
    });

    setInterval(() => {
      connections.forEach((ws, userId) => {
        if (Date.now() - (heartbeats.get(userId) ?? 0) > PONG_TIMEOUT) {
          // Timeout, connection dead
          ws.terminate();
          connections.delete(userId);
          heartbeats.delete(userId);
          onlineUsers.delete(userId);
          broadcastPresence(userId, 'offline');
        } else {
          ws.ping();
        }
      });
    }, PING_INTERVAL);

    // Client: no code needed. Browsers and the ws library answer ping frames with a pong automatically.

Now "Bob is online" is far more trustworthy. Features like read receipts and typing indicators build on top of this.
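Typing indicators have the same weakness as presence: the "stopped typing" event may never arrive if the client drops. One possible approach is to let typing state expire on its own. `TypingTracker` and its 5-second TTL are assumptions for this sketch, and timestamps are passed in explicitly to make the behavior easy to follow.

```javascript
// Hypothetical tracker: a user counts as "typing" until ttlMs passes
// without another typing event from them.
class TypingTracker {
  constructor(ttlMs = 5000) {
    this.ttlMs = ttlMs;
    this.rooms = new Map(); // roomId -> Map(userId -> time of last typing event)
  }

  // Call when a typing event arrives from a client
  markTyping(roomId, userId, now = Date.now()) {
    if (!this.rooms.has(roomId)) this.rooms.set(roomId, new Map());
    this.rooms.get(roomId).set(userId, now);
  }

  // Call when the user sends the message or disconnects
  clear(roomId, userId) {
    const room = this.rooms.get(roomId);
    if (room) room.delete(userId);
  }

  // Users currently typing in a room; stale entries are dropped along the way
  whoIsTyping(roomId, now = Date.now()) {
    const room = this.rooms.get(roomId);
    if (!room) return [];
    const typing = [];
    for (const [userId, at] of room) {
      if (now - at > this.ttlMs) room.delete(userId); // Client went quiet or dropped
      else typing.push(userId);
    }
    return typing;
  }
}

const tracker = new TypingTracker(5000);
tracker.markTyping('room1', 'alice', 0);
tracker.markTyping('room1', 'bob', 4000);
console.log(tracker.whoIsTyping('room1', 4500)); // [ 'alice', 'bob' ]
console.log(tracker.whoIsTyping('room1', 6000)); // [ 'bob' ]
```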

Read receipts send a "Bob read the message" event.

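    // Client: send a read event when the message is fully visible on screen
    const observer = new IntersectionObserver((entries) => {
      entries.forEach(entry => {
        if (!entry.isIntersecting) return;
        ws.send(JSON.stringify({
          type: 'read',
          messageId: entry.target.dataset.messageId,
          roomId: entry.target.dataset.roomId
        }));
        observer.unobserve(entry.target); // Report each message only once
      });
    }, { threshold: 1.0 }); // Fire when 100% visible

    // Assumes each element carries data-message-id and data-room-id attributes
    observer.observe(messageElement);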

    // Server: broadcast read event to room members
    function onReadEvent(userId, roomId, messageId) {
      db.markAsRead(userId, messageId);
      redis.publish(`room:${roomId}`, JSON.stringify({
        type: 'read_receipt',
        userId,
        messageId,
        timestamp: Date.now()
      }));
    }

WhatsApp's check marks follow the same idea. Message sent → 1 check (server received) → 2 checks (delivered to recipient) → blue checks (read by recipient).
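Because these events travel over a network, they can arrive out of order: a read receipt may show up before the delivery confirmation. A small sketch of one way to handle that is to let status only move forward. The names `STATUS_ORDER`, `statuses`, and `applyStatus` are made up for this example.

```javascript
// Status only ever moves forward: sent -> delivered -> read.
const STATUS_ORDER = ['sent', 'delivered', 'read'];

// Hypothetical store: messageId -> current status
const statuses = new Map();

function applyStatus(messageId, incoming) {
  const incomingRank = STATUS_ORDER.indexOf(incoming);
  if (incomingRank === -1) throw new Error(`Unknown status: ${incoming}`);
  const currentRank = STATUS_ORDER.indexOf(statuses.get(messageId)); // -1 when nothing is stored yet
  if (incomingRank > currentRank) statuses.set(messageId, incoming);
  return statuses.get(messageId);
}

applyStatus('m1', 'sent');
applyStatus('m1', 'read');                    // Read receipt arrives first
console.log(applyStatus('m1', 'delivered'));  // "read" (the late event is ignored)
```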

Serving every read straight from the DB is slow. Keeping messages only in memory means losing them on a server restart. Ultimately, a hybrid was the answer.

Recent messages go in a cache (Redis), old ones in the DB (PostgreSQL).

    // Message storage strategy
    async function saveMessage(roomId, message) {
      // 1. Persist in DB first, so a crash can't lose a message that was already shown
      await db.messages.insert(message);

      // 2. Cache recent 100 in Redis (fast lookups)
      await redis.lpush(`room:${roomId}:recent`, JSON.stringify(message));
      await redis.ltrim(`room:${roomId}:recent`, 0, 99); // Keep only 100

      // 3. Real-time send to online users
      broadcastToRoom(roomId, message);
    }

    // Message retrieval
    async function getMessages(roomId, limit = 50) {
      // 1. Check cache first (LPUSH keeps the newest message at index 0)
      const cached = await redis.lrange(`room:${roomId}:recent`, 0, limit - 1);
      if (cached.length >= limit) {
        return cached.map(item => JSON.parse(item));
      }

      // 2. Fetch from DB if insufficient
      const messages = await db.messages
        .where({ roomId })
        .orderBy('timestamp', 'desc')
        .limit(limit);
      return messages;
    }

This served 90%+ of requests from Redis, and DB load dropped significantly. It's like keeping frequently used tools on the workbench and rarely used ones in storage.
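If the Redis list commands are unfamiliar, this in-memory stand-in mirrors what LPUSH, LTRIM, and LRANGE do to the recent-messages list. `RecentList` is a teaching aid invented for this example, not a replacement for Redis.

```javascript
// In-memory stand-in for the Redis list, showing what LPUSH + LTRIM + LRANGE do.
class RecentList {
  constructor(capacity = 100) {
    this.capacity = capacity;
    this.items = []; // Index 0 is always the newest entry
  }

  push(message) {
    this.items.unshift(message); // LPUSH: insert at the head
    if (this.items.length > this.capacity) {
      this.items.length = this.capacity; // LTRIM 0 capacity-1: drop the oldest
    }
  }

  range(limit) {
    return this.items.slice(0, limit); // LRANGE 0 limit-1
  }
}

const recent = new RecentList(3);
for (const id of [1, 2, 3, 4, 5]) recent.push({ id });

console.log(recent.range(2).map(m => m.id));  // [ 5, 4 ]
console.log(recent.range(10).map(m => m.id)); // [ 5, 4, 3 ]
```

The second call asks for 10 messages but gets only 3 back. That shortfall is the signal to fall through to the database.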

As users grow, you spin up multiple WebSocket servers. This is where sticky sessions matter.

If Alice connected to ServerA, disconnected, then reconnects, she shouldn't land on ServerB. Why? ServerA's memory holds Alice's state. The load balancer must route the same user to the same server.

    # nginx IP-based sticky session
    upstream websocket_servers {
      ip_hash; # Same IP to same server
      server ws1.example.com:8080;
      server ws2.example.com:8080;
      server ws3.example.com:8080;
    }

Or share state via Redis. Any server can read state from Redis.

    // Store WebSocket connection info in Redis
    wss.on('connection', (ws, req) => {
      const userId = getUserId(req); // Hypothetical helper: resolve the user from the auth token
      redis.hset('ws:connections', userId, currentServerInstanceId);
      redis.hset('ws:sessions', userId, JSON.stringify(sessionData));
    });

    // Check which server when delivering message
    async function sendToUser(userId, message) {
      const serverId = await redis.hget('ws:connections', userId);
      if (!serverId) return; // Not connected anywhere: handle as offline

      if (serverId === currentServerInstanceId) {
        // Connected to this server, send directly
        const ws = connections.get(userId);
        if (ws) ws.send(JSON.stringify(message));
      } else {
        // Connected to other server, relay via Redis Pub/Sub
        redis.publish(`server:${serverId}`, JSON.stringify({
          userId,
          message
        }));
      }
    }

Now users receive messages regardless of which server they're connected to.

What if Bob closed the app? WebSocket is disconnected, but messages must arrive. Push Notification is needed.

    // Check online status when delivering message
    async function deliverMessage(userId, message) {
      if (onlineUsers.has(userId)) {
        // Online: send via WebSocket
        const ws = connections.get(userId);
        ws.send(JSON.stringify(message));
      } else {
        // Offline: send push notification
        const deviceToken = await db.getDeviceToken(userId);
        await sendPushNotification({
          token: deviceToken,
          title: message.senderName,
          body: message.text,
          data: {
            roomId: message.roomId,
            messageId: message.id
          }
        });
        // Increment unread count in DB
        await db.incrementUnread(userId, message.roomId);
      }
    }

When the app reopens, check the unread count and fetch history.

    // On app launch
    async function onAppLaunch() {
      // Reconnect first so nothing sent during the history fetch is missed;
      // deduplicate any overlap between live and fetched messages by ID
      connectWebSocket();

      const unreadRooms = await db.getUnreadRooms(userId);
      for (const room of unreadRooms) {
        // Fetch messages after last read
        // (assumes a getMessages variant that accepts an `after` cursor)
        const newMessages = await getMessages(
          room.id,
          { after: room.lastReadMessageId }
        );
        displayMessages(newMessages);
      }
    }

Apps like Slack and Discord behave much the same way: instant delivery if you're online, push notification plus history sync if you're offline.
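History sync and the live socket can overlap: a message may show up in the fetched history and also arrive over the freshly opened WebSocket. A minimal sketch of merging the two, assuming every message has a unique `id` and a numeric `timestamp`. `mergeMessages` is a hypothetical helper.

```javascript
// Hypothetical merge step: combine fetched history with live messages,
// drop duplicates by ID, and order by timestamp.
function mergeMessages(fetched, live) {
  const byId = new Map();
  for (const msg of [...fetched, ...live]) {
    byId.set(msg.id, msg); // A later copy of the same ID overwrites the earlier one
  }
  return [...byId.values()].sort((a, b) => a.timestamp - b.timestamp);
}

const fetched = [
  { id: 'm1', text: 'lunch?', timestamp: 100 },
  { id: 'm2', text: 'sure', timestamp: 200 },
];
const live = [
  { id: 'm2', text: 'sure', timestamp: 200 }, // Also arrived over the fresh WebSocket
  { id: 'm3', text: 'noon', timestamp: 300 },
];

console.log(mergeMessages(fetched, live).map(m => m.id)); // [ 'm1', 'm2', 'm3' ]
```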

Chat systems aren't just about WebSocket connections. Here's the summary:

1. Connection Method: Evolved from Polling → Long Polling → WebSocket. For bidirectional real-time, WebSocket is optimal.

2. Delivery Guarantees: Go with at-least-once, but client must deduplicate. ACK and retry logic are essential.

3. Group Chat: Solve fan-out with Redis Pub/Sub, filter to online users only to reduce write amplification.

4. Online Status: Verify with heartbeat (ping/pong), build read receipts and typing indicators on top.

5. Message Storage: Recent in Redis cache, old in DB. Handle 90%+ requests from cache.

6. Scaling: With multiple servers, use sticky session or Redis state sharing. Pub/Sub for inter-server communication.

7. Offline: When WebSocket drops, send push notification, sync history on app restart.

The biggest lesson: Real-time systems must consider not just "people currently connected" but also "people who just left or will soon join." State boundaries are fuzzy, and networks can drop at any time. Creating a reliable experience on top of this uncertainty is the essence of system design.

Making water come out of a faucet is easy. Building a system that reliably supplies water to an entire city 24/7 is a completely different problem. Chat was exactly the same.