Notification System Design: Sending Alerts to Millions of Users

Sending one notification is easy. As notifications scale up, preventing duplicates, respecting preferences, and handling retries becomes a completely different engineering problem.

Sending one notification is easy. As notifications scale up, preventing duplicates, respecting preferences, and handling retries becomes a completely different engineering problem.

Why is the CPU fast but the computer slow? I explore the revolutionary idea of the 80-year-old Von Neumann architecture and the fatal bottleneck it left behind.

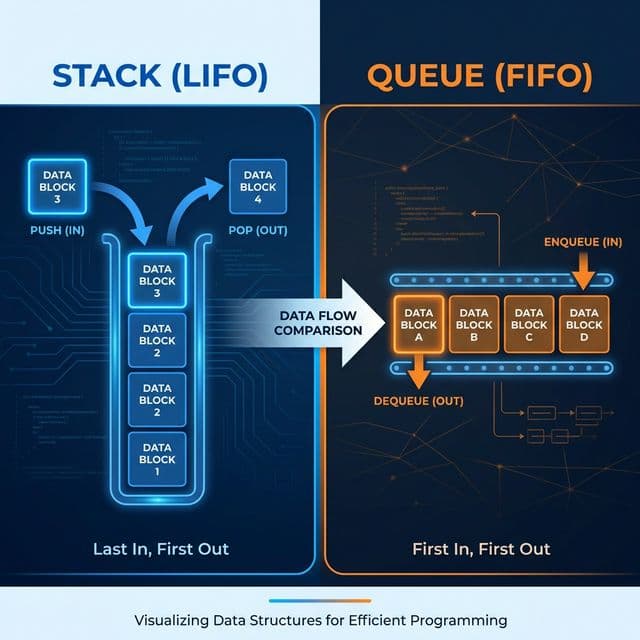

Pringles can (Stack) vs Restaurant line (Queue). The most basic data structures, but without them, you can't understand recursion or message queues.

ChatGPT answers questions. AI Agents plan, use tools, and complete tasks autonomously. Understanding this difference changes how you build with AI.

Integrating a payment API is just the beginning. Idempotency, refund flows, and double-charge prevention make payment systems genuinely hard.

Implementing a notification feature seems trivial at first. User triggers an event → send notification to another user. Done. Maybe 10 lines of code.

But once you open that box, it's a completely different world. Sending one notification to one person is easy. But as notifications scale up, the complexity compounds: some users want push, others want email, some notifications need to be immediate, others should be batched, failures need retries, duplicates must be prevented. In large-scale notification systems, the list is endless.

A notification system isn't just about sending messages. It's about designing a massive logistics operation.

The first question I faced was "how do we deliver notifications?" because every user has different preferences.

Push Notifications: The most immediate and powerful channel for mobile apps. FCM (Firebase Cloud Messaging) for Android, APNs (Apple Push Notification service) for iOS. Timing is everything—delay kills the value.

Email: Can carry long content and be reviewed later. Using services like SendGrid or AWS SES. Better suited for less urgent notifications.

SMS: Highest delivery rate but most expensive. Using services like Twilio. Reserved for truly critical notifications (payment confirmations, security alerts).

In-app Notifications: Only visible inside the app. Accumulates in a notification center until the user opens the app. The least intrusive channel.

The key insight: the same event needs different channels for different users. When someone comments on my post, one user wants push, another wants email, and another might not want any notification at all.

interface NotificationChannel {

type: 'push' | 'email' | 'sms' | 'in_app';

enabled: boolean;

provider?: string; // 'fcm', 'apns', 'sendgrid', 'twilio'

}

interface UserPreferences {

userId: string;

channels: {

comment: NotificationChannel[];

like: NotificationChannel[];

follow: NotificationChannel[];

marketing: NotificationChannel[];

};

quietHours?: {

start: string; // "22:00"

end: string; // "08:00"

timezone: string;

};

}
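
To make the preference model concrete, here is a minimal Python sketch of how a worker might decide which channels to use for one event. Everything in it is an assumption for illustration: the `Preferences` and `QuietHours` classes, the `resolve_channels` helper, and the rule that only push and SMS are held back during quiet hours. It also uses a fixed UTC offset instead of the IANA `timezone` string from the interface above, purely to stay dependency-free.

```python
from dataclasses import dataclass, field
from datetime import datetime, time, timedelta, timezone
from typing import Dict, List, Optional


@dataclass
class QuietHours:
    start: time              # local time, e.g. time(22, 0)
    end: time                # local time, e.g. time(8, 0)
    utc_offset_minutes: int  # assumption: fixed offset instead of an IANA zone name


@dataclass
class Preferences:
    # event type -> enabled channel names, e.g. {"comment": ["push", "email"]}
    channels: Dict[str, List[str]] = field(default_factory=dict)
    quiet_hours: Optional[QuietHours] = None


def in_quiet_hours(quiet: QuietHours, now_utc: datetime) -> bool:
    """True if now_utc falls inside the user's local quiet window."""
    local_zone = timezone(timedelta(minutes=quiet.utc_offset_minutes))
    local = now_utc.astimezone(local_zone).time()
    if quiet.start <= quiet.end:
        return quiet.start <= local < quiet.end
    # The window wraps past midnight (22:00 -> 08:00)
    return local >= quiet.start or local < quiet.end


def resolve_channels(prefs: Preferences, event_type: str, now_utc: datetime) -> List[str]:
    """Channels to use right now; push and SMS are held during quiet hours."""
    enabled = prefs.channels.get(event_type, [])
    if prefs.quiet_hours and in_quiet_hours(prefs.quiet_hours, now_utc):
        # Assumption: email and in-app are quiet enough to send at any time
        return [c for c in enabled if c in ("email", "in_app")]
    return list(enabled)
```

The wrap-around branch is the part that is easy to get wrong: a 22:00 to 08:00 window has `start > end`, so a plain range check would never match.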

The second wall is "how do you send notifications at scale?"

The naive approach is simple: event happens → fetch target user list → loop through and send notifications. This works fine with a handful of users.

But as follower counts grow into the tens of thousands or more, the loop becomes a bottleneck—the server freezes while iterating, and if one notification fails (network error, API limit), everything blocks.

The answer was a message queue. Think of it like the postal system. People who want to send letters (Producers) drop them in mailboxes, then postal workers (Consumers) each take letters and deliver them independently.

[Event Occurs]

↓

[Producer: Create Notification]

↓

[Message Queue: RabbitMQ/Kafka/SQS]

↓ ↓ ↓

[Consumer 1][Consumer 2][Consumer 3] ... [Consumer N]

↓ ↓ ↓

[FCM] [SendGrid] [Twilio]

Producers just drop messages into the queue and move on. Consumers process messages independently. If one fails, others continue working. Load increases? Spin up more consumers. Perfect distributed processing.

# Producer (FastAPI example)

from datetime import datetime

from celery import Celery

from fastapi import FastAPI

app = FastAPI()

celery_app = Celery('notifications', broker='redis://localhost:6379/0')

@app.post("/api/post/{post_id}/like")

async def like_post(post_id: str, user_id: str):

# Handle like

post = await db.posts.find_one({"_id": post_id})

author_id = post["author_id"]

# Add message to notification queue (async)

celery_app.send_task('send_notification', args=[{

'type': 'like',

'recipient_id': author_id,

'actor_id': user_id,

'post_id': post_id,

'timestamp': datetime.utcnow().isoformat()

}])

return {"status": "success"}

# Consumer (Celery Worker)

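# The explicit name must match the string the producer passes to send_task()

@celery_app.task(bind=True, max_retries=3, name='send_notification')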

def send_notification(self, event):

try:

recipient_id = event['recipient_id']

prefs = get_user_preferences(recipient_id)

# Generate message from template

message = render_template('like_notification', event)

# Send via appropriate channels based on user settings

for channel in prefs.get_enabled_channels(event['type']):

if channel == 'push':

send_push(recipient_id, message)

elif channel == 'email':

send_email(recipient_id, message)

except Exception as exc:

# Retry on failure (exponential backoff)

raise self.retry(exc=exc, countdown=2 ** self.request.retries)
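
One side effect of retrying the whole task is worth spelling out: if push succeeds and email then fails, the retry sends the push a second time. A possible guard is a per-channel deduplication key, sketched below. The `InMemoryDedupStore`, `dedup_key`, and `deliver_once` names are hypothetical, and the in-memory set stands in for a shared store (for example a Redis key set with NX and an expiry) that a real deployment would need.

```python
import hashlib


class InMemoryDedupStore:
    """Stand-in for a shared store; a set is enough to show the idea."""

    def __init__(self):
        self._seen = set()

    def claim(self, key):
        """Return True only for the first caller to claim this key."""
        if key in self._seen:
            return False
        self._seen.add(key)
        return True

    def release(self, key):
        """Give the key back so a later retry may send again."""
        self._seen.discard(key)


def dedup_key(event, channel):
    """Stable key for one (event, channel) pair."""
    parts = [event["type"], event["recipient_id"], event["actor_id"],
             event["post_id"], channel]
    return hashlib.sha256("|".join(parts).encode()).hexdigest()


def deliver_once(event, channels, senders, store):
    """Send on each channel at most once across retries.

    Returns the channels that were actually sent in this attempt.
    """
    sent = []
    for channel in channels:
        key = dedup_key(event, channel)
        if not store.claim(key):
            continue  # an earlier attempt already delivered this channel
        try:
            senders[channel](event)
        except Exception:
            store.release(key)  # let the retry try this channel again
            raise
        sent.append(channel)
    return sent
```

Claiming the key before sending means a crash between claim and send loses that notification, so this sketch leans toward "at most once". Whether that trade-off is acceptable depends on the notification type.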

The third realization: "same event, different message format per channel."

Push notifications need to be short and punchy: "John Doe liked your post". Emails can be long and detailed: HTML templates with images and multiple links. SMS needs to be ultra-brief: "New comment. Check: app.com/p/123".

Initially, I hardcoded messages for each notification, but changing copy meant modifying code. Multi-language support was a nightmare.

Introducing a template system changed everything. Templates are managed in DB or files, code just fills in variables.

interface NotificationTemplate {

id: string;

type: 'like' | 'comment' | 'follow' | 'mention';

channel: 'push' | 'email' | 'sms' | 'in_app';

locale: string;

subject?: string; // For email

title: string; // For push/in-app

body: string;

htmlBody?: string; // HTML for email

variables: string[]; // ['actor_name', 'post_title']

}

// Template stored in DB

const templates = {

like_push_en: {

title: "New Like",

body: "{{actor_name}} liked your {{post_title}}",

variables: ['actor_name', 'post_title']

},

like_email_en: {

subject: "{{actor_name}} liked your post",

htmlBody: `

<h2>Hello!</h2>

<p><strong>{{actor_name}}</strong> liked

your post <a href="{{post_url}}">{{post_title}}</a>.</p>

`,

variables: ['actor_name', 'post_title', 'post_url']

}

}

function renderTemplate(templateId: string, variables: Record<string, string>) {

const template = getTemplate(templateId);

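// Email templates above define only htmlBody, so fall back to it

let rendered = template.body ?? template.htmlBody ?? '';

for (const [key, value] of Object.entries(variables)) {

// split/join replaces every literal {{key}} without regex or "$" escaping pitfalls

rendered = rendered.split(`{{${key}}}`).join(value);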

}

return rendered;

}
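
Since multi-language support was the original pain point, it may help to see template lookup and rendering together. The sketch below is an illustration only: the `TEMPLATES` table keyed by (type, channel, locale), the locale fallback order, and the choice to raise on a missing variable are all assumptions, not the system described above.

```python
import re

# Hypothetical in-memory template table keyed by (type, channel, locale)
TEMPLATES = {
    ("like", "push", "en"): {
        "title": "New Like",
        "body": "{{actor_name}} liked your {{post_title}}",
    },
    ("like", "sms", "en"): {
        "body": "{{actor_name}} liked your post. Check: {{post_url}}",
    },
}

PLACEHOLDER = re.compile(r"\{\{(\w+)\}\}")


def find_template(event_type, channel, locale, default_locale="en"):
    """Try the exact locale, then its language part, then the default."""
    for candidate in (locale, locale.split("-")[0], default_locale):
        template = TEMPLATES.get((event_type, channel, candidate))
        if template is not None:
            return template
    raise KeyError(f"no template for {event_type}/{channel}/{locale}")


def render(text, variables):
    """Fill {{name}} placeholders; fail loudly if a variable is missing."""
    def substitute(match):
        name = match.group(1)
        if name not in variables:
            raise KeyError(f"missing template variable: {name}")
        return str(variables[name])
    return PLACEHOLDER.sub(substitute, text)


def render_template(event_type, channel, locale, variables):
    """Render every text field of the matching template."""
    template = find_template(event_type, channel, locale)
    return {name: render(text, variables) for name, text in template.items()}
```

Failing on a missing variable is a deliberate choice in this sketch: a notification that reads "{{actor_name}} liked your post" is arguably worse than one that is never sent.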

The fourth trap: "too many notifications and users turn them off."

In an active community, dozens of notifications can arrive daily. If a post gets 50 comments, send 50 pushes? Users will delete the app.

Batching was the answer. Collect similar notifications over a time window and send them together.

interface NotificationBatch {

userId: string;

type: 'comment' | 'like' | 'follow';

events: Array<{

actorId: string;

timestamp: Date;

metadata: any;

}>;

firstEventTime: Date;

shouldSendAt: Date; // First event + 5 minutes

}

// Batching logic

async function handleNewEvent(event) {

const key = `batch:${event.userId}:${event.type}`;

// Redis stores strings, so the batch is serialized as JSON.

// Note: this get-then-set is not atomic; concurrent events can overwrite each other.

const raw = await redis.get(key);

if (raw) {

// Add to existing batch

const existingBatch = JSON.parse(raw);

existingBatch.events.push(event);

await redis.set(key, JSON.stringify(existingBatch));

} else {

// Create new batch

const batch = {

userId: event.userId,

type: event.type,

events: [event],

firstEventTime: new Date(),

shouldSendAt: new Date(Date.now() + 5 * 60 * 1000) // 5 minutes later

};

await redis.set(key, JSON.stringify(batch));

// Schedule send after 5 minutes

scheduleNotification(batch.shouldSendAt, batch);

}

}

// Batch message example

// Individual: "John Doe commented on your post"

// Batched: "John Doe and 12 others commented on your post"
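
The batched message in the comment above can be produced by a small formatting helper. This is a sketch under assumptions: `summarize_batch` is a hypothetical name, and counting each actor only once (so three comments from the same person do not become "and 2 others") is a design choice, not something the original system is known to do.

```python
def summarize_batch(actor_names, action, target="your post"):
    """Collapse a batch of events into one human-readable line."""
    # Deduplicate actors while keeping first-seen order
    unique = list(dict.fromkeys(actor_names))
    if not unique:
        raise ValueError("cannot summarize an empty batch")

    first = unique[0]
    others = len(unique) - 1
    if others == 0:
        return f"{first} {action} {target}"
    if others == 1:
        return f"{first} and {unique[1]} {action} {target}"
    return f"{first} and {others} others {action} {target}"
```

Called with thirteen distinct names starting with "John Doe" and the action "commented on", it returns "John Doe and 12 others commented on your post".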

Rate limiting was equally important. Even crucial notifications become spam if you send 10 per minute.

from datetime import datetime, timedelta

import redis

redis_client = redis.Redis()

def check_rate_limit(user_id: str, channel: str, limit: int, window_seconds: int):

"""

Fixed-window counter (simpler than a token bucket: the count resets when the key expires)

Example: Max 20 push notifications per hour

"""

key = f"ratelimit:{user_id}:{channel}"

# INCR is atomic and creates the key with value 1 if it does not exist,

# so concurrent workers cannot both slip past a separate GET check

current = redis_client.incr(key)

if current == 1:

# First request in this window: start the expiry clock

redis_client.expire(key, window_seconds)

return current <= limit

# Usage

if check_rate_limit(user_id, 'push', limit=20, window_seconds=3600):

send_push_notification(user_id, message)

else:

# Queue for batch send later

queue_for_batch(user_id, message)

The fifth lesson: "not all notifications are equal."

A payment failure alert and someone viewing your profile can't be the same priority. Security warnings need immediate delivery, but marketing notifications can wait days.

We introduced a priority system.

enum NotificationPriority {

CRITICAL = 0, // Security, payment failure - immediate send, aggressive retry

HIGH = 1, // Mentions, direct messages - send within minutes

NORMAL = 2, // Likes, follows - batching allowed

LOW = 3 // Marketing, recommendations - batch + never send during quiet hours

}

interface NotificationJob {

id: string;

userId: string;

type: string;

priority: NotificationPriority;

payload: any;

createdAt: Date;

maxRetries: number;

retryCount: number;

}

// Different queues by priority

const queues = {

critical: new Queue('notifications:critical', {

defaultJobOptions: { attempts: 5, backoff: { type: 'exponential', delay: 1000 } }

}),

high: new Queue('notifications:high', {

defaultJobOptions: { attempts: 3, backoff: { type: 'exponential', delay: 2000 } }

}),

normal: new Queue('notifications:normal', {

defaultJobOptions: { attempts: 2, backoff: { type: 'fixed', delay: 60000 } }

}),

low: new Queue('notifications:low', {

defaultJobOptions: { attempts: 1 } // Give up if failed

})

};
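
To show the ordering that the priority levels are meant to guarantee, here is a small in-process Python sketch. It is not the separate-queue setup above: the `PriorityDispatcher` class and the `TYPE_PRIORITY` mapping (including type names such as `payment_failed`) are hypothetical, and a single heap stands in for the four queues.

```python
import heapq
import itertools
from enum import IntEnum


class Priority(IntEnum):
    CRITICAL = 0
    HIGH = 1
    NORMAL = 2
    LOW = 3


# Hypothetical mapping from notification type to priority
TYPE_PRIORITY = {
    "security_alert": Priority.CRITICAL,
    "payment_failed": Priority.CRITICAL,
    "mention": Priority.HIGH,
    "direct_message": Priority.HIGH,
    "like": Priority.NORMAL,
    "follow": Priority.NORMAL,
    "marketing": Priority.LOW,
}


class PriorityDispatcher:
    """Pops the most urgent notification first, FIFO within a priority."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker keeps insertion order

    def enqueue(self, notification_type, payload):
        priority = TYPE_PRIORITY.get(notification_type, Priority.NORMAL)
        entry = (priority, next(self._counter), notification_type, payload)
        heapq.heappush(self._heap, entry)
        return priority

    def dequeue(self):
        """Return (type, payload), or None when nothing is waiting."""
        if not self._heap:
            return None
        _, _, notification_type, payload = heapq.heappop(self._heap)
        return notification_type, payload
```

The counter in each heap entry matters: without it, two entries with the same priority would be compared by payload, which fails for dictionaries and would not preserve arrival order.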

The sixth reality: "external services fail all the time."

FCM throws 503 errors, SendGrid APIs time out, networks disconnect. You can't give up after one failure.

Retry with exponential backoff. Increase wait time after each failure, giving services time to recover.

async function sendWithRetry(

sendFn: () => Promise<void>,

maxRetries: number = 3,

baseDelay: number = 1000

) {

for (let attempt = 0; attempt < maxRetries; attempt++) {

try {

await sendFn();

return { success: true };

} catch (error) {

const isLastAttempt = attempt === maxRetries - 1;

// Non-retriable errors (invalid token, user deleted app)

if (isNonRetriableError(error)) {

await handlePermanentFailure(error);

return { success: false, reason: 'non_retriable' };

}

if (isLastAttempt) {

await logFailure(error);

return { success: false, reason: 'max_retries' };

}

// Exponential backoff: 1s, 2s, 4s, 8s...

const delay = baseDelay * Math.pow(2, attempt);

await sleep(delay);

}

}

}

function isNonRetriableError(error: any): boolean {

// FCM: Invalid registration token

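if (error.code === 'messaging/invalid-registration-token') return true;

// FCM: token no longer registered (e.g. the app was uninstalled)

if (error.code === 'messaging/registration-token-not-registered') return true;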

// SendGrid: Invalid email

if (error.code === 400 && error.message.includes('invalid email')) return true;

// Twilio: Invalid phone number

if (error.code === 21211) return true;

return false;

}
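
One refinement that is not part of the code above but is commonly paired with exponential backoff is random jitter. When a provider returns 503 to many consumers at once, plain backoff makes them all retry at the same 1s, 2s, 4s marks. The Python sketch below uses the "full jitter" variant, where each delay is drawn uniformly between zero and the exponential ceiling. The function names, the 60-second cap, and the injectable `rng` parameter are assumptions for illustration.

```python
import random


def backoff_delay(attempt, base=1.0, cap=60.0, rng=random):
    """Delay in seconds before the retry that follows attempt number `attempt`.

    attempt is zero-based, so ceilings grow as base, 2*base, 4*base, ...
    up to cap. The actual delay is uniform in [0, ceiling].
    """
    ceiling = min(cap, base * (2 ** attempt))
    return rng.uniform(0, ceiling)


def retry_schedule(max_attempts, base=1.0, cap=60.0, rng=random):
    """Delays between attempts; no delay follows the final attempt."""
    return [backoff_delay(a, base, cap, rng) for a in range(max_attempts - 1)]
```

Passing a seeded `random.Random` instance as `rng` makes the schedule reproducible in tests, while the default uses the module-level generator.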

The final realization: "sending notifications isn't the end."

Did it actually reach the user? Did they read it? Click it? Which notifications are most effective? No data means no answers.

Metrics to track:

interface NotificationMetrics {

notificationId: string;

userId: string;

type: string;

channel: string;

priority: NotificationPriority;

// Delivery stages

queuedAt: Date;

sentAt?: Date;

deliveredAt?: Date; // Confirmed by external service

// User actions

viewedAt?: Date;

clickedAt?: Date;

actionTaken?: string;

// Result

status: 'queued' | 'sent' | 'delivered' | 'failed' | 'bounced';

failureReason?: string;

// Metadata

deviceType?: string;

osVersion?: string;

appVersion?: string;

}

// Analytics query example

async function getNotificationStats(type: string, days: number) {

const results = await db.metrics.aggregate([

{

$match: {

type: type,

queuedAt: { $gte: new Date(Date.now() - days * 86400000) }

}

},

{

$group: {

_id: "$channel",

total: { $sum: 1 },

// $gt null is true only when the field exists and is non-null;

// $ne null would also count documents where the field is missing

sent: { $sum: { $cond: [{ $gt: ["$sentAt", null] }, 1, 0] } },

delivered: { $sum: { $cond: [{ $gt: ["$deliveredAt", null] }, 1, 0] } },

viewed: { $sum: { $cond: [{ $gt: ["$viewedAt", null] }, 1, 0] } },

clicked: { $sum: { $cond: [{ $gt: ["$clickedAt", null] }, 1, 0] } }

}

}

]);

// Guard against division by zero when a stage has no events yet

const pct = (num: number, den: number) =>

den > 0 ? (num / den * 100).toFixed(2) + '%' : 'n/a';

return results.map(r => ({

channel: r._id,

deliveryRate: pct(r.sent, r.total),

reachRate: pct(r.delivered, r.sent),

openRate: pct(r.viewed, r.delivered),

ctr: pct(r.clicked, r.viewed)

}));

}

Looking back, designing a notification system wasn't about sending messages. It was operating a massive logistics center.

When a single-line notification became millions, every layer of design mattered. No queue means crashes, no templates means management hell, no priority means critical notifications get buried, no data means no improvement.

The core was user experience. Technically perfect delivery means nothing if users don't want it or timing is wrong. Success isn't measured by "how many we sent" but "how many valuable notifications we sent at the right moment."

Now I know: sending one notification and designing a system are completely different dimensions of the problem.