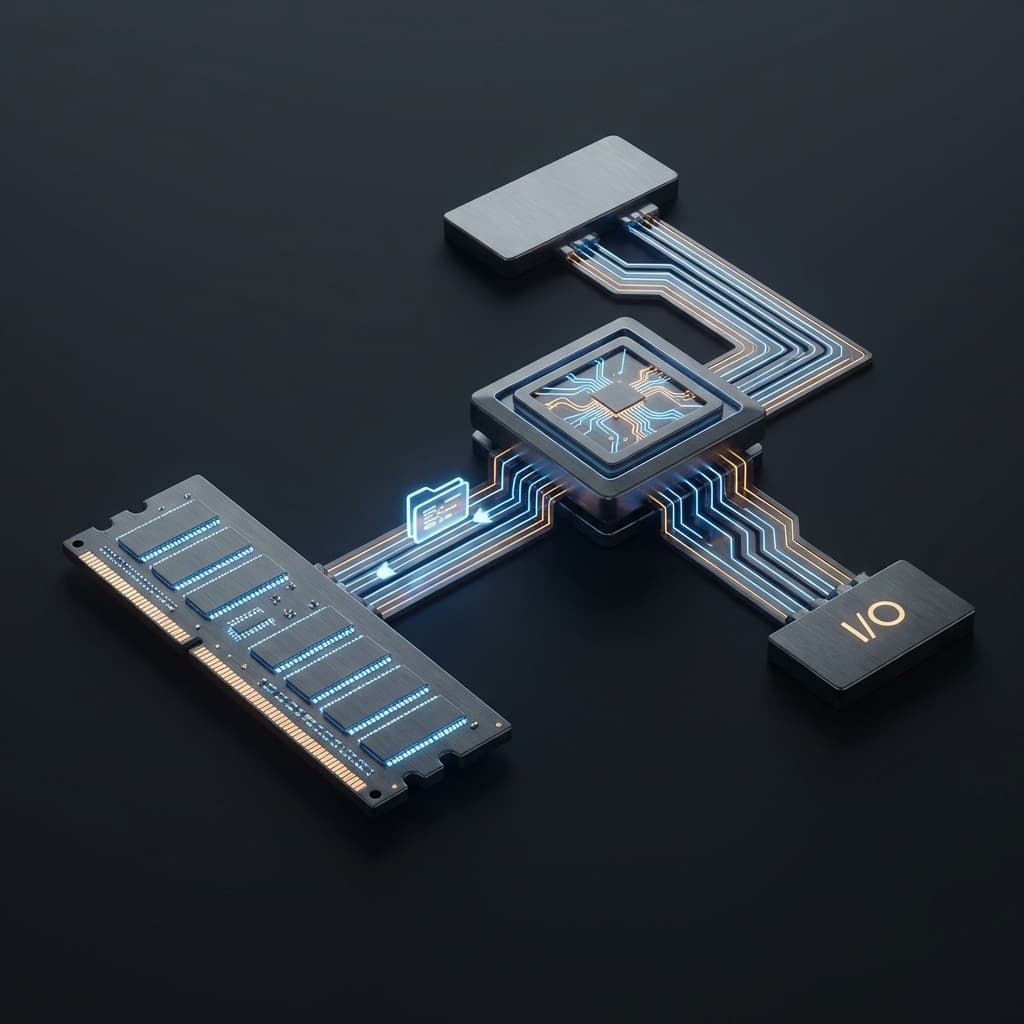

File Upload System Design: Handling Large Files Safely

10MB images upload fine but 2GB videos timeout. Chunked uploads, presigned URLs, and retry logic for robust file upload systems.


When I first implemented file uploads, it seemed straightforward. User selects an image, sends it to the server, server uploads to S3. Worked perfectly for files up to 10MB.

Then one day, a user tried to upload a 2GB video, and everything timed out. The logs showed server memory spiking and network bandwidth doubling. The cause was clear: every byte was moving twice, client → server → S3.

I finally understood. File uploads are like package delivery. Instead of the server receiving packages at the door and then carrying them to the warehouse, customers should ship directly to the warehouse.

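The most elegant solution I found was presigned URLs. Think of one as a "temporary access pass" for S3: a URL signed with your server's credentials that lets whoever holds it perform one specific operation on one specific object until it expires.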

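```typescript
// Server: Generate temporary upload URL
import { S3Client, PutObjectCommand } from '@aws-sdk/client-s3';
import { getSignedUrl } from '@aws-sdk/s3-request-presigner';

// Create the client once and reuse it across requests
const s3Client = new S3Client({ region: 'ap-northeast-2' });

async function generateUploadURL(filename: string, contentType: string) {
  // Don't trust the raw filename in the key: replace path separators and unusual characters
  const safeName = filename.replace(/[^a-zA-Z0-9._-]/g, '_');
  const key = `uploads/${Date.now()}-${safeName}`;

  const command = new PutObjectCommand({
    Bucket: 'my-uploads',
    Key: key,
    // ContentType is signed, so the client must send the same Content-Type header
    ContentType: contentType,
  });

  // URL valid for 15 minutes only
  const uploadURL = await getSignedUrl(s3Client, command, {
    expiresIn: 900,
  });

  return { uploadURL, key };
}
```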

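The flow:

1. The client asks the server for an upload URL.
2. The server validates the request and returns a presigned URL.
3. The client uploads the file directly to S3 using that URL.
4. The client tells the server the upload is complete.

The server no longer handles file data. It works like a delivery service that issues tracking numbers while customers drop their packages directly into the locker.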

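```typescript
// Client: Upload directly using presigned URL
// (the bucket's CORS configuration must allow PUT from your site's origin)
async function uploadFile(file: File) {
  // 1. Get upload URL from server
  const presignResponse = await fetch('/api/upload/presign', {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({
      filename: file.name,
      contentType: file.type,
    }),
  });
  if (!presignResponse.ok) {
    throw new Error(`Could not get upload URL: ${presignResponse.status}`);
  }
  const { uploadURL, key } = await presignResponse.json();

  // 2. Upload directly to S3 (bypassing server!)
  const uploadResponse = await fetch(uploadURL, {
    method: 'PUT',
    body: file,
    headers: {
      // Must match the ContentType the URL was signed with
      'Content-Type': file.type,
    },
  });

  if (!uploadResponse.ok) {
    throw new Error(`Upload failed: ${uploadResponse.status}`);
  }

  // 3. Notify server of completion
  await fetch('/api/upload/complete', {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({ key }),
  });

  return key;
}
```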

Sending a 2GB file in one request means starting over from scratch if the network drops. It's like loading a truck with everything you own: if it breaks down halfway, you have to reload it all. (A single S3 PUT is also capped at 5GB.)

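Multipart upload solves this. You split the file into parts, upload each one separately, and then have S3 combine them at the end. Every part except the last must be at least 5MB, and a single upload can have at most 10,000 parts.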

```typescript
// Multipart upload implementation.
// This calls S3 directly with SDK credentials, so as written it belongs on a trusted
// backend; from a browser, each UploadPart request would be presigned by your server.
import {
  S3Client,
  CreateMultipartUploadCommand,
  UploadPartCommand,
  CompleteMultipartUploadCommand,
  AbortMultipartUploadCommand,
} from '@aws-sdk/client-s3';

const s3Client = new S3Client({ region: 'ap-northeast-2' });

async function uploadLargeFile(file: File) {
  const bucket = 'my-uploads';
  const key = `large/${file.name}`;
  const chunkSize = 10 * 1024 * 1024; // 10MB per chunk (S3 minimum is 5MB, except the last part)
  const chunks = Math.ceil(file.size / chunkSize);

  // 1. Initialize multipart upload
  const createResponse = await s3Client.send(
    new CreateMultipartUploadCommand({ Bucket: bucket, Key: key, ContentType: file.type })
  );
  const uploadId = createResponse.UploadId!;

  try {
    const uploadedParts: { PartNumber: number; ETag?: string }[] = [];

    // 2. Upload each chunk sequentially (part numbers start at 1)
    for (let i = 0; i < chunks; i++) {
      const start = i * chunkSize;
      const end = Math.min(start + chunkSize, file.size);
      const chunk = file.slice(start, end);

      const partResponse = await s3Client.send(
        new UploadPartCommand({
          Bucket: bucket,
          Key: key,
          UploadId: uploadId,
          PartNumber: i + 1,
          Body: chunk,
        })
      );

      // S3 needs every part's ETag to assemble the final object
      uploadedParts.push({ PartNumber: i + 1, ETag: partResponse.ETag });
      console.log(`Uploaded part ${i + 1}/${chunks}`);
    }

    // 3. Combine all pieces
    await s3Client.send(
      new CompleteMultipartUploadCommand({
        Bucket: bucket,
        Key: key,
        UploadId: uploadId,
        MultipartUpload: { Parts: uploadedParts },
      })
    );
    console.log('Upload complete!');
  } catch (error) {
    // Abort so S3 stops storing (and billing for) the orphaned parts
    await s3Client.send(
      new AbortMultipartUploadCommand({ Bucket: bucket, Key: key, UploadId: uploadId })
    );
    throw error;
  }
}
```

Each part uploads independently, so if one fails you can retry just that part instead of the whole file. It's like shipping several boxes separately instead of one giant container.
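The loop above always uses 10MB parts. S3 caps a multipart upload at 10,000 parts, each between 5MB and 5GB (only the last part may be smaller). That means a fixed 10MB part size tops out at roughly 100GB. Below is a minimal sketch of a part planner that assumes those limits. planParts and PartRange are hypothetical names, not SDK APIs.

```typescript
// S3 multipart upload limits, per the Amazon S3 documentation
const MIN_PART_SIZE = 5 * 1024 * 1024; // 5 MiB; only the last part may be smaller
const MAX_PART_SIZE = 5 * 1024 * 1024 * 1024; // 5 GiB
const MAX_PARTS = 10_000;

interface PartRange {
  partNumber: number; // 1-based, as S3 expects
  start: number; // inclusive byte offset
  end: number; // exclusive byte offset, ready for file.slice(start, end)
}

// Hypothetical helper: use the preferred part size unless the file is so large that
// it would need more than MAX_PARTS parts, in which case grow the part size.
function planParts(fileSize: number, preferredPartSize = 10 * 1024 * 1024): PartRange[] {
  const partSize = Math.max(preferredPartSize, MIN_PART_SIZE, Math.ceil(fileSize / MAX_PARTS));
  if (partSize > MAX_PART_SIZE) {
    throw new Error('File is too large for one multipart upload');
  }

  const parts: PartRange[] = [];
  for (let start = 0; start < fileSize; start += partSize) {
    parts.push({
      partNumber: parts.length + 1,
      start,
      end: Math.min(start + partSize, fileSize),
    });
  }
  return parts;
}
```

Each entry maps directly onto file.slice(part.start, part.end) and PartNumber: part.partNumber in the upload loop.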

On unstable networks, uploads get interrupted. This is where the idea behind the tus protocol clicked for me: keep track of how far the upload got, and resume from there.

```typescript
// Resumable chunked upload with retry logic.
// uploadChunk is a placeholder for whatever sends one chunk to your backend
// (for example, a PUT to a presigned part URL); it is not defined in this post.
declare function uploadChunk(chunk: Blob, index: number): Promise<void>;

async function resumableUpload(file: File, onProgress?: (percent: number) => void) {
  const chunkSize = 5 * 1024 * 1024; // 5MB
  const chunks = Math.ceil(file.size / chunkSize);
  const maxAttempts = 3;

  // Store upload state in localStorage; lastModified keeps a different file with the same name and size from colliding
  const uploadKey = `upload_${file.name}_${file.size}_${file.lastModified}`;
  const savedState = localStorage.getItem(uploadKey);
  const completedChunks: number[] = savedState ? JSON.parse(savedState) : [];

  for (let i = 0; i < chunks; i++) {
    // Skip chunks that finished in an earlier session
    if (completedChunks.includes(i)) {
      onProgress?.((completedChunks.length / chunks) * 100);
      continue;
    }

    const start = i * chunkSize;
    const end = Math.min(start + chunkSize, file.size);
    const chunk = file.slice(start, end);

    let uploaded = false;

    // Try each chunk up to 3 times
    for (let attempt = 1; attempt <= maxAttempts && !uploaded; attempt++) {
      try {
        await uploadChunk(chunk, i);
        completedChunks.push(i);
        // Persist after every chunk so a reload can resume from here
        localStorage.setItem(uploadKey, JSON.stringify(completedChunks));
        uploaded = true;
        onProgress?.((completedChunks.length / chunks) * 100);
      } catch (error) {
        console.log(`Chunk ${i} failed (attempt ${attempt}/${maxAttempts})`);
        // Back off 1s, then 2s, before the next attempt
        if (attempt < maxAttempts) {
          await new Promise(resolve => setTimeout(resolve, 1000 * attempt));
        }
      }
    }

    if (!uploaded) {
      throw new Error(`Failed to upload chunk ${i} after ${maxAttempts} attempts`);
    }
  }

  // Clean up state after completion
  localStorage.removeItem(uploadKey);
}
```

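Even if the network drops or the tab reloads, the upload resumes from the last completed chunk, much like a checkpoint in a video game. With S3 multipart uploads, the UploadId must be persisted as well, so the same upload can be continued.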
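The retry loop above backs off linearly: 1s, then 2s. When many clients fail at the same moment (after a brief outage, say), they all retry in lockstep. A common alternative is exponential backoff with random jitter. Here is a minimal sketch. withRetry and its options are hypothetical names, and sleep is injectable so the logic can be tested without actually waiting.

```typescript
interface RetryOptions {
  maxAttempts?: number; // total tries, including the first
  baseDelayMs?: number;
  maxDelayMs?: number;
  sleep?: (ms: number) => Promise<void>; // injectable so tests don't really wait
}

// Hypothetical helper: retry with exponential backoff plus "full jitter"
async function withRetry<T>(operation: () => Promise<T>, options: RetryOptions = {}): Promise<T> {
  const {
    maxAttempts = 3,
    baseDelayMs = 1000,
    maxDelayMs = 30_000,
    sleep = (ms: number) => new Promise<void>(resolve => setTimeout(resolve, ms)),
  } = options;

  let lastError: unknown;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      return await operation();
    } catch (error) {
      lastError = error;
      if (attempt === maxAttempts) break;
      // The ceiling doubles each time (1s, 2s, 4s...); wait a random time below it
      const ceiling = Math.min(maxDelayMs, baseDelayMs * 2 ** (attempt - 1));
      await sleep(Math.random() * ceiling);
    }
  }
  throw lastError;
}
```

Inside the chunk loop, await withRetry(() => uploadChunk(chunk, i)) would replace the hand-written retry loop and keep the same three-attempt default.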

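Validate files before accepting them, on both the client and the server. Client-side checks give users fast feedback, but only server-side checks actually protect you.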

```typescript
// Client: Pre-upload validation (fast feedback for users, not a security boundary)
function validateFile(file: File) {
  // 1. File size limit (100MB)
  const maxSize = 100 * 1024 * 1024;
  if (file.size > maxSize) {
    throw new Error('File too large. Max 100MB');
  }

  // 2. File type validation (file.type is usually inferred by the browser from the extension)
  const allowedTypes = ['image/jpeg', 'image/png', 'image/webp', 'video/mp4'];
  if (!allowedTypes.includes(file.type)) {
    throw new Error('Invalid file type');
  }

  // 3. File extension validation. Both the name and the type are client-controlled,
  //    so this cannot stop spoofing; real content checks happen on the server.
  const extension = file.name.split('.').pop()?.toLowerCase();
  const allowedExtensions = ['jpg', 'jpeg', 'png', 'webp', 'mp4'];
  if (!extension || !allowedExtensions.includes(extension)) {
    throw new Error('Invalid file extension');
  }
}

// Server: Validate before generating presigned URL
async function validateUploadRequest(filename: string, contentType: string, size: number) {
  // Same validation logic on server. `size` is only what the client claims: a presigned
  // PUT URL generated as above does not cap the upload size, so enforce the limit with a
  // presigned POST (content-length-range condition) or check the object after upload.
  if (size > 100 * 1024 * 1024) {
    throw new Error('File too large');
  }

  // Verify MIME type matches extension (keep this map in sync with the client's list)
  const extension = filename.split('.').pop()?.toLowerCase();
  const mimeToExt: Record<string, string[]> = {
    'image/jpeg': ['jpg', 'jpeg'],
    'image/png': ['png'],
    'image/webp': ['webp'],
    'video/mp4': ['mp4'],
  };

  if (!mimeToExt[contentType]?.includes(extension || '')) {
    throw new Error('File extension and MIME type mismatch');
  }
}
```
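Keeping two copies of these rules in sync by hand is error-prone. One option is a single rule table in a module that both the client and the server import. Here is a minimal sketch, assuming your build can share code between the two. validateUploadMeta and ALLOWED_TYPES are hypothetical names. This still checks only what the client declares, so content checks after upload remain necessary.

```typescript
// Hypothetical shared module, imported by both the browser code and the API
const MAX_UPLOAD_BYTES = 100 * 1024 * 1024; // 100MB

// Single source of truth: each allowed MIME type and the extensions it may use
const ALLOWED_TYPES = new Map<string, string[]>([
  ['image/jpeg', ['jpg', 'jpeg']],
  ['image/png', ['png']],
  ['image/webp', ['webp']],
  ['video/mp4', ['mp4']],
]);

interface UploadMeta {
  name: string;
  type: string;
  size: number;
}

// Returns every problem found; an empty array means the metadata is acceptable
function validateUploadMeta({ name, type, size }: UploadMeta): string[] {
  const errors: string[] = [];
  if (size > MAX_UPLOAD_BYTES) {
    errors.push('File too large. Max 100MB');
  }

  // A Map lookup avoids surprises from inherited keys such as "constructor"
  const extensions = ALLOWED_TYPES.get(type);
  if (!extensions) {
    errors.push(`File type not allowed: ${type || '(none)'}`);
  } else {
    const dot = name.lastIndexOf('.');
    const extension = dot >= 0 ? name.slice(dot + 1).toLowerCase() : '';
    if (!extensions.includes(extension)) {
      errors.push('File extension and MIME type mismatch');
    }
  }
  return errors;
}
```

A browser File already has name, type, and size, so the client can call validateUploadMeta(file) directly. The server passes { name: filename, type: contentType, size }.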

Run virus scanning asynchronously after the upload lands, and don't serve the file until it passes. On AWS, one option is a Lambda function running ClamAV.

Images need processing after upload. Keep the original, and generate a thumbnail plus several resized variants.

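```typescript
// S3 upload event → Lambda trigger
// Make sure the trigger's prefix/suffix filter excludes the variant keys written below,
// or this function will keep re-processing its own output.
import { S3Client, GetObjectCommand, PutObjectCommand } from '@aws-sdk/client-s3';
import sharp from 'sharp';

// Placeholder for your ORM client (the call below follows Prisma's style)
declare const db: { files: { update(args: unknown): Promise<unknown> } };

const s3Client = new S3Client({});

async function processUploadedImage(s3Key: string) {
  // Without an extension, the replace() calls below would return the original key and overwrite it
  if (!/\.[^./]+$/.test(s3Key)) {
    throw new Error(`Expected a file extension in ${s3Key}`);
  }

  // 1. Fetch original image
  const { Body } = await s3Client.send(
    new GetObjectCommand({ Bucket: 'my-uploads', Key: s3Key })
  );
  const imageBuffer = await Body!.transformToByteArray();

  // 2. Resize to multiple dimensions
  const sizes = [
    { name: 'thumb', width: 150, height: 150 },
    { name: 'small', width: 400 },
    { name: 'medium', width: 800 },
    { name: 'large', width: 1200 },
  ];

  for (const size of sizes) {
    const resized = await sharp(imageBuffer)
      .resize(size.width, size.height, {
        fit: size.height ? 'cover' : 'inside', // crop the square thumbnail, scale the rest
        withoutEnlargement: true, // never upscale small originals
      })
      .webp({ quality: 85 }) // Convert to WebP
      .toBuffer();

    const newKey = s3Key.replace(/\.[^.]+$/, `-${size.name}.webp`);

    await s3Client.send(
      new PutObjectCommand({
        Bucket: 'my-uploads',
        Key: newKey,
        Body: resized,
        ContentType: 'image/webp',
      })
    );
  }

  // 3. Record processing completion in DB
  await db.files.update({
    where: { s3Key },
    data: {
      processed: true,
      thumbnailKey: s3Key.replace(/\.[^.]+$/, '-thumb.webp'),
    },
  });
}
```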

Image processing is CPU-intensive, so running it asynchronously in serverless functions keeps that load off your API servers and scales with upload volume.
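Two details cause trouble in the Lambda trigger itself. First, object keys in S3 event notifications are URL-encoded, so a space arrives as '+'. Second, if the trigger covers the whole bucket, the variants this function writes will trigger it again. Below is a minimal sketch of pre-filtering the event. The interface models only the fields used here, and VARIANT_SUFFIX assumes the naming scheme from processUploadedImage.

```typescript
// Minimal slice of the S3 event notification shape used here
interface S3EventLike {
  Records: { s3: { bucket: { name: string }; object: { key: string } } }[];
}

// Keys of the resized variants produced by processUploadedImage (e.g. photo-thumb.webp)
const VARIANT_SUFFIX = /-(thumb|small|medium|large)\.webp$/;

// S3 event notifications URL-encode object keys, with spaces sent as '+'
function decodeS3Key(rawKey: string): string {
  return decodeURIComponent(rawKey.replace(/\+/g, ' '));
}

// Keys that still need processing: decoded, and never our own generated variants
function keysToProcess(event: S3EventLike): string[] {
  return event.Records
    .map(record => decodeS3Key(record.s3.object.key))
    .filter(key => !VARIANT_SUFFIX.test(key));
}
```

A handler would then call processUploadedImage for each key in keysToProcess(event). Scoping the trigger with a prefix or suffix filter, or writing variants under a separate prefix, is sturdier than name matching, because a user could upload a file whose name happens to end in -thumb.webp.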

Long uploads make users anxious, so show progress. fetch doesn't expose upload progress events, which is why this example uses XMLHttpRequest.

```tsx
// Track upload progress with XMLHttpRequest
import { useState } from 'react';

function uploadWithProgress(file: File, url: string, onProgress: (percent: number) => void) {
  return new Promise<void>((resolve, reject) => {
    const xhr = new XMLHttpRequest();

    xhr.upload.addEventListener('progress', (e) => {
      if (e.lengthComputable) {
        const percent = (e.loaded / e.total) * 100;
        onProgress(percent);
      }
    });

    xhr.addEventListener('load', () => {
      // Treat any 2xx status as success
      if (xhr.status >= 200 && xhr.status < 300) {
        resolve();
      } else {
        reject(new Error(`Upload failed: ${xhr.status}`));
      }
    });

    xhr.addEventListener('error', () => reject(new Error('Network error')));
    xhr.addEventListener('abort', () => reject(new Error('Upload aborted')));

    xhr.open('PUT', url);
    // Must match the Content-Type the presigned URL was signed with
    xhr.setRequestHeader('Content-Type', file.type);
    xhr.send(file);
  });
}

// Placeholder: a small wrapper around the /api/upload/presign request shown earlier
declare function getPresignedURL(filename: string, contentType: string): Promise<{ uploadURL: string; key: string }>;

// Usage in React component
function FileUploader() {
  const [progress, setProgress] = useState(0);

  const handleUpload = async (file: File) => {
    const { uploadURL } = await getPresignedURL(file.name, file.type);
    await uploadWithProgress(file, uploadURL, setProgress);
  };

  return (
    <div>
      <input
        type="file"
        onChange={(e) => {
          // files can be null or empty if the user cancels the picker
          const file = e.target.files?.[0];
          if (file) handleUpload(file);
        }}
      />
      {progress > 0 && <progress value={progress} max={100} />}
    </div>
  );
}
```
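With chunked uploads, each chunk is its own request with its own progress events. One way to drive a single progress bar is to record the bytes sent for each chunk and sum them. Here is a minimal sketch. ChunkedProgress is a hypothetical helper, not part of any library.

```typescript
// Hypothetical helper: merge per-chunk upload progress into one overall percentage
class ChunkedProgress {
  private readonly loadedByChunk = new Map<number, number>();
  private readonly totalBytes: number;
  private readonly onProgress: (percent: number) => void;

  constructor(totalBytes: number, onProgress: (percent: number) => void) {
    this.totalBytes = totalBytes;
    this.onProgress = onProgress;
  }

  // Call with a chunk's bytes sent so far (e.g. e.loaded from that chunk's xhr.upload 'progress' event)
  update(chunkIndex: number, loadedBytes: number): void {
    this.loadedByChunk.set(chunkIndex, loadedBytes);
    let loaded = 0;
    for (const bytes of this.loadedByChunk.values()) {
      loaded += bytes;
    }
    const percent = this.totalBytes === 0 ? 100 : (loaded / this.totalBytes) * 100;
    this.onProgress(Math.min(100, percent));
  }
}
```

The helper stores the latest value per chunk instead of adding deltas. As a result, a retried chunk that restarts from 0 lowers the bar rather than counting its bytes twice.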

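Each storage option (AWS S3, Cloudflare R2, Supabase Storage) comes with its own trade-offs in pricing, egress fees, and built-in features. Supabase Storage, for example, handles both uploads and public URLs through one client:

```typescript
// Supabase Storage example
import { createClient } from '@supabase/supabase-js';

// SUPABASE_URL and SUPABASE_KEY are placeholders for your project's URL and anon key
const supabase = createClient(SUPABASE_URL, SUPABASE_KEY);

async function uploadToSupabase(file: File) {
  const { data, error } = await supabase.storage
    .from('uploads')
    .upload(`public/${Date.now()}-${file.name}`, file, {
      cacheControl: '3600', // seconds
      upsert: false, // fail instead of overwriting an existing object
    });

  if (error) throw error;

  // Public URL (includes CDN); only works if the 'uploads' bucket is public
  const { data: urlData } = supabase.storage
    .from('uploads')
    .getPublicUrl(data.path);

  return urlData.publicUrl;
}
```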

I chose Cloudflare R2 behind a CDN. R2's free egress was the deciding factor.

Once files are in S3, put a CDN in front of the bucket. It is essential for fast delivery to users around the world, and it lets the bucket itself stay private.

```hcl
// CloudFront distribution config (Terraform example)
resource "aws_cloudfront_distribution" "uploads_cdn" {
  origin {
    domain_name = aws_s3_bucket.uploads.bucket_regional_domain_name
    origin_id   = "S3-uploads"

    // Origin access identity keeps the bucket readable only through CloudFront.
    // AWS now recommends origin access control (aws_cloudfront_origin_access_control) for new setups.
    s3_origin_config {
      origin_access_identity = aws_cloudfront_origin_access_identity.uploads.cloudfront_access_identity_path
    }
  }

  enabled         = true
  is_ipv6_enabled = true

  default_cache_behavior {
    allowed_methods  = ["GET", "HEAD", "OPTIONS"]
    cached_methods   = ["GET", "HEAD"]
    target_origin_id = "S3-uploads"

    // Legacy cache settings; cache policies (cache_policy_id) are the newer alternative
    forwarded_values {
      query_string = false

      cookies {
        forward = "none"
      }
    }

    viewer_protocol_policy = "redirect-to-https"
    min_ttl                = 0
    default_ttl            = 86400    # 1 day
    max_ttl                = 31536000 # 1 year
  }

  restrictions {
    geo_restriction {
      restriction_type = "none"
    }
  }

  viewer_certificate {
    cloudfront_default_certificate = true
  }
}
```

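Many CDNs can also resize and convert images on the fly when you add transformation parameters to the URL. The parameter names and URL format depend on the provider:

```typescript
// Query-string transformations (parameter names vary by provider; Cloudflare's image
// resizing, for example, uses a /cdn-cgi/image/<options>/ path format instead)
const imageUrl = 'https://cdn.example.com/image.jpg';
const thumbnail = `${imageUrl}?width=300&height=300&fit=cover`;
const webp = `${imageUrl}?format=webp&quality=85`;
```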
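Appending '?width=...' by string concatenation breaks when the URL already has a query string, such as a cache-busting ?v=2. Building the URL with the standard URL API avoids that. Here is a minimal sketch. withTransforms is a hypothetical name, and the parameter names are whatever your provider expects.

```typescript
// Hypothetical helper: add transformation parameters without breaking existing query strings
function withTransforms(imageUrl: string, params: Record<string, string | number>): string {
  const url = new URL(imageUrl);
  for (const [name, value] of Object.entries(params)) {
    // set() replaces an existing parameter of the same name instead of duplicating it
    url.searchParams.set(name, String(value));
  }
  return url.toString();
}
```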

Upload endpoints are a common attack surface, so you need several layers of defense.

1. Rate Limiting

```typescript
// Per-user upload limits (in-memory, so this only works within a single process;
// use a shared store such as Redis when running multiple instances)
const uploadLimiter = new Map<string, number[]>();

function checkRateLimit(userId: string) {
  const now = Date.now();
  const userUploads = uploadLimiter.get(userId) || [];

  // Keep only uploads from last hour
  const recentUploads = userUploads.filter(time => now - time < 3600000);

  if (recentUploads.length >= 50) {
    throw new Error('Too many uploads. Try again later.');
  }

  recentUploads.push(now);
  uploadLimiter.set(userId, recentUploads);
}
```

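2. File Signature (Magic Number) Verification

```typescript
// Verify actual file type using magic numbers (signature bytes at the start of the file).
// Pass at least the first 12 bytes. With direct-to-S3 uploads, read them from the stored
// object after upload (e.g. a GetObject request with Range: 'bytes=0-11').
function verifyFileType(buffer: Buffer): string {
  const hex = (start: number, end: number) => buffer.subarray(start, end).toString('hex');
  const ascii = (start: number, end: number) => buffer.subarray(start, end).toString('latin1');

  if (hex(0, 3) === 'ffd8ff') return 'image/jpeg';
  if (hex(0, 8) === '89504e470d0a1a0a') return 'image/png';
  if (ascii(0, 6) === 'GIF87a' || ascii(0, 6) === 'GIF89a') return 'image/gif';

  // RIFF is a generic container (WebP, AVI, WAV...): bytes 8-11 name the format
  if (ascii(0, 4) === 'RIFF' && ascii(8, 12) === 'WEBP') return 'image/webp';

  // WebM (like Matroska) starts with the EBML header 1A 45 DF A3
  if (hex(0, 4) === '1a45dfa3') return 'video/webm';

  // MP4 has an 'ftyp' box at offset 4 (MOV and HEIC share it; check the brand if that matters)
  if (ascii(4, 8) === 'ftyp') return 'video/mp4';

  throw new Error('Unknown or invalid file type');
}
```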

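3. Short-Lived, Single-Use Upload URLs

```typescript
// Short expiration for presigned URLs (inside the presign handler, where
// s3Client, command, userId and db are already in scope)
const uploadURL = await getSignedUrl(s3Client, command, {
  expiresIn: 900, // 15 minutes
});

// S3 accepts this URL as many times as it is used until it expires, so
// "single use" has to be enforced by your own server: record the object key
// and have the completion endpoint accept each key only once.
await db.uploadTokens.create({
  data: {
    key: command.input.Key,
    userId,
    expiresAt: new Date(Date.now() + 900 * 1000),
    used: false,
  },
});
```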
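The completion endpoint is where single use actually gets enforced. Below is a minimal sketch in which an in-memory Map stands in for the uploadTokens table. UploadTokenStore, issue, and claim are hypothetical names. In production you would also confirm that the object exists (for example, with a HeadObject request) before accepting it.

```typescript
// Hypothetical in-memory stand-in for the uploadTokens table
interface UploadToken {
  userId: string;
  used: boolean;
}

class UploadTokenStore {
  private readonly tokens = new Map<string, UploadToken>();

  // Presign handler: remember which user was issued a URL for this object key
  issue(key: string, userId: string): void {
    this.tokens.set(key, { userId, used: false });
  }

  // Completion handler: returns true at most once per key, and only for the issuing user
  claim(key: string, userId: string): boolean {
    const token = this.tokens.get(key);
    if (!token || token.used || token.userId !== userId) {
      return false;
    }
    token.used = true;
    return true;
  }
}
```

With a real database, claim should be a single conditional update that flips used only while it is still false. That way, two concurrent completion requests can't both succeed.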

In the end, file upload systems are about "how to efficiently move data, store it safely, and serve it fast." The key principles: minimize server involvement, distribute work, and prepare for failures. Those three things were the essence.