Circuit Breaker Pattern: Preventing Cascading Failures and Thundering Herds

1. Don't Let the Fire Spread

Imagine an electrical short circuit happens in a neighbor’s apartment.

If the entire building's wiring were connected without any safety fuses, that single spark could travel through the wires and fry your electronics too.

To prevent this, every house has a Circuit Breaker.

When it detects a power surge, it physically breaks the connection (it "trips"). It sacrifices one small connection to save the whole building from burning down.

In Microservices Architecture, we face the same risk.

If Service A calls Service B, and B is hanging (unresponsive) due to high load, Service A's threads will wait for B until they time out.

Eventually, all of Service A's threads are stuck waiting. Service A stops responding because it has no more threads to handle new requests. Service C, which calls A, also gets stuck.

This is called a Cascading Failure. Within seconds, one bad service can take down your entire platform like a domino effect.
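To make the thread-exhaustion step concrete, here is a small self-contained Java sketch. It assumes a fixed pool of two threads stands in for Service A's request threads, and a `CountDownLatch` that is not released until cleanup stands in for the hung call to Service B; the class and method names are illustrative.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

// Illustrative only: a 2-thread pool plays Service A, and a latch that is not
// released until cleanup plays the hung call to Service B.
class ThreadExhaustionDemo {

    /** Returns true if a fresh request is served within waitMillis. */
    static boolean newRequestServed(long waitMillis) {
        ExecutorService serviceAThreads = Executors.newFixedThreadPool(2);
        CountDownLatch serviceBResponds = new CountDownLatch(1);
        try {
            // Two in-flight requests each block on the unresponsive Service B.
            for (int i = 0; i < 2; i++) {
                serviceAThreads.submit(() -> {
                    serviceBResponds.await(); // stands in for a call to B that never returns
                    return null;
                });
            }
            // A new request that doesn't even need B has to queue: no thread is free.
            Future<String> newRequest = serviceAThreads.submit(() -> "OK");
            newRequest.get(waitMillis, TimeUnit.MILLISECONDS);
            return true;
        } catch (TimeoutException | ExecutionException e) {
            return false;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        } finally {
            serviceBResponds.countDown(); // cleanup: release the stuck threads
            serviceAThreads.shutdownNow();
        }
    }
}
```

With both threads parked on the hung call, even a trivial request that never touches Service B cannot be served, which is exactly how the failure spreads upstream to Service C.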

The Circuit Breaker Pattern is the software equivalent of that electrical breaker. It wraps calls to a protected function, monitors them for failures, and stops forwarding calls once failures pile up.

2. The 3 States of a Circuit Breaker

A Circuit Breaker acts as a proxy that monitors failures. It works like a Finite State Machine with 3 distinct states:

2.1. Closed (Normal Operation)

- Condition: Everything is fine. The circuit is closed, allowing current (requests) to flow.

- Behavior: Requests pass through to the downstream service normally.

- Monitoring: It counts failures. If the failure rate exceeds a threshold (e.g., 50% errors in the last 10 seconds), it trips and switches to Open.

- Sliding Window: It usually computes the error rate over a sliding window, either count-based (the last N calls) or time-based (the calls in the last N seconds). Old results drop off as the window moves.
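A count-based window can be sketched as a ring buffer of the last N outcomes. This is a minimal illustration, not Resilience4j's internal implementation; `CountBasedWindow` and its methods are hypothetical names.

```java
// Minimal count-based sliding window: a ring buffer of the last N call outcomes.
// When the buffer is full, each new outcome overwrites (evicts) the oldest one.
class CountBasedWindow {
    private final boolean[] failed; // ring buffer: true = the call failed
    private int next = 0;           // slot the next outcome will overwrite
    private int recorded = 0;       // number of filled slots (<= size)
    private int failures = 0;       // failures currently inside the window

    CountBasedWindow(int size) {
        if (size <= 0) throw new IllegalArgumentException("size must be positive");
        this.failed = new boolean[size];
    }

    void record(boolean wasFailure) {
        if (recorded == failed.length && failed[next]) {
            failures--; // the oldest outcome was a failure and is about to drop off
        }
        failed[next] = wasFailure;
        if (wasFailure) failures++;
        next = (next + 1) % failed.length;
        if (recorded < failed.length) recorded++;
    }

    /** Failure rate in percent over the calls currently in the window. */
    double failureRatePercent() {
        return recorded == 0 ? 0.0 : 100.0 * failures / recorded;
    }

    boolean isFull() {
        return recorded == failed.length;
    }
}
```

A time-based window follows the same idea, except outcomes are aggregated into per-second buckets and buckets older than N seconds are evicted.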

2.2. Open (Failure Mode)

- Condition: The threshold was exceeded. The "fuse" has blown.

- Behavior: Requests are blocked immediately. It does NOT send the request to the downstream service. Instead, it returns a Fail-Fast error or a Fallback response.

- Purpose: To give the failing service time to recover. Bombarding a dying server with more requests is the worst thing you can do.

- Transition: After a configurable sleep window (e.g., 5 seconds), it switches to Half-Open.

2.3. Half-Open (Testing Mode)

- Condition: The sleep window has passed. We need to check if the service is back online.

- Behavior: It allows a limited number of probe requests (e.g., 1 request) to pass through.

- Success: The service seems healthy! Switch back to Closed and reset failure counters.

- Failure: Still dead. Switch back to Open and reset the sleep timer to wait again.

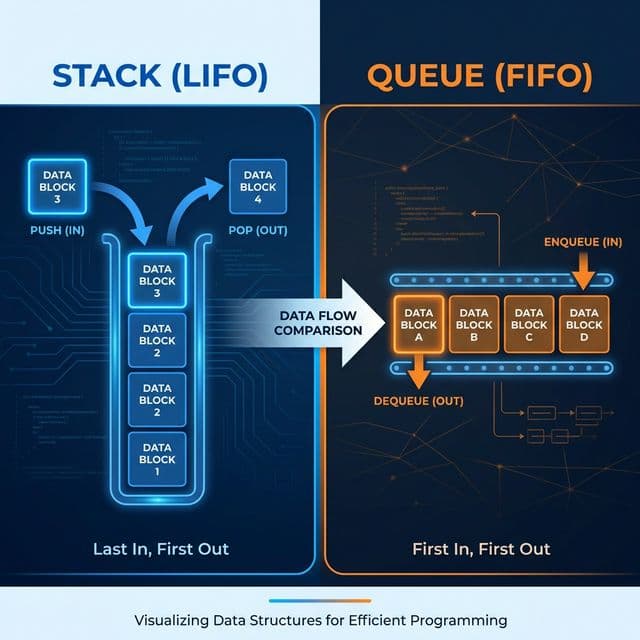
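The three states and their transitions can be sketched as a small hand-rolled state machine. This is an illustrative sketch, not how Resilience4j is implemented: `SimpleCircuitBreaker`, its constructor parameters, and the injectable millisecond clock are assumptions made so the transitions are easy to follow and test. It trips when the failure rate over the last `windowSize` calls reaches the threshold, and it uses coarse `synchronized` locking for simplicity.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.function.LongSupplier;
import java.util.function.Supplier;

// Illustrative three-state circuit breaker; not Resilience4j's implementation.
class SimpleCircuitBreaker {
    enum State { CLOSED, OPEN, HALF_OPEN }

    private final int windowSize;              // CLOSED: evaluate the last N calls
    private final double failureRateThreshold; // percent; trip at or above this rate
    private final long openWaitMillis;         // sleep window before HALF_OPEN
    private final int permittedProbes;         // probe calls allowed in HALF_OPEN
    private final LongSupplier clockMillis;    // injectable clock, e.g. System::currentTimeMillis

    private final Deque<Boolean> window = new ArrayDeque<>(); // true = failed call
    private State state = State.CLOSED;
    private long openedAt;
    private int probesStarted;
    private int probesSucceeded;

    SimpleCircuitBreaker(int windowSize, double failureRateThreshold,
                         long openWaitMillis, int permittedProbes, LongSupplier clockMillis) {
        this.windowSize = windowSize;
        this.failureRateThreshold = failureRateThreshold;
        this.openWaitMillis = openWaitMillis;
        this.permittedProbes = permittedProbes;
        this.clockMillis = clockMillis;
    }

    /** Wraps a protected call; returns the fallback when blocked or when the call fails. */
    <T> T call(Supplier<T> action, Supplier<T> fallback) {
        if (!tryAcquirePermission()) {
            return fallback.get(); // OPEN (or no probe slot left): fail fast
        }
        T result;
        try {
            result = action.get();
        } catch (RuntimeException e) {
            onResult(true);
            return fallback.get();
        }
        onResult(false);
        return result;
    }

    synchronized State state() {
        // OPEN -> HALF_OPEN once the sleep window has elapsed.
        if (state == State.OPEN && clockMillis.getAsLong() - openedAt >= openWaitMillis) {
            state = State.HALF_OPEN;
            probesStarted = 0;
            probesSucceeded = 0;
        }
        return state;
    }

    private synchronized boolean tryAcquirePermission() {
        State current = state();
        if (current == State.CLOSED) return true;
        if (current == State.HALF_OPEN && probesStarted < permittedProbes) {
            probesStarted++;
            return true;
        }
        return false;
    }

    private synchronized void onResult(boolean failed) {
        if (state == State.CLOSED) {
            window.addLast(failed);
            if (window.size() > windowSize) window.removeFirst(); // oldest result drops off
            long failures = window.stream().filter(f -> f).count();
            if (window.size() == windowSize
                    && 100.0 * failures / windowSize >= failureRateThreshold) {
                trip(); // CLOSED -> OPEN
            }
        } else if (state == State.HALF_OPEN) {
            if (failed) {
                trip(); // probe failed: back to OPEN, restart the sleep timer
            } else if (++probesSucceeded >= permittedProbes) {
                state = State.CLOSED; // probes succeeded: HALF_OPEN -> CLOSED
                window.clear();       // reset failure counters
            }
        }
        // Results that arrive while OPEN are ignored.
    }

    private void trip() { // caller holds the lock
        state = State.OPEN;
        openedAt = clockMillis.getAsLong();
    }
}
```

For example, `new SimpleCircuitBreaker(10, 50.0, 5_000, 1, System::currentTimeMillis)` mirrors the example numbers from the state descriptions (50% threshold, 5-second sleep window, one probe request) with a 10-call window.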

3. The Danger of Retries and Thundering Herds

A common mistake is adding aggressive Retries (e.g., retry(3) on every call) without a Circuit Breaker.

This leads to the Thundering Herd problem.

Scenario: Inventory Service is slow because the Database is locking up.

- Order Service calls Inventory. The call times out after 3s.

- Order Service retries immediately, assuming it was a transient glitch.

- User sees a spinner, gets frustrated, and clicks "Buy" again.

- Inventory Service, already struggling, now receives 3x to 5x the normal traffic load due to automatic retries and user retries.

- Inventory Service crashes completely.

Circuit Breakers stop this. They say, "Stop hitting him, he's already dead!"

By blocking requests, they cut the load on the struggling service to (almost) zero, allowing the Database to clear its locks and recover naturally.
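The fix is to make every retry ask the breaker first. In the sketch below, `GuardedRetry` and the `circuitClosed` check are hypothetical stand-ins for a real breaker's state; in Resilience4j you would typically combine its `Retry` and `CircuitBreaker` modules instead of hand-writing this loop.

```java
import java.util.function.BooleanSupplier;
import java.util.function.Supplier;

// Hypothetical helper: retry a call up to maxAttempts times, but ask the circuit
// breaker (modelled here as a BooleanSupplier) for permission before every attempt.
class GuardedRetry {

    static <T> T callWithRetry(Supplier<T> downstream, int maxAttempts,
                               BooleanSupplier circuitClosed, Supplier<T> fallback) {
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            if (!circuitClosed.getAsBoolean()) {
                return fallback.get(); // breaker is open: stop sending traffic downstream
            }
            try {
                return downstream.get();
            } catch (RuntimeException e) {
                // Failed attempt; loop around and re-check the breaker before retrying.
            }
        }
        return fallback.get(); // all attempts failed
    }
}
```

Once the breaker reports open, the remaining attempts return the fallback immediately instead of adding to the 3x to 5x load on the Inventory Service.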

4. Resilience Patterns: More than just Circuit Breakers

Ideally, Circuit Breakers are paired with other patterns.

4.1. The Bulkhead Pattern

Ships are divided into watertight compartments (Bulkheads). If the hull is breached, water fills only one compartment, and the ship stays afloat.

In software, use separate Thread Pools or Semaphores for different downstream services. If the Payment Service is slow, it consumes all threads in Pool-Payment, but Pool-Inventory remains free for other users.
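A semaphore-based bulkhead fits in a few lines with `java.util.concurrent.Semaphore`. The sketch assumes one fixed permit count per downstream service (the names "payment" and "inventory" are hypothetical) and rejects calls immediately when a compartment is full rather than queueing them.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.Semaphore;
import java.util.function.Supplier;

// Sketch of a semaphore-based bulkhead: each downstream service gets its own
// fixed number of concurrent-call permits, so one slow service can't take them all.
class Bulkheads {
    private final Map<String, Semaphore> permits = new ConcurrentHashMap<>();

    Bulkheads(Map<String, Integer> maxConcurrentCalls) {
        maxConcurrentCalls.forEach((service, max) -> permits.put(service, new Semaphore(max)));
    }

    <T> T call(String service, Supplier<T> action, Supplier<T> rejected) {
        Semaphore compartment = permits.get(service);
        if (compartment == null) throw new IllegalArgumentException("unknown service " + service);
        if (!compartment.tryAcquire()) {
            return rejected.get(); // this compartment is full; the others are unaffected
        }
        try {
            return action.get();
        } finally {
            compartment.release(); // always hand the permit back
        }
    }

    int availablePermits(String service) {
        return permits.get(service).availablePermits();
    }
}
```

For example, with `new Bulkheads(Map.of("payment", 10, "inventory", 10))`, ten slow payment calls exhaust only the payment permits while inventory calls keep getting through.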

4.2. Rate Limiter

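Controls the rate of traffic sent to or received from a service. It protects a service from traffic spikes and abusive clients (e.g., DDoS attacks), and it keeps your own service from becoming a noisy neighbor to the services it calls.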
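One simple way to enforce such a limit is a fixed window: allow at most N calls per period and reject the rest. The sketch below is a hypothetical `FixedWindowRateLimiter` whose parameter names loosely echo Resilience4j's `limitForPeriod` and `limitRefreshPeriod` settings; it is not that library's implementation, and other algorithms exist that smooth out bursts better.

```java
import java.util.function.LongSupplier;

// Fixed-window rate limiter sketch: at most limitForPeriod permits per periodMillis.
class FixedWindowRateLimiter {
    private final int limitForPeriod;
    private final long periodMillis;
    private final LongSupplier clockMillis; // injectable clock for testing
    private long windowStart;
    private int used;

    FixedWindowRateLimiter(int limitForPeriod, long periodMillis, LongSupplier clockMillis) {
        this.limitForPeriod = limitForPeriod;
        this.periodMillis = periodMillis;
        this.clockMillis = clockMillis;
        this.windowStart = clockMillis.getAsLong();
    }

    synchronized boolean tryAcquire() {
        long now = clockMillis.getAsLong();
        if (now - windowStart >= periodMillis) {
            // A new period has started: align the window start and refill permits.
            windowStart += ((now - windowStart) / periodMillis) * periodMillis;
            used = 0;
        }
        if (used < limitForPeriod) {
            used++;
            return true;
        }
        return false; // over the limit: reject (or queue) the call
    }
}
```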

4.3. Time Limiter

Sets a strict time limit on the duration of an execution. It interrupts the thread if it takes too long, freeing up resources faster than waiting for a TCP timeout.
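A time limiter can be sketched with a plain `Future` and a timeout. `SimpleTimeLimiter` is a hypothetical name; the calls it relies on (`Future.get(timeout, unit)` and `Future.cancel(true)`) are standard `java.util.concurrent` APIs.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;
import java.util.function.Supplier;

// Time limiter sketch: run the task on an executor, wait at most limitMillis,
// then cancel it (interrupting the worker thread) and return a fallback.
class SimpleTimeLimiter {
    private final ExecutorService executor;

    SimpleTimeLimiter(ExecutorService executor) {
        this.executor = executor;
    }

    <T> T callWithin(Callable<T> task, long limitMillis, Supplier<T> fallback) {
        Future<T> future = executor.submit(task);
        try {
            return future.get(limitMillis, TimeUnit.MILLISECONDS);
        } catch (TimeoutException e) {
            future.cancel(true); // interrupt the worker instead of waiting for a TCP timeout
            return fallback.get();
        } catch (ExecutionException e) {
            return fallback.get(); // the task itself threw
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return fallback.get();
        }
    }
}
```

Note that `cancel(true)` only interrupts the worker thread; the task actually stops early only if it responds to interruption, which some blocking I/O does not.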

5. Implementation (Java / Resilience4j)

Resilience4j is a lightweight fault tolerance library, originally designed for Java 8 and functional programming. It is the modern replacement for Netflix Hystrix, which is now in maintenance mode.

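```java
import io.github.resilience4j.circuitbreaker.CircuitBreaker;
import io.github.resilience4j.circuitbreaker.CircuitBreakerConfig;
import io.vavr.control.Try; // from the Vavr library; add it if your build doesn't already include it
import java.time.Duration;
import java.util.function.Supplier;

// Configuration
CircuitBreakerConfig config = CircuitBreakerConfig.custom()
    .failureRateThreshold(50) // Trip if 50% fail
    .waitDurationInOpenState(Duration.ofMillis(1000)) // Wait 1s
    .permittedNumberOfCallsInHalfOpenState(2) // Allow 2 probes
    .slidingWindowSize(10) // Look at last 10 calls
    .build();

// Usage (backendService is your existing client for the downstream service)
CircuitBreaker circuitBreaker = CircuitBreaker.of("backendService", config);
Supplier<String> decoratedSupplier = CircuitBreaker
    .decorateSupplier(circuitBreaker, () -> backendService.doSomething());

// Execute with Fallback
String result = Try.ofSupplier(decoratedSupplier)
    .recover(throwable -> "Fallback Response").get();
```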

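In a Spring Boot application, the Resilience4j starter lets you apply the breaker declaratively with `@CircuitBreaker` (from `io.github.resilience4j.circuitbreaker.annotation`). The method named in `fallbackMethod` must live in the same class, return the same type, and take the original parameters plus a trailing exception parameter:

```java
@CircuitBreaker(name = "backendA", fallbackMethod = "fallback")
public String remoteCall() {
    return restTemplate.getForObject("/api", String.class);
}

// Same return type as remoteCall(), plus a trailing Throwable parameter
public String fallback(Throwable t) {
    return "Fallback Response";
}
```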

6. Service Mesh (Istio/Linkerd) vs Application Level

You have two choices for implementing this pattern:

- Application Level (Resilience4j, Polly):
  - Pros: Fine-grained control, custom fallback logic (e.g., return cached data).
  - Cons: Language specific, requires code changes.
- Infrastructure Level (Istio, Envoy):
  - Pros: Language agnostic (works for Go, Python, Java), centralized management, no code changes.
  - Cons: Coarse-grained (usually just returns a 503 error), adds the latency of an extra network hop through the proxy.

Recommendation: Use Service Mesh for global safety and traffic control. Use Application Level when you need a smart Business Fallback (e.g., "Main recommendation engine is down? Show 'Trending Now' list instead").
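The "Trending Now" idea can be sketched as an application-level fallback chain: try the main engine, fall back to this user's last good result, and finally to a generic trending list. `RecommendationService` and both data sources are hypothetical. In practice the engine call would be the one wrapped by the circuit breaker, so an open breaker simply shows up here as an exception (Resilience4j throws `CallNotPermittedException` in that case).

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;
import java.util.function.Supplier;

// Hypothetical business fallback chain for a recommendations widget.
class RecommendationService {
    private final Function<String, List<String>> engine; // main engine call (breaker-protected)
    private final Supplier<List<String>> trendingNow;    // cheap, generic fallback source
    private final Map<String, List<String>> lastGood = new ConcurrentHashMap<>();

    RecommendationService(Function<String, List<String>> engine,
                          Supplier<List<String>> trendingNow) {
        this.engine = engine;
        this.trendingNow = trendingNow;
    }

    List<String> recommendationsFor(String userId) {
        try {
            List<String> fresh = engine.apply(userId);
            lastGood.put(userId, fresh); // remember this user's last good answer
            return fresh;
        } catch (RuntimeException e) {   // engine down, or the breaker is open
            List<String> cached = lastGood.get(userId);
            return cached != null ? cached : trendingNow.get();
        }
    }
}
```

The cache here is unbounded for brevity; a real one would cap its size and expire stale entries.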

7. Advanced Configuration Guide

Setting the right numbers is harder than writing the code.

7.1. Failure Rate Threshold

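Don't set it to 100%: the breaker would then trip only when every single call fails, so a service failing 90% of the time would keep receiving traffic. Usually, 50% is a safe starting point. If half your requests fail, the service is effectively dead.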

7.2. Slow Call Duration Threshold

Sometimes a service isn't failing outright (e.g., returning HTTP 500 errors); it's just incredibly slow.

You can configure the breaker to treat any call taking longer than 2 seconds as a failure, even if it eventually succeeds. This lets the breaker trip before slow calls tie up every available thread (thread starvation).
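The classification rule can be sketched as a small policy object. This hypothetical `SlowCallPolicy` simply folds slow calls into the failure count, matching the description above; Resilience4j itself tracks slow calls as a separate rate, configured with `slowCallDurationThreshold` and `slowCallRateThreshold`.

```java
import java.time.Duration;

// Sketch: decide whether a finished call should count against the breaker.
// Folding slow successes into failures is a simplification of the idea.
class SlowCallPolicy {
    private final Duration slowCallDurationThreshold; // e.g. Duration.ofSeconds(2)

    SlowCallPolicy(Duration slowCallDurationThreshold) {
        this.slowCallDurationThreshold = slowCallDurationThreshold;
    }

    boolean isSlow(Duration elapsed) {
        return elapsed.compareTo(slowCallDurationThreshold) > 0; // strictly longer than the limit
    }

    /** A call counts against the breaker if it failed outright or took too long. */
    boolean countsAsFailure(boolean succeeded, Duration elapsed) {
        return !succeeded || isSlow(elapsed);
    }
}
```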

7.3. Sliding Window Size

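If set to 10, a single failure already means a 10% failure rate, and a handful of transient failures (jitter) can push the rate past a 50% threshold. This might be too sensitive.

If set to 100, it takes more calls, and therefore more time on low-traffic services, to detect a failure.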

Start with 50-100 for high-throughput services.

8. Testing Chaos

How do you know if your Circuit Breaker actually works? Use Chaos Engineering.

Tools like Gremlin or Chaos Mesh can artificially inject latency or errors into your network.

Run a "Game Day" where you intentionally slow down the Inventory Service and observe the Order Service.

Does the Circuit Breaker trip? Does the Fallback trigger? Or does the whole system hang?

It is better to find out in testing than in production on Black Friday.
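For unit tests, a crude in-process version of the same idea is a wrapper that randomly delays or fails a call. This hypothetical `FaultInjector` is not how Gremlin or Chaos Mesh work (they inject faults at the network or infrastructure level); it only lets you check, inside a test, that the breaker and fallback react the way you expect. A fixed seed keeps runs reproducible.

```java
import java.util.Random;
import java.util.function.Supplier;

// Hypothetical in-process fault injector for tests. Real chaos tools such as
// Gremlin or Chaos Mesh inject faults at the network/infrastructure level instead.
class FaultInjector {
    private final double errorProbability;   // 0.0 to 1.0: chance of throwing
    private final double latencyProbability; // 0.0 to 1.0: chance of adding delay
    private final long latencyMillis;        // injected delay
    private final Random random;             // seeded so test runs are reproducible

    FaultInjector(double errorProbability, double latencyProbability,
                  long latencyMillis, long seed) {
        this.errorProbability = errorProbability;
        this.latencyProbability = latencyProbability;
        this.latencyMillis = latencyMillis;
        this.random = new Random(seed);
    }

    /** Decorates a call so that it is sometimes slow and sometimes fails. */
    <T> Supplier<T> wrap(Supplier<T> call) {
        return () -> {
            if (random.nextDouble() < latencyProbability) {
                try {
                    Thread.sleep(latencyMillis); // simulated network latency
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            }
            if (random.nextDouble() < errorProbability) {
                throw new IllegalStateException("injected fault");
            }
            return call.get();
        };
    }
}
```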

9. Conclusion

In a distributed system, failure is inevitable.

Hardware fails, networks have jitter, and people write bugs.

You cannot prevent failure, but you can manage it.

The Circuit Breaker pattern is essential for Resiliency.

It isolates the failing component, prevents cascading failures, and gives your system the breathing room it needs to heal itself.

Don't build Microservices without it.