Caching Strategies: Pattern Guide for High Performance

1. Why Do We Need Caching?

Imagine you run an e-commerce giant like Amazon.

Every time a user visits the homepage, if your server queries the Master Database to fetch the product list, user reviews, and recommendations, your database will melt down under the load of millions of requests per second.

Database calls are expensive. They involve disk I/O and network latency.

Caching is the art of storing frequently accessed data in a fast, temporary storage layer (usually RAM, like Redis or Memcached).

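Reading from RAM takes on the order of nanoseconds, while a disk read takes microseconds (SSD) to milliseconds (spinning disk). A networked cache like Redis adds a network round trip, so a lookup usually lands under a millisecond, which is still far cheaper than a typical database query.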

But using a cache introduces complexity: "How do we keep the Cache and the Database in sync?"

If the cache has old data (Stale Data), customers might buy an out-of-stock item.

2. Reading Patterns

2.1. Cache-Aside (Lazy Loading)

This is the most common pattern.

- Application receives a request for Item A.

- It checks the Cache.

- Hit: Returns data immediately.

- Miss: Application queries the Database, gets the data, returns it to the user, and populates the Cache for next time.

- Pros: Resilient to cache failure. If Redis dies, the app can still fetch from DB (though slower). Control stays in the application.

- Cons: Latency penalty on the first request (Cache Miss). Risk of stale data if DB is updated but Cache isn't.
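The flow above can be sketched in a few lines. This is a minimal illustration, assuming plain Python dicts stand in for Redis and the database; `get_item`, `query_database`, and `db_calls` are made-up names, not part of any library.

```python
from typing import Optional

# Hypothetical stand-ins: one dict plays the database, another plays the cache.
DATABASE = {"item-a": "Blue Widget"}
cache = {}
db_calls = []  # records every trip to the "database", for illustration only


def query_database(key: str) -> Optional[str]:
    db_calls.append(key)
    return DATABASE.get(key)


def get_item(key: str) -> Optional[str]:
    # 1. The application checks the cache first.
    if key in cache:
        return cache[key]  # Hit
    # 2. Miss: the application itself queries the database.
    value = query_database(key)
    # 3. Populate the cache for next time.
    if value is not None:
        cache[key] = value
    return value


first = get_item("item-a")   # miss: reads the database
second = get_item("item-a")  # hit: served from the cache
```

Note that the application owns every step here. If the cache lookup were to fail, the function could skip straight to `query_database`, which is where the resilience mentioned above comes from.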

2.2. Read-Through

Similar to Cache-Aside, but the application only talks to the Cache.

The Cache library/provider is responsible for fetching data from the DB if it's missing.

- Pros: Simplifies application code (Transparent sourcing).

- Cons: Not all caching solutions support this out-of-the-box.
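To contrast with Cache-Aside, here is a rough sketch of Read-Through. The `ReadThroughCache` class and its `loader` parameter are hypothetical; real providers expose this idea under their own APIs. The point is only that the caller never touches the database directly.

```python
from typing import Callable, Dict, Optional


class ReadThroughCache:
    """Cache that loads missing keys itself through a loader function."""

    def __init__(self, loader: Callable[[str], Optional[str]]) -> None:
        self._loader = loader
        self._store: Dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        if key not in self._store:
            # The cache, not the caller, goes to the database on a miss.
            value = self._loader(key)
            if value is None:
                return None
            self._store[key] = value
        return self._store[key]


DATABASE = {"item-a": "Blue Widget"}  # hypothetical backing store
loader_calls = []


def load_from_db(key: str) -> Optional[str]:
    loader_calls.append(key)
    return DATABASE.get(key)


products = ReadThroughCache(load_from_db)
first = products.get("item-a")   # miss: the cache calls the loader
second = products.get("item-a")  # hit: the loader is not called again
```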

3. Writing Patterns

3.1. Write-Back (Write-Behind)

Performance is priority #1.

- Application writes data to the Cache only.

- Returns success to user immediately.

- The Cache asynchronously writes the data to the Database later (e.g., in batches, every 5 seconds).

- Best Use Case: Write-heavy workloads where slight data loss is acceptable (e.g., YouTube view counts, Analytics logs).

- Danger: High risk of data loss. If the Cache server crashes before syncing to DB, the data is gone forever.
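A small sketch of the idea, assuming an in-process cache and a dict as the database. `WriteBackCache` and `flush` are illustrative names; in a real system a timer or background worker would call `flush` periodically rather than the caller.

```python
class WriteBackCache:
    """Acknowledges writes from memory and persists them later in batches."""

    def __init__(self, database: dict) -> None:
        self._database = database
        self._store = {}
        self._dirty = set()  # keys written to the cache but not yet to the DB

    def put(self, key, value) -> None:
        self._store[key] = value
        self._dirty.add(key)  # success is reported before the DB sees the write

    def get(self, key):
        return self._store.get(key)

    def flush(self) -> int:
        """Write every pending key to the database in one batch."""
        batch = {key: self._store[key] for key in self._dirty}
        self._database.update(batch)
        self._dirty.clear()
        return len(batch)


database = {}
views = WriteBackCache(database)
views.put("video-1", 100)
views.put("video-1", 101)          # repeated writes collapse into one pending write
db_before_flush = dict(database)   # still empty: nothing has been persisted yet
flushed = views.flush()
```

Everything sitting in `_dirty` at the moment of a crash is exactly the data that would be lost.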

3.2. Write-Through

Data consistency is priority #1.

- Application writes to the Cache.

- Cache writes synchronously to the Database.

- Returns success only when both are complete.

- Best Use Case: Critical data where consistency is key (e.g., Financial transactions).

- Pros: The cache stays in sync with the DB, as long as every write goes through it. Data is safe in DB.

- Cons: Highest latency for write operations (writing to two places).
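As a sketch, Write-Through can look like the class below. `WriteThroughCache` is a hypothetical name, and writing the database before the in-memory copy is a design choice made for this example (so a failed DB write never leaves the cache ahead of the database), not something the pattern mandates.

```python
class WriteThroughCache:
    """Every write goes to the database synchronously, then to the cache."""

    def __init__(self, database: dict) -> None:
        self._database = database
        self._store = {}

    def put(self, key, value) -> None:
        # Assumption for this sketch: persist first, then update the cache.
        # If the database write raised, the cache would be left untouched.
        self._database[key] = value
        self._store[key] = value
        # Only now does the caller get control back ("success").

    def get(self, key):
        return self._store.get(key)


ledger_db = {}
ledger = WriteThroughCache(ledger_db)
ledger.put("acct-1", 250)
```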

3.3. Write-Around

Optimize the cache for reading.

- Application writes directly to the Database, bypassing the cache.

- Cache is only populated when the data is Read (via Cache-Aside or Read-Through).

- Best Use Case: Data that is written once but rarely read immediately (e.g., Archival data, Log storage).

- Pros: Prevents "Cache Pollution" (filling cache with useless data).
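A minimal sketch of Write-Around paired with a Cache-Aside read path, using dicts as stand-ins. The function names are invented for illustration. Dropping any existing cached copy on write is an extra step assumed here to avoid serving a stale value; the pattern description above does not require it.

```python
database = {}
cache = {}


def write_log(key: str, value: str) -> None:
    database[key] = value   # write goes straight to the database
    cache.pop(key, None)    # assumption: discard a stale cached copy, if any


def read_log(key: str):
    if key in cache:
        return cache[key]
    value = database.get(key)
    if value is not None:
        cache[key] = value  # the cache is populated only on read
    return value


write_log("log-1", "started")
cached_after_write = "log-1" in cache   # False: the write bypassed the cache
value = read_log("log-1")
cached_after_read = "log-1" in cache    # True: the read populated it
```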

4. The Three Cache Nightmares

These are advanced concepts essential for Senior Engineering interviews.

4.1. Cache Penetration

The Problem: A malicious user requests a Key that does not exist in the DB (e.g., user_id = -1).

Since it's not in the DB, it's never added to the Cache. So every request goes straight to the DB.

The attacker spams this to crash your DB.

The Solution:

- Cache Null Values: If DB returns "Not Found", store key: -1, value: null in Cache with a short TTL (e.g., 5 min).

- Bloom Filters: Use a Bloom Filter to quickly check if an ID might exist. If it definitely doesn't, reject the request before hitting the storage.
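Both defenses can be combined in one lookup path. The sketch below is illustrative only: the tiny `BloomFilter` class is hand-rolled for the example (production systems would normally use a tested library or a Redis module), and `get_user`, `NOT_FOUND`, and the sizes chosen are assumptions.

```python
import hashlib
import time


class BloomFilter:
    """Toy Bloom filter: may report false positives, never false negatives."""

    def __init__(self, size_bits: int = 1024, num_hashes: int = 3) -> None:
        self._size = size_bits
        self._num_hashes = num_hashes
        self._bits = bytearray(size_bits)  # one byte per bit, for readability

    def _positions(self, item: str):
        for seed in range(self._num_hashes):
            digest = hashlib.sha256(f"{seed}:{item}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self._size

    def add(self, item: str) -> None:
        for pos in self._positions(item):
            self._bits[pos] = 1

    def might_contain(self, item: str) -> bool:
        return all(self._bits[pos] for pos in self._positions(item))


DATABASE = {"1": "alice", "2": "bob"}  # hypothetical user table
known_ids = BloomFilter()
for user_id in DATABASE:
    known_ids.add(user_id)

NOT_FOUND = object()      # sentinel meaning "the DB has no such row"
NULL_TTL_SECONDS = 300    # short TTL for cached misses (5 min)
cache = {}                # key -> (value, expires_at)
db_calls = []


def get_user(user_id: str):
    if not known_ids.might_contain(user_id):
        return None  # definitely absent: rejected before cache or DB
    entry = cache.get(user_id)
    if entry is not None and entry[1] > time.monotonic():
        return None if entry[0] is NOT_FOUND else entry[0]
    db_calls.append(user_id)
    value = DATABASE.get(user_id)
    if value is None:
        # Cache the miss so repeated requests stop reaching the DB.
        cache[user_id] = (NOT_FOUND, time.monotonic() + NULL_TTL_SECONDS)
    else:
        cache[user_id] = (value, float("inf"))
    return value
```

The null entry matters for keys the filter lets through, such as false positives or rows deleted after the filter was built.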

4.2. Cache Breakdown (Thundering Herd)

The Problem: A "Hot Key" (very popular item) expires at 12:00:00.

At 12:00:01, 10,000 users request that key.

Since it's missing in Cache, all 10,000 requests hit the DB at once.

The Solution:

- Mutex Locks: When a Cache Miss occurs, try to acquire a lock. Only the winner goes to DB; the rest wait for the cache to update.

- Soft TTL: Store the expiry time inside the value. If it's "about to expire", let one background thread update it while serving the old value to others.
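The mutex approach can be sketched with a lock and a second cache check inside it. This is a single-process illustration using `threading.Lock`; across several application servers you would need a distributed lock instead. All names here are hypothetical.

```python
import threading

cache = {}
db_calls = []
rebuild_lock = threading.Lock()


def load_from_db(key: str) -> str:
    db_calls.append(key)  # pretend this is the expensive query
    return f"value-for-{key}"


def get_hot_key(key: str) -> str:
    value = cache.get(key)
    if value is not None:
        return value
    with rebuild_lock:
        # Re-check inside the lock: another thread may have refilled
        # the cache while this one was waiting.
        value = cache.get(key)
        if value is None:
            value = load_from_db(key)  # only the lock winner gets here
            cache[key] = value
        return value


# 50 concurrent requests for a key that has just expired.
threads = [threading.Thread(target=get_hot_key, args=("hot",)) for _ in range(50)]
for t in threads:
    t.start()
for t in threads:
    t.join()
```

A single global lock keeps the example short; a per-key lock would avoid making unrelated keys wait on each other.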

4.3. Cache Avalanche

The Problem: You restart your server or cache nodes, and all keys are empty. Or you set the TTL of all keys to 1 hour, and at 1:00 PM, everything expires simultaneously.

Massive spike in DB load.

The Solution:

- Randomize TTL: Don't set TTL to exactly 60 minutes. Set it to 60 min + random(0~5 min). This spreads out the expiration times.

- High Availability: Use Redis Cluster / Sentinel to prevent a single node failure from wiping out the whole cache.
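The randomized TTL is a one-liner in practice. A small sketch, with the constant names and `jittered_ttl` chosen just for this example:

```python
import random

BASE_TTL_SECONDS = 60 * 60      # 60 minutes
MAX_JITTER_SECONDS = 5 * 60     # up to 5 extra minutes


def jittered_ttl() -> int:
    """Return the base TTL plus a random offset between 0 and 5 minutes."""
    return BASE_TTL_SECONDS + random.randint(0, MAX_JITTER_SECONDS)


# Keys written at the same moment now expire at different times.
ttls = [jittered_ttl() for _ in range(1000)]
```

The returned value would be passed as the expiry whenever a key is written to the cache.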

5. Eviction Policies: What to Delete?

Memory is finite. When your Redis is full, you must decide what to delete (evict).

- LRU (Least Recently Used): Delete items that haven't been used for the longest time. This is the most popular strategy. It assumes that if you read it recently, you will read it again soon.

- LFU (Least Frequently Used): Delete items that are rarely used (low count). Good for items that are accessed in a stable pattern.

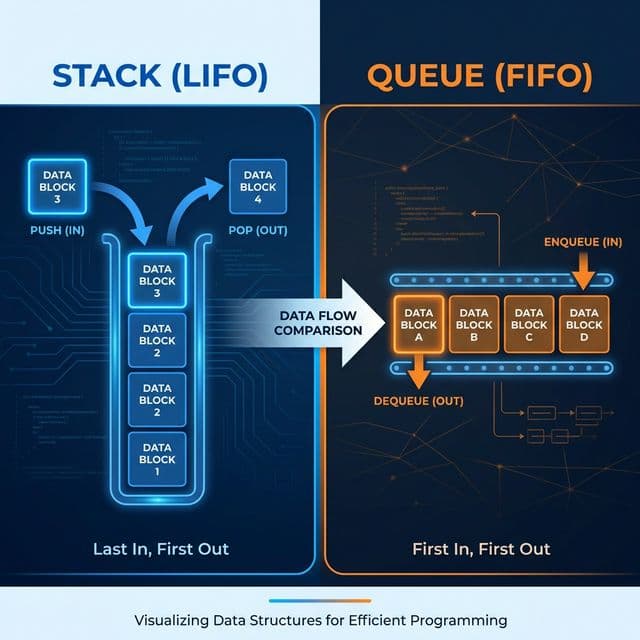

- FIFO (First In, First Out): Delete the oldest items first, regardless of usage.
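To make LRU concrete, here is a compact sketch built on `collections.OrderedDict`. The `LRUCache` class is illustrative and is not how Redis implements eviction internally.

```python
from collections import OrderedDict


class LRUCache:
    """Evicts the least recently used key once capacity is exceeded."""

    def __init__(self, capacity: int) -> None:
        self._capacity = capacity
        self._items = OrderedDict()  # oldest access first, newest last

    def get(self, key):
        if key not in self._items:
            return None
        self._items.move_to_end(key)  # a read counts as a use
        return self._items[key]

    def put(self, key, value) -> None:
        self._items[key] = value
        self._items.move_to_end(key)
        if len(self._items) > self._capacity:
            self._items.popitem(last=False)  # drop the least recently used

    def keys(self):
        return list(self._items)


lru = LRUCache(capacity=2)
lru.put("a", 1)
lru.put("b", 2)
lru.get("a")      # "a" is now the most recently used
lru.put("c", 3)   # over capacity: "b" is evicted, not "a"
```

Under FIFO the same sequence would evict "a" instead, because "a" was inserted first regardless of the later read.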

6. Distributed vs Local Caching

When designing a system, you have to decide where to put the cache.

6.1 Local Caching (In-Memory)

The cache sits inside your application process (e.g., ConcurrentHashMap in Java, or lru-cache npm package in Node.js).

- Pros: Zero network latency. It is the fastest possible cache.

- Cons:

  - Data Inconsistency: If you have 3 server instances, each keeps its own copy, so Server A might have key=1 while Server B has key=2.

  - Memory Limit: Limited by the server's heap size.

  - Loss on Restart: If the instance restarts, cache is gone.

6.2 Distributed Caching (Remote)

The cache sits on a separate server (e.g., Redis, Memcached).

- Pros:

  - Consistency: All server instances see the same data.

  - Scalability: You can add more Redis nodes to handle terabytes of data.

  - Persistence: Redis can save data to disk.

- Cons: Network latency (though usually < 1ms).

Best Practice: Use a Two-Level Cache. Use a small Local Cache for static configuration data (super fast), and a Distributed Cache for shared user data.
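One common way to wire two levels together is a layered lookup: local first, then remote. The sketch below assumes a dict stands in for the distributed cache, and the 30-second local TTL is an arbitrary value chosen to bound how long an instance can serve an outdated copy. All names are hypothetical.

```python
import time

LOCAL_TTL_SECONDS = 30.0   # assumed value; limits staleness of local copies

local_cache = {}                          # key -> (value, expires_at)
remote_cache = {"config:theme": "dark"}   # stand-in for Redis or Memcached
remote_calls = []


def remote_get(key: str):
    remote_calls.append(key)  # in a real system this is a network round trip
    return remote_cache.get(key)


def get(key: str):
    entry = local_cache.get(key)
    if entry is not None and entry[1] > time.monotonic():
        return entry[0]       # level 1 hit: no network involved
    value = remote_get(key)   # level 2: shared distributed cache
    if value is not None:
        local_cache[key] = (value, time.monotonic() + LOCAL_TTL_SECONDS)
    return value


first = get("config:theme")    # goes to the remote cache
second = get("config:theme")   # served from local memory
```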

7. Redis vs Memcached

Often asked: "Which one should I use?"

- Memcached:

  - Simple Key-Value store.

  - Multi-threaded (good for scaling up).

  - No persistence (purely in-memory).

- Redis:

  - Supports complex data structures (Lists, Sets, Sorted Sets, Hashes).

  - Single-threaded command execution (but extremely optimized; Redis 6+ can use extra threads for network I/O).

  - Persistence (RDB, AOF).

  - Pub/Sub, Lua Scripting, Geo-spatial support.

Verdict: As of 2024, Redis is the de facto default for most use cases. Use Memcached only if you have a very specific legacy requirement for multi-threading optimization on a single massive node.

8. Summary Table

| Pattern | Read Speed | Write Speed | Consistency | Data Safety |
|---|---|---|---|---|
| Cache-Aside | Medium (Miss penalty) | Fast (DB direct) | Low (Stale risk) | High |
| Read-Through | Medium | N/A | Low | High |
| Write-Back | Fast | Fastest | Low | Low (Risk) |
| Write-Through | Fast | Slow | High | High |

Choosing a strategy is about tradeoffs.

For a social media feed? Cache-Aside + Random TTL.

For a view counter? Write-Back.

For a bank ledger? Write-Through with a strictly consistent cache, or no cache at all.

Design wisely!