BERT vs GPT: Two Faces of AI (Understanding vs Generation)

Both are children of Transformer, so why the difference? Using 'Fill-in-the-blank' vs 'Write-next-word' analogies to explain BERT vs GPT. Practical guide based on trial and error.

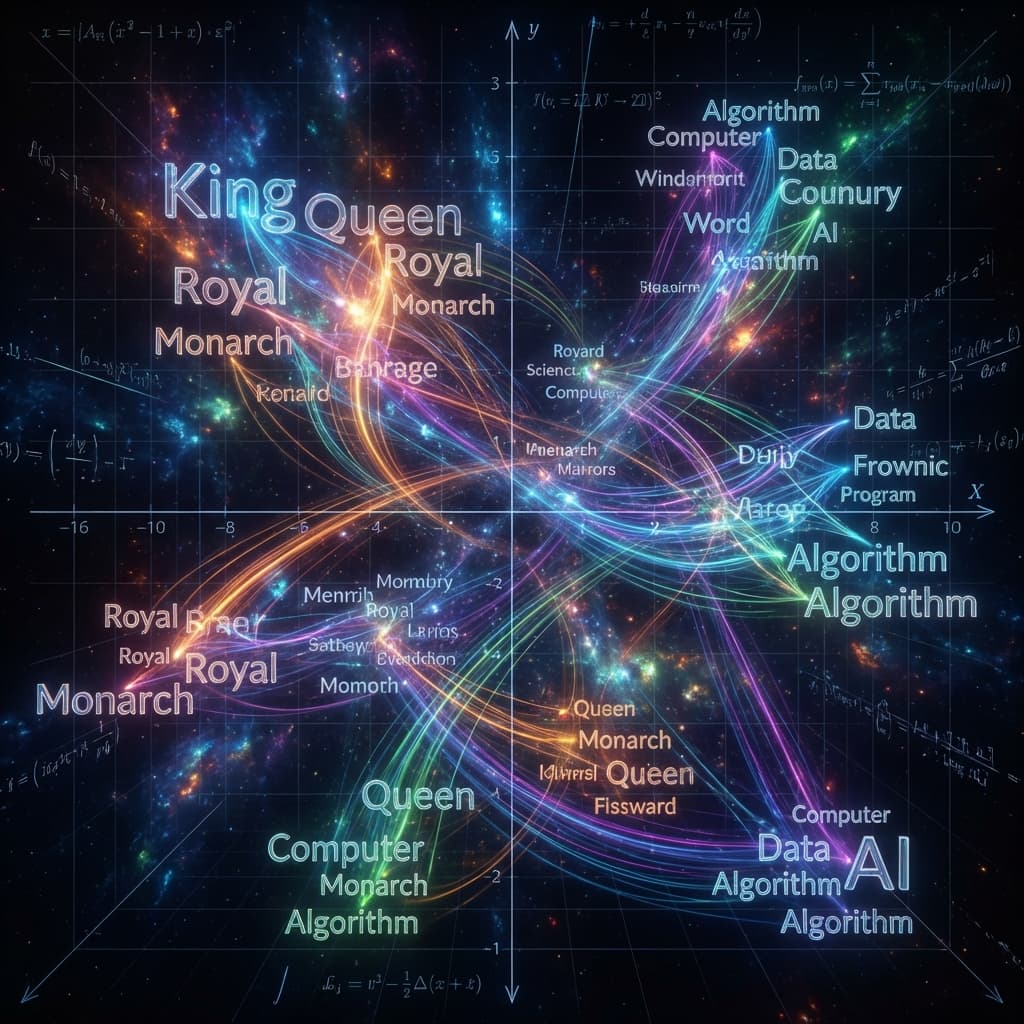

When I first took on an NLP project, the most common advice I heard was: "Use BERT for text classification, and use GPT for text generation." I questioned it. "They are both based on Google's Transformer architecture, so why are their uses so polarized?"

Out of curiosity, I tried the opposite. I used GPT-2 for sentiment analysis (classification) and BERT for sentence generation. The results were disastrous. BERT churned out jumbled nonsense, and GPT's classification accuracy was all over the place.

That's when I realized: "Ah, their brains are wired differently from birth." BERT was born to Understand, and GPT was born to Create (Generate). Knowing this difference clearly is the starting point of AI Engineering.

The most confusing part was the terminology: "Bidirectional vs Unidirectional". "I get that they read text in different directions, but why does that create a difference in intelligence?"

Also, the role division between "Encoder" and "Decoder" was confusing. I knew that in a translator model (Transformer), the Encoder reads the source sentence and the Decoder spits out the translation. But I couldn't imagine what would happen if you ripped them apart.

The decisive analogy was "Types of Exam Questions".

"... [MASK]. It was red and sweet." Here, [MASK] is "apple".

BERT consists only of the Encoder stack from the Transformer model. The role of the Encoder is to compress information into numbers (a vector, or embedding).

GPT consists only of the Decoder stack from the Transformer model. The role of the Decoder is to spit things out.
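To make the two "exam question" styles concrete, here is a minimal sketch in plain Python of how each kind of training question could be built from one sentence. The helper names (`masked_lm_example`, `causal_lm_examples`) and the example sentence are illustrative assumptions, and the masking is simplified: real BERT pre-training masks a random subset of tokens rather than one hand-picked position.

```python
MASK = "[MASK]"

def masked_lm_example(tokens, position):
    """BERT-style question: hide one token, keep the context on both sides."""
    inputs = tokens[:position] + [MASK] + tokens[position + 1:]
    return inputs, tokens[position]

def causal_lm_examples(tokens):
    """GPT-style questions: for each prefix, the answer is the next token."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

# Hypothetical example sentence, already split into tokens.
tokens = ["I", "ate", "an", "apple", "yesterday"]

bert_inputs, bert_target = masked_lm_example(tokens, 3)
print("Fill-in-the-blank:", bert_inputs, "->", bert_target)

gpt_pairs = causal_lm_examples(tokens)
for prefix, target in gpt_pairs:
    print("Write-next-word:", prefix, "->", target)
```

The fill-in-the-blank question keeps "yesterday" in view, while every write-next-word question only ever shows what came before the answer.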

The fundamental architectural difference lies in Attention Masking.

BERT uses "No Masking" in its self-attention mechanism (except for padding). This means when it looks at the word "Apple" in "I ate an Apple yesterday," it can simultaneously see "I", "ate", "an", and "yesterday". It creates a Contextual Embedding by aggregating information from all directions. This is why it knows that "Apple" here acts as an object of "ate" and is likely a fruit.

GPT uses "Causal Masking" (or Look-ahead Masking). This is a triangular mask that prevents the model from seeing future tokens. When predicting "Apple" in "I ate an [?]", it can ONLY see "I", "ate", and "an". It is physically blinded to the word "yesterday". This constraint forces the model to become an expert at probability and prediction rather than holistic understanding. This masking is what decides their destiny as a Writer vs an Analyst.
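The two masking schemes described above can be sketched as small 0/1 matrices. This is a plain-Python illustration, not how any library builds its masks internally; the function names `attention_mask` and `visible` are assumptions made for the example, and padding is ignored.

```python
def attention_mask(seq_len, causal):
    """mask[i][j] is 1 if position i may attend to position j, else 0."""
    return [
        [1 if (not causal or j <= i) else 0 for j in range(seq_len)]
        for i in range(seq_len)
    ]

def visible(tokens, mask, i):
    """Tokens that position i is allowed to look at."""
    return [tok for tok, allowed in zip(tokens, mask[i]) if allowed]

tokens = ["I", "ate", "an", "Apple", "yesterday"]
bert_mask = attention_mask(len(tokens), causal=False)  # full, bidirectional
gpt_mask = attention_mask(len(tokens), causal=True)    # lower-triangular

# BERT: the position holding "Apple" sees the whole sentence.
print(visible(tokens, bert_mask, tokens.index("Apple")))

# GPT: "Apple" is predicted from the position of "an", which sees only the past.
print(visible(tokens, gpt_mask, tokens.index("an")))

for row in gpt_mask:
    print(row)
```

Printing `gpt_mask` row by row shows the triangle: each row gains one more visible position than the row above it.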

Choice: BERT, because you need to read the entire review to judge it.

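```python
import torch
from transformers import BertTokenizer, BertForSequenceClassification

# BERT encodes the whole sentence at once, attending in both directions.
tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')
# Note: 'bert-base-uncased' has no trained classification head, so the head is
# randomly initialized here. Fine-tune on labeled reviews (or load a sentiment
# checkpoint) before trusting the predictions.
model = BertForSequenceClassification.from_pretrained('bert-base-uncased')
model.eval()

text = "The movie was not bad at all."
inputs = tokenizer(text, return_tensors="pt")

with torch.no_grad():  # inference only, no gradients needed
    outputs = model(**inputs)

# Turn the logits into class probabilities (num_labels defaults to 2).
probs = outputs.logits.softmax(dim=-1)
# Once fine-tuned, BERT can relate 'not' to 'bad' and classify this as 'Positive'.
```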

Choice: GPT, because the goal is natural sentence generation.

```python
from transformers import GPT2LMHeadModel, GPT2Tokenizer

tokenizer = GPT2Tokenizer.from_pretrained('gpt2')
model = GPT2LMHeadModel.from_pretrained('gpt2')

prompt = "Subject: Meeting Invitation\nHi Team, I would like to"
inputs = tokenizer(prompt, return_tensors="pt")

# Generate the next words. Temperature controls creativity, but it only takes
# effect when sampling is enabled (do_sample=True).
outputs = model.generate(
    **inputs,
    max_length=50,
    do_sample=True,
    temperature=0.7,
    pad_token_id=tokenizer.eos_token_id,  # GPT-2 has no dedicated pad token
)

print(tokenizer.decode(outputs[0], skip_special_tokens=True))
```
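What the temperature setting does to the next-word probabilities can be shown with a few lines of arithmetic. The sketch below is a plain-Python softmax, not the `transformers` implementation, and the candidate words and their scores are made-up values for illustration only. In `transformers`, temperature only matters when `generate` is called with `do_sample=True`.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Divide logits by the temperature, then normalize into probabilities."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical next-word candidates and raw scores (not real GPT-2 output).
candidates = ["invite", "discuss", "banana"]
logits = [2.0, 1.5, -1.0]

for temperature in (0.7, 1.0, 1.5):
    probs = softmax_with_temperature(logits, temperature)
    print(temperature, dict(zip(candidates, (round(p, 3) for p in probs))))
```

A temperature below 1 sharpens the distribution toward the top candidate, so the output is safer and more repetitive. A temperature above 1 flattens it, so unlikely words get picked more often.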

Since 2023, with the era of LLMs (Large Language Models), the boundary is fading.

However, the distinction is still valid in terms of Cost/Performance. Using a massive GPT-4 for simple classification is like using a sledgehammer to crack a nut. A fast, lightweight DistilBERT can be much more efficient.

BERT can generate text (via Gibbs Sampling, etc.), but it's incredibly slow and unnatural. It fills one blank, resets, fills another, and so on. It's the peak of inefficiency.
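The "fill one blank, reset, fill another" loop can be sketched as follows, mainly to count how many model calls it takes. This is a toy under loud assumptions: `toy_fill` is a hypothetical stand-in for a masked language model (it ignores the context and returns a fixed word per position), and the sweep count is arbitrary.

```python
import random

MASK = "[MASK]"

def toy_fill(tokens, position):
    """Hypothetical stand-in for a masked LM filling one blank.
    A real model would score its vocabulary using left and right context."""
    vocab = ["the", "cat", "sat", "down"]
    return vocab[position % len(vocab)]

def gibbs_style_generate(length, sweeps, seed=0):
    rng = random.Random(seed)
    tokens = [MASK] * length          # start from a fully blank sentence
    forward_passes = 0
    for _ in range(sweeps):
        order = list(range(length))
        rng.shuffle(order)            # visit positions in random order
        for pos in order:
            tokens[pos] = MASK        # reset one position to a blank
            tokens[pos] = toy_fill(tokens, pos)  # refill it given the rest
            forward_passes += 1       # each refill costs one model call
    return tokens, forward_passes

tokens, passes = gibbs_style_generate(length=4, sweeps=3)
print(tokens, "model calls:", passes)
```

The cost is `length * sweeps` model calls, and each call re-reads the whole sentence. A decoder like GPT needs one call per generated token and never revisits a position.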

GPT hallucinates because it is trained to say "the plausible next word," not "the truth." It spits out a highly probable word for that context without verifying whether it is a fact. (RAG technology rose to fix this.)

As a developer, remember this criterion when choosing an AI model:

Of course, the "Smart Writer (GPT-4)" is threatening the Analyst's job these days, but the moment for a dedicated Analyst (BERT) still comes (Speed, Security, Cost). Knowing your tools and using them in the right place is the mark of a true engineer.