Transformer: Foundation of Modern AI

Understanding Transformer architecture through practical experience

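Do you remember the news in late 2016 that Google Translate suddenly became so smart that people jokingly suspected they were torturing aliens for technology? Before that, even a simple sentence meaning "The father enters the room" came out as broken English. Suddenly, it started spitting out fluent, natural sentences. That 2016 leap came from Google's neural machine translation system, which was still built on LSTMs with an attention mechanism added on top. The Transformer, published the following year, took that attention idea and made it the entire architecture.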

Why "Transformer"? It's not about the robots in disguise. The name fits what the model does: it transforms one sequence of symbols into another (e.g., French to English), and it does so faster and more accurately than what came before. Until then, sequence transduction was dominated by Recurrent Neural Networks (RNNs) and, to a lesser extent, Convolutional Neural Networks (CNNs). The Transformer dropped both recurrence and convolution and relied on attention alone.

At the time, I was building a chatbot using LSTM (Long Short-Term Memory) at work, struggling with the issue that it turned into an idiot whenever the input sentence got long. "It just forgets everything mentioned at the beginning." A senior colleague told me, "Hey, Google published a paper. 'Attention Is All You Need'. Check it out."

The title was provocative, and that paper completely changed my AI career.

When I first opened the paper, I was bewildered.

Until then, the default tool for NLP (Natural Language Processing) was the RNN. To process "I go to school", you naturally read it in the order "I" -> "go" -> "to" -> "school", right? But the Transformer said, "Who needs to read in order? Just feed in the whole sentence at once (Parallel)." My first reaction was, "Wait, how can language exist without order?"

"Q, K, V... Query, Key, Value?"

Are these database terms? Why are they here?

The formula was alien stuff like softmax(QK^T / sqrt(d_k)) V, and I couldn't intuitively grasp how this led to "understanding context".

The analogies that finally made it click were "Blind Date" and "Library Search".

Imagine a meeting (sentence) with several people. "Cheolsu gave Yeonghui an apple."

Each word exchanges glances (Attention) asking, "Who is related to me?" It doesn't matter how far apart they are. You just have to look. This was the secret to breaking RNN's limitation (inability to remember distant words).

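'Cheolsu' (Query) scans the entire sentence and compares itself against every word's Key, like trying one key in every keyhole. The best fit is 'gave', so 'Cheolsu' pulls in mostly that word's meaning (Value), plus a little from every other word in proportion to how well each one matched, to reinforce its own meaning. In the end, 'Cheolsu' is no longer just 'Cheolsu', but is reborn as a vector with rich context: 'Cheolsu who gave an apple to Yeonghui'.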
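To make the glance-exchanging concrete, here is a minimal sketch of scaled dot-product attention in plain Python, with no libraries. The 2-dimensional Q, K, V vectors are made up by hand for illustration (a real model learns them), the sentence is shortened to three words, and the helper names `softmax` and `attention` are my own.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention on plain lists. Returns (outputs, weights)."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # How well does this word's Query match every word's Key?
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        # The "glances": non-negative weights that sum to 1
        weights = softmax(scores)
        # New meaning = weighted average of every word's Value
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Hand-made toy vectors (assumption: illustrative only, not learned).
words = ["Cheolsu", "gave", "apple"]
Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
K = [[0.0, 1.0], [1.0, 0.0], [0.5, 0.5]]
V = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

outputs, weights = attention(Q, K, V)
for word, row in zip(words, weights):
    print(word, [round(w, 2) for w in row])
```

With these toy numbers, the row for 'Cheolsu' puts its largest weight on 'gave', but the other words still contribute a little. Attention is a soft blend, not a single lookup.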

The Transformer is broadly divided into an Encoder, which reads the input sentence, and a Decoder, which writes the output sentence. Let's take "Translating English to Korean" as an example.

Since the Transformer processes all words in parallel, attention by itself has no sense of order. (To it, "School I go to" and "I go to school" are the same bag of words.) So, we attach a position tag to each word: "You are No.1, you are No.2..." Mathematically, the tag is a vector of sine and cosine values at different frequencies, added to each word's embedding.
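The sine/cosine tagging can be sketched in a few lines of plain Python. This follows the formula from the original paper, PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)). The function name `positional_encoding` and the tiny sizes are my own choices for illustration.

```python
import math

def positional_encoding(seq_len, d_model):
    """Sinusoidal position table: one d_model-dimensional vector per position."""
    table = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Dimensions 2k and 2k+1 share one wavelength, so round i down to even
            angle = pos / (10000 ** ((i - i % 2) / d_model))
            # Even dimensions use sine, odd dimensions use cosine
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table

pe = positional_encoding(seq_len=4, d_model=8)
for pos, row in enumerate(pe):
    print(pos, [round(v, 3) for v in row])
```

Each position gets a distinct pattern, and the table is simply added element-wise to the word embeddings before the first attention layer.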

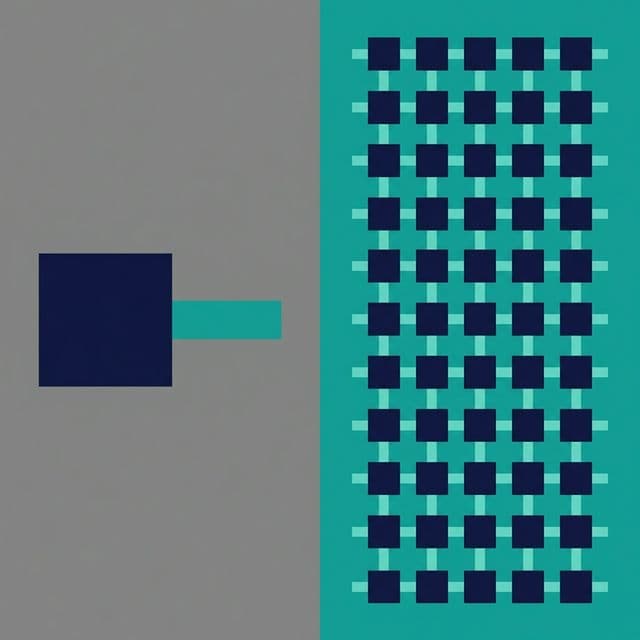

Using only one Attention narrows the field of view. So, we create 8 Eyes (Heads).

Each analyzes the sentence from a different perspective and combines the findings later. This is Multi-Head Attention.

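Information gained from Attention is added back to the original input (Residual Connection) and normalized. The result is then mixed further by a small per-word network (Feed Forward), which gets its own residual connection. "Original Me" + "Me who understood context" = "Smarter Me".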
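As a rough sketch of how these pieces stack into one encoder layer, here is a post-norm block in the style of the original paper, LayerNorm(x + Sublayer(x)). For brevity it swaps in PyTorch's built-in `nn.MultiheadAttention` instead of a hand-written attention, and it omits dropout. The class name `EncoderBlock` and the small sizes in the usage lines are my own assumptions.

```python
import torch
import torch.nn as nn

class EncoderBlock(nn.Module):
    """One encoder layer, post-norm style: LayerNorm(x + Sublayer(x))."""

    def __init__(self, d_model=512, num_heads=8, d_ff=2048):
        super().__init__()
        # Built-in attention, used here only to keep the sketch short
        self.attn = nn.MultiheadAttention(d_model, num_heads, batch_first=True)
        self.norm1 = nn.LayerNorm(d_model)
        # Position-wise feed forward: applied to each word independently
        self.ff = nn.Sequential(
            nn.Linear(d_model, d_ff),
            nn.ReLU(),
            nn.Linear(d_ff, d_model),
        )
        self.norm2 = nn.LayerNorm(d_model)

    def forward(self, x):
        # Self-attention: Query, Key, and Value all come from x
        attn_out, _ = self.attn(x, x, x)
        # "Original Me" + "Me who understood context"
        x = self.norm1(x + attn_out)
        # Feed forward with its own residual connection
        x = self.norm2(x + self.ff(x))
        return x

block = EncoderBlock(d_model=64, num_heads=8, d_ff=256)
x = torch.randn(2, 10, 64)   # (batch, seq_len, d_model)
y = block(x)
print(y.shape)  # torch.Size([2, 10, 64])
```

The output has the same shape as the input, which is what lets you stack several of these blocks on top of each other.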

Implementing the whole structure is complex, so let's look at the core Attention part.

```python
import math

import torch
import torch.nn as nn


class MultiHeadAttention(nn.Module):
    def __init__(self, d_model=512, num_heads=8):
        super().__init__()
        self.num_heads = num_heads
        self.d_k = d_model // num_heads  # Dimension of each head

        # Linear layers for Q, K, V, O
        self.W_q = nn.Linear(d_model, d_model)
        self.W_k = nn.Linear(d_model, d_model)
        self.W_v = nn.Linear(d_model, d_model)
        self.W_o = nn.Linear(d_model, d_model)

    def forward(self, x):
        batch_size, seq_len, d_model = x.shape

        # 1. Generate Q, K, V (Linear Projection)
        Q = self.W_q(x)
        K = self.W_k(x)
        V = self.W_v(x)

        # 2. Split Heads: (batch, seq_len, d_model) -> (batch, num_heads, seq_len, d_k)
        Q = Q.view(batch_size, seq_len, self.num_heads, self.d_k).transpose(1, 2)
        K = K.view(batch_size, seq_len, self.num_heads, self.d_k).transpose(1, 2)
        V = V.view(batch_size, seq_len, self.num_heads, self.d_k).transpose(1, 2)

        # 3. Calculate Attention Score (Scaled Dot-Product)
        # Multiply Q and K to find similarity
        scores = torch.matmul(Q, K.transpose(-2, -1)) / math.sqrt(self.d_k)

        # 4. Convert to probability with Softmax
        attention_weights = torch.softmax(scores, dim=-1)

        # 5. Multiply with Value to extract final info
        context = torch.matmul(attention_weights, V)

        # 6. Concat Heads: back to (batch, seq_len, d_model)
        context = context.transpose(1, 2).contiguous().view(batch_size, seq_len, d_model)
        output = self.W_o(context)

        return output
```

When I first wrote this code, I cried a lot because of dimension errors in the transpose and view calls.
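What eventually stopped the crying was printing the shape after every step. Below is a small shape trace of the split and merge operations on their own, assuming the same defaults as above (d_model=512, 8 heads). The batch size and sentence length are arbitrary values I picked.

```python
import torch

batch_size, seq_len, d_model, num_heads = 2, 5, 512, 8
d_k = d_model // num_heads  # 64 dimensions per head

x = torch.randn(batch_size, seq_len, d_model)

# Split: carve d_model into (num_heads, d_k), then move heads next to batch
heads = x.view(batch_size, seq_len, num_heads, d_k).transpose(1, 2)
print(heads.shape)   # torch.Size([2, 8, 5, 64])

# Scores: every head gets its own seq_len x seq_len table
scores = torch.matmul(heads, heads.transpose(-2, -1))
print(scores.shape)  # torch.Size([2, 8, 5, 5])

# Merge: undo the transpose, then flatten the heads back into d_model.
# transpose() returns a non-contiguous tensor, so contiguous() must come before view().
merged = heads.transpose(1, 2).contiguous().view(batch_size, seq_len, d_model)
print(merged.shape)  # torch.Size([2, 5, 512])

# Split followed by merge is a lossless round trip
assert torch.equal(merged, x)
```

Forgetting the `.contiguous()` call before `view` is a common way to hit a runtime error here.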

But once I saw it running, I got goosebumps thinking, "Wow, this really understands sentences just with matrix multiplications, without a single for-loop?" I understood why GPUs love it.

This single paper rewrote the history of AI.

In the end, we are living in the era of Transformers.

Transformer is a revolutionary architecture that grasps the relationships among all words in a sentence at once (Parallel) through a mechanism called 'Attention'. It addressed RNN's two chronic diseases, 'Amnesia' and 'Slowness', at the same time, and it is the ancestor of nearly all of today's Large Language Models (LLMs).