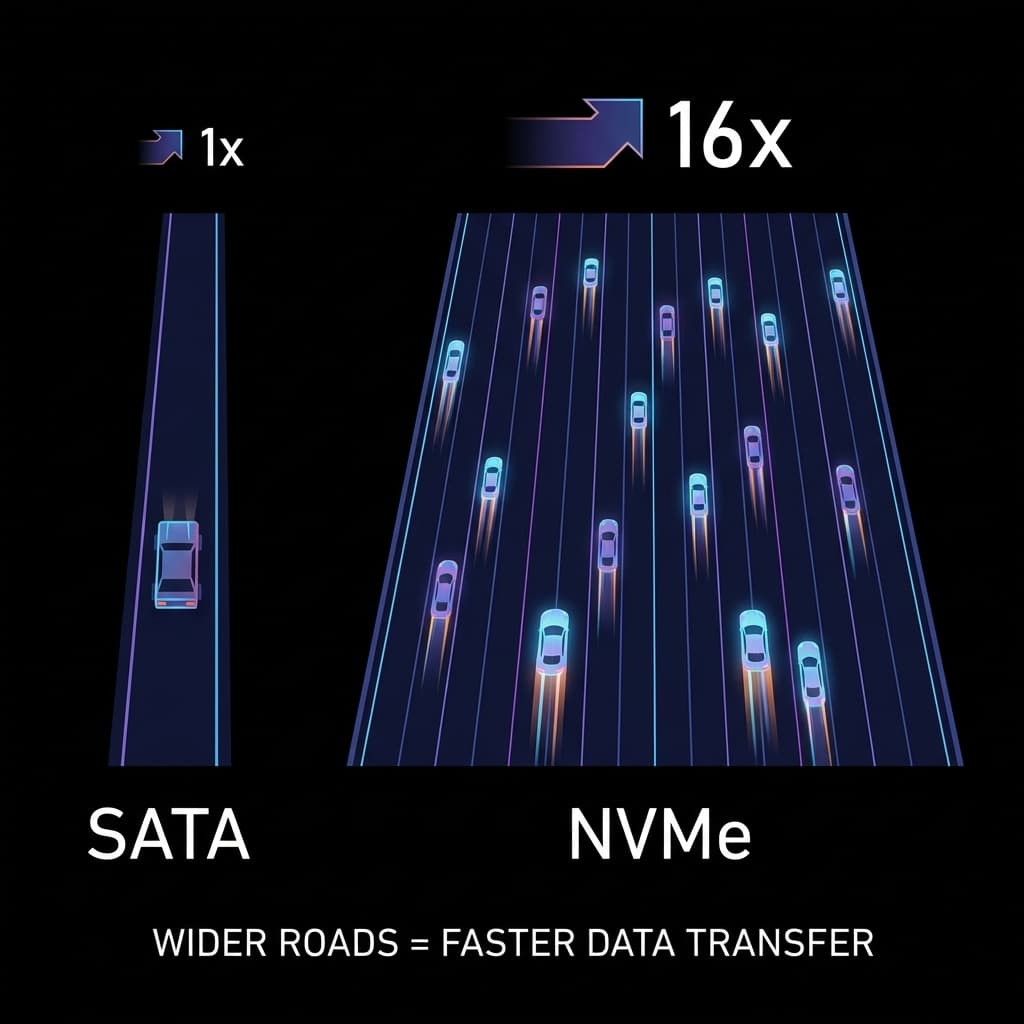

NVMe vs SATA: Wider Roads Are Better

Bought a fast SSD but it's still slow? One-lane country road (SATA) vs 16-lane highway (NVMe).

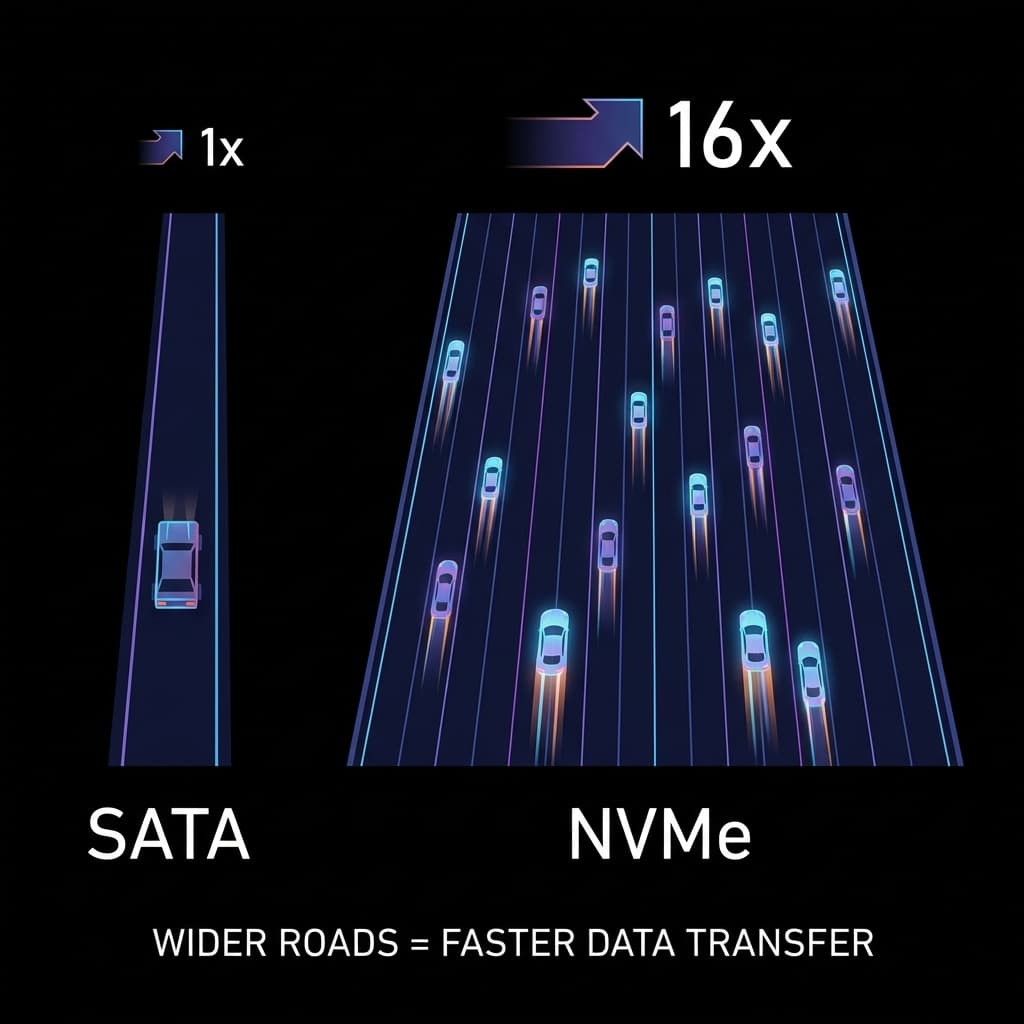

Why does MacBook battery last long? Should I use AWS Graviton? A deep dive into the philosophy of CISC (Complex) vs RISC (Simple) architectures.

The gold mine of AI era, NVIDIA GPUs. Why do we run AI on gaming graphics cards? Learn the difference between workers (CUDA) and matrix geniuses (Tensor Cores).

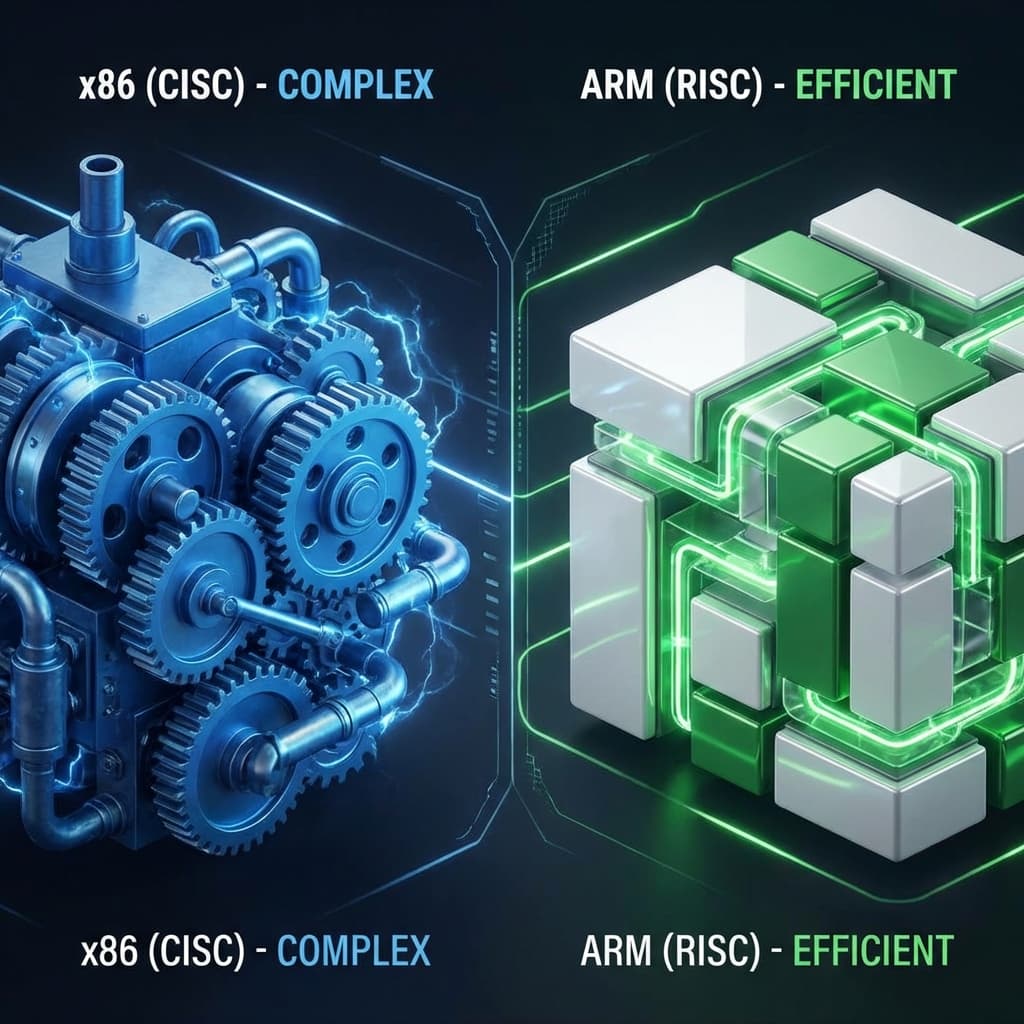

LP records vs USB drives. Why physically spinning disks are inherently slow, and how SSDs made servers 100x faster.

I thought the motherboard was just a board to plug things into. Then I learned what a chipset does.

My friend bought an external SSD, feeling excited. "This will make everything instant!" But after moving some files, he was disappointed. "It's not that fast."

Turns out, that SSD was connected via an old SATA cable. He bought a Ferrari (SSD) but was driving on a country road (SATA). If you want true speed, you need the Autobahn (NVMe).

I didn't get this at first either. "Aren't all SSDs fast?" Nope. I learned that the device's speed and the connection's speed are two separate things. If one is slow, the whole thing is slow.

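Storage performance is determined by two factors: the speed of the device itself (the SSD) and the speed of the interface that connects it to the rest of the system.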

Think of it like water pipes. Even if the water source (SSD) can send 10 liters per second, if the pipe (Interface) can only handle 500ml per second, you only get 500ml at the tap. That's a Bottleneck.
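The pipe analogy boils down to taking the minimum of two numbers. Here is a minimal sketch of that idea; the function name and the example figures are only illustrative, not measurements:

```python
def effective_throughput_mbps(device_mbps: float, interface_mbps: float) -> float:
    """Return the throughput you actually see: the slower of the two stages."""
    return min(device_mbps, interface_mbps)


# Flash that could stream 3,500 MB/s, sitting behind a 600 MB/s link
print(effective_throughput_mbps(3500, 600))  # 600
```

Upgrading the faster of the two stages changes nothing in this model, which is exactly what a bottleneck means.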

I experienced this on my server. I bought a fast SSD but database queries were still slow. Turns out, the server only supported SATA. Great hardware, but the connection was choking it.

SATA (Serial ATA) was built in the era of Hard Drives (HDDs). In the early 2000s, HDDs read data from spinning platters, which made them physically slow. The connection was designed to match that speed.

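SATA has gone through multiple versions: SATA 1.0 (1.5 Gbit/s, about 150MB/s), SATA 2.0 (3 Gbit/s, about 300MB/s), and SATA 3.0 (6 Gbit/s, about 600MB/s).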

SATA 3.0 is the most common today, and that's the ceiling. No matter how fast your SSD is, it's capped at 600MB/s.
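Where does 600MB/s come from? SATA 3.0 signals at 6 Gbit/s, and SATA uses 8b/10b encoding, so only 8 of every 10 bits on the wire are payload. A rough sketch of that arithmetic (the function name is made up for illustration, and protocol overhead beyond the line encoding is ignored):

```python
def sata_payload_mb_per_s(line_rate_gbit: float) -> float:
    """Usable MB/s for a SATA link, accounting only for 8b/10b line encoding."""
    payload_bits_per_s = line_rate_gbit * 1e9 * 8 / 10  # 8 data bits per 10 line bits
    return payload_bits_per_s / 8 / 1e6                 # bits -> bytes -> MB


for name, rate in [("SATA 1.0", 1.5), ("SATA 2.0", 3.0), ("SATA 3.0", 6.0)]:
    print(name, sata_payload_mb_per_s(rate))
```

Real drives land a little below these figures (around 550MB/s on SATA 3.0) because of command and framing overhead.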

I felt SATA's limits when editing 4K video. Loading video files from the SSD caused timeline stutters. NVMe drives of that generation could read at around 3,500MB/s, but anything on a SATA link tops out at 600MB/s.

That's when the 1-lane country road metaphor clicked for me. You've got a Ferrari that wants to fly, but the road forces you to crawl.

NVMe (Non-Volatile Memory Express) was designed from the ground up for flash memory (SSDs). If SATA is a legacy from the HDD era, NVMe is "SSD-native."

The key to NVMe is that it runs over the PCIe (Peripheral Component Interconnect Express) bus. SATA traffic goes through a separate SATA (AHCI) controller before it reaches the CPU, while an NVMe drive sits on PCIe lanes that, on most systems, are wired directly to the CPU.

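Think of it like a company org chart: with SATA, every report goes through a middle manager (the SATA controller) before it reaches the CEO (the CPU). With NVMe, you report to the CEO directly.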

Cutting out the middleman drastically reduces latency.

PCIe has generations too, and each generation doubles the speed.

| PCIe Gen | Per-Lane Speed | x4 Total Speed | Spec Released |
|---|---|---|---|
| PCIe 3.0 | ~1,000 MB/s | ~4,000 MB/s | 2010 |
| PCIe 4.0 | ~2,000 MB/s | ~8,000 MB/s | 2017 |
| PCIe 5.0 | ~4,000 MB/s | ~16,000 MB/s | 2019 |

Most consumer NVMe SSDs use PCIe 3.0 x4 or PCIe 4.0 x4. "x4" means 4 lanes. More lanes = more data transmitted simultaneously.
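The per-lane numbers can be reproduced from the raw signaling rates. The sketch below assumes the published transfer rates (8, 16, and 32 GT/s for PCIe 3.0, 4.0, and 5.0) and the 128b/130b line encoding those generations use; the names are illustrative and packet-level protocol overhead is left out:

```python
# Raw transfer rate per lane in GT/s (one bit per transfer)
PCIE_GT_PER_S = {"3.0": 8, "4.0": 16, "5.0": 32}


def pcie_bandwidth_gb_per_s(gen: str, lanes: int = 4) -> float:
    """Approximate one-direction bandwidth in GB/s for a PCIe link."""
    raw_gbit_per_s = PCIE_GT_PER_S[gen] * lanes
    payload_gbit_per_s = raw_gbit_per_s * 128 / 130  # 128b/130b encoding
    return payload_gbit_per_s / 8                    # bits -> bytes


for gen in PCIE_GT_PER_S:
    print(f"PCIe {gen} x4: {pcie_bandwidth_gb_per_s(gen):.2f} GB/s")
```

That works out to roughly 3.94, 7.88, and 15.75 GB/s for x4 links, which is where the rounded "4, 8, 16" figures come from.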

When I upgraded from PCIe 3.0 to PCIe 4.0, the difference hit me during large file copies. Copying a 50GB video file took 15 seconds on PCIe 3.0, and 7 seconds on PCIe 4.0. On paper it's double the speed, but in practice it's the difference between "wait a sec" and "instant."
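Those copy times line up with simple division. A back-of-the-envelope sketch, assuming sustained speeds of 3.5 GB/s and 7.0 GB/s (typical figures for fast drives of each generation, not numbers I measured):

```python
def copy_seconds(size_gb: float, sustained_gb_per_s: float) -> float:
    """Idealized copy time: file size divided by sustained throughput."""
    return size_gb / sustained_gb_per_s


print(round(copy_seconds(50, 3.5), 1))  # 14.3
print(round(copy_seconds(50, 7.0), 1))  # 7.1
```

This ignores the speed of the source drive, caching, and thermal throttling, so treat it as a lower bound.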

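NVMe isn't just faster. It also supports far more command queues, each with a much greater queue depth.

Think of it like train station ticket windows: SATA (AHCI) has a single window with a line of up to 32 people, while NVMe can open roughly 64,000 windows, each with a line of up to roughly 64,000 people.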

More windows mean more people get served at once, reducing wait times.
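To put numbers on the ticket-window picture, here is a toy model. It assumes the commonly cited limits (one queue of 32 commands for AHCI, up to 65,535 queues of 65,536 commands for NVMe) and pretends the drive clears everything in flight in one "round". Real drives expose far fewer queues and do not work in neat rounds, so this only illustrates the scale:

```python
import math

# Commonly cited limits; treat the NVMe values as spec maximums, not what a drive exposes.
AHCI_QUEUES, AHCI_DEPTH = 1, 32
NVME_QUEUES, NVME_DEPTH = 65_535, 65_536


def max_in_flight(queues: int, depth: int) -> int:
    """Upper bound on commands that can be outstanding at once."""
    return queues * depth


def rounds_to_serve(requests: int, in_flight: int) -> int:
    """How many batches are needed if only `in_flight` requests fit per batch."""
    return math.ceil(requests / in_flight)


pending = 10_000  # hypothetical burst of I/O requests
print(rounds_to_serve(pending, max_in_flight(AHCI_QUEUES, AHCI_DEPTH)))  # 313
print(rounds_to_serve(pending, max_in_flight(NVME_QUEUES, NVME_DEPTH)))  # 1
```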

This matters in production when you're running services with heavy traffic. As a service grows, disk I/O often becomes the bottleneck. Once traffic exceeds a certain threshold, database queries start piling up in the queue—and that wait time becomes noticeable. Switching to NVMe significantly reduces this problem.

Modern motherboards have M.2 slots. You plug a thin SSD stick into the slot. But here's the trap.

M.2 is just the shape. Whether it's SATA or NVMe under the hood is a separate thing.

They look almost identical. You have to check the specs carefully.

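M.2 connectors have different notch (Key) types. M Key drives have a single notch and can use up to four PCIe lanes, which is what NVMe SSDs typically use. B+M Key drives have two notches and are usually SATA (or PCIe x2 at most).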

When I first bought an M.2 SSD, I thought "M.2 = fast, right?" Wrong. Turns out it was a B+M Key model, so it ran at SATA speeds. Since then, I always check if the product name says "NVMe".

NVMe SSDs run fast, so they run hot. Especially with PCIe 4.0+, temperatures can hit 70~80°C under heavy load.

When temperatures go too high, the SSD automatically slows down. That's thermal throttling.

I noticed this while copying large files continuously. Started at 7,000MB/s, then after 5 minutes it dropped to 2,000MB/s. Checked the temp: 82°C. Heat was choking the speed.
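If you want to watch for this yourself, one option is to poll the drive's SMART log and convert the reading. The sketch below assumes the JSON produced by `sudo nvme smart-log /dev/nvme0n1 -o json` contains a `temperature` field in Kelvin; check the output of your nvme-cli version before relying on that. The 70°C warning level is a hypothetical threshold borrowed from the range mentioned above, not a value the drive reports:

```python
import json

WARN_CELSIUS = 70  # hypothetical warning level; actual throttle points are drive-specific


def temperature_celsius(smart_log_json: str) -> float:
    """Extract the composite temperature, assuming a Kelvin `temperature` field."""
    data = json.loads(smart_log_json)
    return data["temperature"] - 273.15


def is_running_hot(smart_log_json: str, warn: float = WARN_CELSIUS) -> bool:
    """True when the reported temperature is at or above the warning level."""
    return temperature_celsius(smart_log_json) >= warn


sample = '{"temperature": 355}'  # 355 K, close to the 82°C reading described above
print(round(temperature_celsius(sample)), is_running_hot(sample))  # 82 True
```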

Modern motherboards come with heatsinks on M.2 slots. If yours doesn't have one, buy one separately and stick it on. Especially in laptops where space is tight, heat management is critical.

After adding a heatsink to my NVMe SSD, temps dropped by about 15°C. Even under heavy load, it stayed below 60°C. One heatsink made the difference in sustained performance.

Datacenters and server setups often use other NVMe form factors instead of M.2.

U.2 is a 2.5-inch form factor with NVMe speeds—an enterprise-grade standard. Looks like a SATA SSD, but uses PCIe internally.

I was reading cloud server specs and saw "U.2 NVMe" as an option. Didn't know what it was at first. Turns out, servers use U.2 for stability and easy replacement.

There's also a technology to access remote NVMe SSDs over a network. NVMe over Fabrics (NVMe-oF) uses high-speed network transports (RDMA variants such as RoCE, as well as Fibre Channel and TCP) to keep latency close to that of local NVMe while sharing storage over the network.

This is mainly used in large datacenters. Multiple servers share one massive NVMe storage pool.

Here's how to check on Linux.

```bash
lsblk -d -o NAME,SIZE,TYPE,TRAN

NAME    SIZE  TYPE TRAN
sda     1TB   disk sata
nvme0n1 512GB disk nvme
```
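If you need this check in a script, for example to fail a provisioning step when a data disk turns out to be SATA, the same `lsblk` columns can be parsed. This is only a sketch: the function names are made up, and it assumes `lsblk -d -n -o NAME,TRAN` prints one device per line with the transport as the second field.

```python
import subprocess


def parse_lsblk(output: str) -> dict[str, str]:
    """Map device name -> transport from `lsblk -d -n -o NAME,TRAN` output."""
    transports = {}
    for line in output.splitlines():
        fields = line.split()
        if not fields:
            continue
        # TRAN can be empty (e.g. loop devices), leaving only the name.
        transports[fields[0]] = fields[1] if len(fields) > 1 else "unknown"
    return transports


def read_transports() -> dict[str, str]:
    """Run lsblk on the local machine (Linux only) and parse the result."""
    result = subprocess.run(
        ["lsblk", "-d", "-n", "-o", "NAME,TRAN"],
        capture_output=True, text=True, check=True,
    )
    return parse_lsblk(result.stdout)


sample = "sda     sata\nnvme0n1 nvme\nloop0\n"
print(parse_lsblk(sample))
# {'sda': 'sata', 'nvme0n1': 'nvme', 'loop0': 'unknown'}
```

Calling `read_transports()` on a real machine returns the same kind of mapping for the installed disks.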

```bash
sudo nvme list

Node             SN                   Model                                    Namespace Usage                      Format           FW Rev
---------------- -------------------- ---------------------------------------- --------- -------------------------- ---------------- --------
/dev/nvme0n1     S5XXXXXXXXXX         Samsung SSD 980 PRO 512GB                1         500.11 GB / 512.11 GB      512 B + 0 B      5B2QGXA7
```

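This shows the model, capacity, and firmware. For the negotiated PCIe link speed, look at the `LnkSta` line in `sudo lspci -vv`, and for temperature use `sudo nvme smart-log /dev/nvme0n1`.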

fio is a Linux tool for measuring disk I/O performance.

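```bash
# Sequential read speed test
# Reads straight from the raw device, so double-check the device name.
# --readonly makes fio refuse to issue any writes.
sudo fio --name=seq_read \
  --filename=/dev/nvme0n1 \
  --readonly \
  --rw=read \
  --bs=1M \
  --size=1G \
  --ioengine=libaio \
  --direct=1 \
  --numjobs=1 \
  --runtime=30 \
  --time_based
```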

When I first ran this test, I thought, "These aren't just numbers. I can feel this." SATA gave me 550MB/s, then I switched to NVMe and got 6,800MB/s. Same task, roughly 12x faster, visible to the naked eye.

For me, the difference showed up in my CI/CD pipeline. Building Docker images and running tests took 8 minutes on SATA. After switching to NVMe, it dropped to 3 minutes. Deploy 10 times a day, that's 50 minutes saved.

In the end, it's about cost vs. benefit. NVMe costs 1.5~2x more than SATA. If the speed difference doesn't translate to real-world gains in your workflow, there's no reason to pay extra.

Here's what it came down to.

No matter how fast your car (SSD) is, if you're driving on a narrow road (SATA), you'll be slow.

When I first used an SSD, I thought "SSDs are all fast, right?" But after hitting walls in production, I learned that the interface (connection method) can be the real bottleneck.

If you buy a Ferrari, you need the Autobahn. NVMe is that Autobahn.

| Type | SATA SSD | NVMe SSD |
|---|---|---|
| Analogy | 1-Lane Road | 16-Lane Highway |
| Max Speed | ~600 MB/s (Capped) | Up to ~8,000 MB/s (PCIe 4.0 x4) |
| Connection | Via SATA Controller | PCIe lanes, typically direct to CPU |
| Queue Depth | 1 queue, max 32 commands | Up to ~64K queues, ~64K commands each |
| Heat | Low | High (heatsink recommended) |
| Use Case | Budget PC, Secondary Storage, Backup | High-End Gaming, Servers, DB, AI, Video Editing |

Just because it fits in the M.2 slot doesn't mean it's fast. Checking if it's SATA or NVMe—that's what separates pros from amateurs.

When choosing server specs or buying an SSD, I now always check: "Does it support NVMe?" That was the first step to unlocking performance bottlenecks.