Load Balancing: Traffic Distribution

Understanding load balancing principles and practical applications through project experience

Understanding load balancing principles and practical applications through project experience

Postmortem purpose and writing method

Understanding Terraform principles and practical applications through project experience

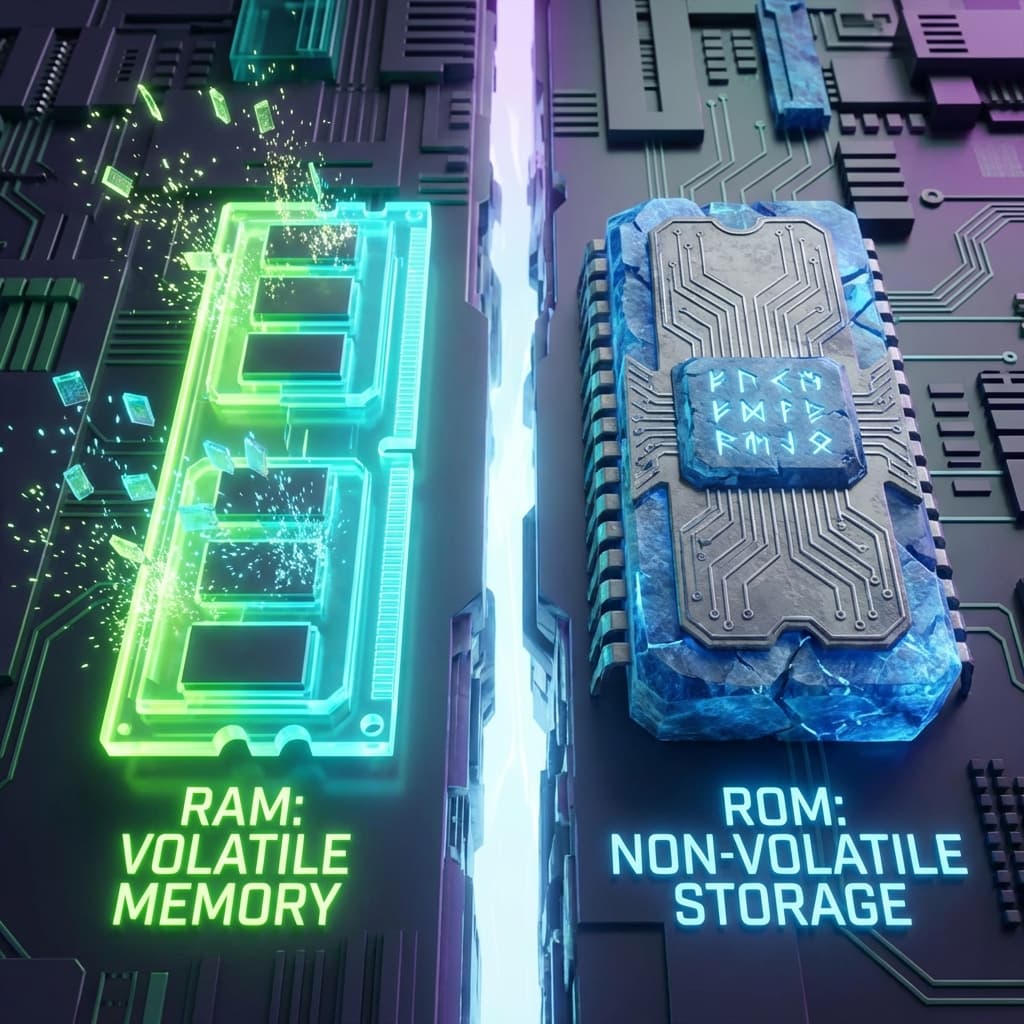

From DRAM leakage currents to ECC, HBM, and real benchmarks (fio, sysbench). A developer's guide going beyond simple analogies.

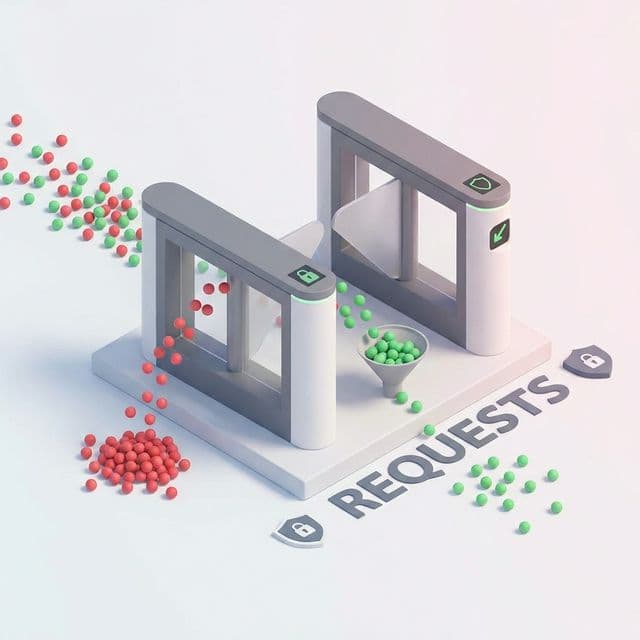

Public APIs face unexpected traffic floods without proper protection. Rate limiting, API key management, and IP restrictions to protect your API.

I was running a service on a single server, and it crashed when traffic spiked. I added a second server, which raised a new question: how do I distribute traffic between the two?

At first, I tried using DNS for traffic distribution. But traffic kept going to servers that were already dead.

After implementing a load balancer, I understood the difference. A load balancer distributes traffic intelligently and detects failed servers.

The most confusing part was: "The load balancer is also a server, so what happens if it dies?"

Another point of confusion was: "Which algorithm should I use?" Round Robin, Least Connections, IP Hash... what is the difference?

And "What are L4 and L7?" was also unclear.

The analogy that made it click was the "restaurant waiter."

Load Balancer = Waiter: This analogy helped me understand. A load balancer receives traffic and sends each request to the most appropriate server.

In short, a load balancer distributes traffic across multiple servers to reduce the load on any single server and to increase availability.

    Client → Load Balancer → Server1
                           → Server2
                           → Server3

Round Robin distributes requests to the servers in order. It is what nginx uses when the upstream block names no other method:

    upstream backend {
        server server1.example.com;
        server server2.example.com;
        server server3.example.com;
    }

Pros: Simple and fair

Cons: Ignores server performance differences
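To make the "in order" idea concrete, here is a minimal Python sketch of a round-robin picker. It is only an illustration of the rotation, not how nginx implements it, and the `pick_server` helper is a hypothetical name.

```python
from itertools import cycle

# Server names mirror the upstream block above.
servers = ["server1.example.com", "server2.example.com", "server3.example.com"]

# cycle() yields the servers in order and wraps around forever.
rotation = cycle(servers)

def pick_server():
    """Return the next server in the rotation."""
    return next(rotation)

# Four requests: the fourth wraps back to the first server.
picks = [pick_server() for _ in range(4)]
```

Every server gets the same share of requests regardless of how busy it is, which is exactly the weakness listed under Cons.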

Least Connections sends each request to the server with the fewest active connections:

    upstream backend {
        least_conn;
        server server1.example.com;
        server server2.example.com;
    }

Pros: Considers server load

Cons: Judges load only by connection count
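The selection rule can be sketched in a few lines of Python. This is a simplified model that assumes the balancer keeps an in-memory connection counter per server; the function names and the sample counts are hypothetical.

```python
def pick_least_conn(active_connections):
    """Return the server that currently has the fewest active connections."""
    return min(active_connections, key=active_connections.get)

def open_connection(active_connections):
    """Pick a server for a new request and count the new connection."""
    server = pick_least_conn(active_connections)
    active_connections[server] += 1
    return server

def close_connection(active_connections, server):
    """Release a connection once the request has finished."""
    active_connections[server] -= 1

# Hypothetical snapshot: server1 is busier than server2.
active = {"server1.example.com": 12, "server2.example.com": 4}
chosen = open_connection(active)
```

Note that the counter says nothing about how expensive each connection is, which is the limitation listed under Cons.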

IP Hash selects the server based on the client IP, so the same client keeps reaching the same server:

    upstream backend {
        ip_hash;
        server server1.example.com;
        server server2.example.com;
    }

Pros: Can maintain sessions

Cons: Possible traffic imbalance
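The idea behind IP-based selection can be sketched as follows. This is an illustration only: it hashes the whole address with SHA-256, which is an assumption made for the example and not the hash function nginx uses.

```python
import hashlib

servers = ["server1.example.com", "server2.example.com"]

def pick_by_ip(client_ip, pool):
    """Map a client IP to one server in the pool, always the same one."""
    # A stable hash is needed; Python's built-in hash() is randomized per process.
    digest = hashlib.sha256(client_ip.encode("ascii")).digest()
    index = int.from_bytes(digest[:8], "big") % len(pool)
    return pool[index]

first = pick_by_ip("203.0.113.7", servers)
second = pick_by_ip("203.0.113.7", servers)
```

Because the mapping depends only on the address, many clients behind one NAT gateway all land on the same server, which is where the imbalance comes from.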

Weighted Round Robin assigns weights according to server performance, so stronger servers receive more requests:

    upstream backend {
        server server1.example.com weight=3;
        server server2.example.com weight=2;
        server server3.example.com weight=1;
    }

Pros: Reflects server performance differences

Cons: Requires weight configuration
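One common way to implement weighted rotation is the "smooth" variant, where each server accumulates its weight on every round and the current leader is picked and then penalized by the total weight. The sketch below shows that technique under the weights from the config above; `make_weighted_picker` is a hypothetical helper, and the details of any particular load balancer's implementation may differ.

```python
def make_weighted_picker(weights):
    """weights: dict mapping server name to a positive integer weight."""
    current = {name: 0 for name in weights}
    total = sum(weights.values())

    def pick():
        # Every server gains its own weight on each round.
        for name, weight in weights.items():
            current[name] += weight
        # The server with the highest running score wins this round...
        chosen = max(current, key=current.get)
        # ...and pays back the total so the others can catch up.
        current[chosen] -= total
        return chosen

    return pick

pick = make_weighted_picker({"server1": 3, "server2": 2, "server3": 1})
sequence = [pick() for _ in range(6)]
```

Over any six consecutive picks the servers are chosen three, two, and one times respectively, and the picks for the heaviest server are spread out instead of arriving in a burst.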

L4 load balancing distributes traffic at the TCP/UDP level, looking only at IP addresses and ports:

    Client → L4 Load Balancer (IP, Port based) → Server

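Pros: Fast and lightweight, because only IP addresses and ports are inspected

Cons: Cannot route on request content such as URL paths or headers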

L7 load balancing distributes traffic at the HTTP level, so it can route by request content such as the URL path:

    http {
        upstream api_servers {
            server api1.example.com;
            server api2.example.com;
        }

        upstream web_servers {
            server web1.example.com;
            server web2.example.com;
        }

        server {
            location /api/ {
                proxy_pass http://api_servers;
            }

            location / {
                proxy_pass http://web_servers;
            }
        }
    }

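Pros: Can route on request content such as URL path, host, and headers

Cons: Parsing each request costs more processing than L4 forwarding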
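The path-based routing in the L7 config above boils down to a prefix lookup. Here is a small Python sketch of that decision, assuming longest-prefix-wins matching; the `POOLS` table and `choose_pool` function are hypothetical names for the example.

```python
# Prefix-to-pool table mirroring the two location blocks in the config.
POOLS = {
    "/api/": ["api1.example.com", "api2.example.com"],
    "/": ["web1.example.com", "web2.example.com"],
}

def choose_pool(path):
    """Return the server pool whose prefix is the longest match for the path."""
    matches = [prefix for prefix in POOLS if path.startswith(prefix)]
    if not matches:
        raise ValueError(f"not an absolute request path: {path!r}")
    return POOLS[max(matches, key=len)]

api_pool = choose_pool("/api/users")
web_pool = choose_pool("/index.html")
```

An L4 balancer cannot make this decision at all, because it never sees the request path.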

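Passive health checks judge server status from actual requests. After max_fails failed attempts within fail_timeout, nginx stops sending requests to that server for the duration of fail_timeout:

    upstream backend {
        server server1.example.com max_fails=3 fail_timeout=30s;
        server server2.example.com max_fails=3 fail_timeout=30s;
    }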
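The max_fails / fail_timeout behavior can be modeled with a small failure tracker. This is a simplified sketch with a caller-supplied clock, not nginx's actual bookkeeping; the `PassiveHealth` class is a hypothetical name.

```python
class PassiveHealth:
    """Tracks failures seen on real requests to one server."""

    def __init__(self, max_fails=3, fail_timeout=30.0):
        self.max_fails = max_fails
        self.fail_timeout = fail_timeout
        self.failures = []       # timestamps of recent failures
        self.down_until = 0.0    # server is skipped until this time

    def record_failure(self, now):
        # Only failures inside the fail_timeout window count.
        self.failures = [t for t in self.failures if now - t < self.fail_timeout]
        self.failures.append(now)
        if len(self.failures) >= self.max_fails:
            # Too many recent failures: take the server out for fail_timeout.
            self.down_until = now + self.fail_timeout
            self.failures = []

    def is_available(self, now):
        return now >= self.down_until

health = PassiveHealth(max_fails=3, fail_timeout=30.0)
for timestamp in (0.0, 1.0, 2.0):
    health.record_failure(timestamp)
```

After the third failure at t=2 the server is skipped until t=32. The cost of this approach is that real user requests have to fail before the problem is noticed.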

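Active health checks probe server status periodically. Note that the check directives below are not part of stock open-source nginx; they come from the third-party nginx_upstream_check_module (also bundled with Tengine):

    upstream backend {
        server server1.example.com;
        server server2.example.com;

        check interval=3000 rise=2 fall=5 timeout=1000 type=http;
        check_http_send "HEAD / HTTP/1.0\r\n\r\n";
        check_http_expect_alive http_2xx http_3xx;
    }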
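The rise and fall parameters describe a small state machine: a server goes down only after `fall` consecutive failed probes and comes back only after `rise` consecutive successful ones. A minimal sketch of that logic, with a hypothetical `ActiveHealth` class and the values from the config above:

```python
class ActiveHealth:
    """Up/down state for one server, driven by periodic probe results."""

    def __init__(self, rise=2, fall=5, initially_up=True):
        self.rise = rise
        self.fall = fall
        self.up = initially_up
        self.streak = 0  # consecutive probes that contradict the current state

    def observe(self, probe_ok):
        """Feed one probe result and return the resulting state."""
        if probe_ok == self.up:
            # The probe agrees with the current state, so any streak is broken.
            self.streak = 0
            return self.up
        self.streak += 1
        threshold = self.fall if self.up else self.rise
        if self.streak >= threshold:
            self.up = not self.up
            self.streak = 0
        return self.up

server = ActiveHealth(rise=2, fall=5)
states = [server.observe(False) for _ in range(5)]
```

Requiring several consecutive results in a row keeps a single slow probe from flapping the server in and out of rotation.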

    http {
        upstream app_servers {
            least_conn;

            server app1.example.com:8080 weight=3 max_fails=3 fail_timeout=30s;
            server app2.example.com:8080 weight=2 max_fails=3 fail_timeout=30s;
            server app3.example.com:8080 weight=1 max_fails=3 fail_timeout=30s;

            keepalive 32;
        }

        server {
            listen 80;
            server_name example.com;

            location / {
                proxy_pass http://app_servers;
                proxy_http_version 1.1;
                proxy_set_header Connection "";
                proxy_set_header Host $host;
                proxy_set_header X-Real-IP $remote_addr;
                proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
                proxy_set_header X-Forwarded-Proto $scheme;

                proxy_connect_timeout 5s;
                proxy_send_timeout 60s;
                proxy_read_timeout 60s;
            }
        }
    }

Sticky sessions always route a specific client to the same server:

    upstream backend {
        ip_hash;
        server server1.example.com;
        server server2.example.com;
    }

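Alternatively, share sessions through Redis so that any server can read them:

    const express = require('express');
    const session = require('express-session');
    // This call style matches connect-redis v6 and earlier with node-redis v3;
    // later major versions of both packages changed their APIs.
    const RedisStore = require('connect-redis')(session);
    const redis = require('redis');

    const app = express();

    const redisClient = redis.createClient({
      host: 'redis.example.com',
      port: 6379
    });

    app.use(session({
      store: new RedisStore({ client: redisClient }),
      secret: 'your-secret-key', // placeholder: load a real secret from configuration
      resave: false,
      saveUninitialized: false
    }));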

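To keep the load balancer itself from being a single point of failure, configure VRRP with Keepalived. Two load balancers share one virtual IP, and the backup takes it over if the master fails:

    # Master
    vrrp_instance VI_1 {
        state MASTER
        interface eth0
        virtual_router_id 51
        priority 100
        virtual_ipaddress {
            192.168.1.100
        }
    }

    # Backup
    vrrp_instance VI_1 {
        state BACKUP
        interface eth0
        virtual_router_id 51
        priority 90
        virtual_ipaddress {
            192.168.1.100
        }
    }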
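The outcome of the failover can be summarized as "the highest-priority node that is still alive holds the virtual IP." The sketch below models only that outcome. Real VRRP reaches it through periodic advertisements and timers, which are left out here, and the node names are hypothetical.

```python
def elect_master(nodes):
    """nodes: list of (name, priority, alive) tuples.

    Returns the name of the node that should hold the virtual IP,
    or None when no node is alive.
    """
    candidates = [(priority, name) for name, priority, alive in nodes if alive]
    if not candidates:
        return None
    # Highest priority wins; equal priorities are not handled realistically here.
    return max(candidates)[1]

# Priorities mirror the Keepalived config above.
normal = elect_master([("lb-master", 100, True), ("lb-backup", 90, True)])
failover = elect_master([("lb-master", 100, False), ("lb-backup", 90, True)])
```

Clients keep using the same virtual IP in both cases, so the failover is invisible to them apart from a short interruption.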

    log_format upstreamlog '$remote_addr - $remote_user [$time_local] '
                           '"$request" $status $body_bytes_sent '
                           '"$http_referer" "$http_user_agent" '
                           'upstream: $upstream_addr '
                           'upstream_response_time: $upstream_response_time '
                           'request_time: $request_time';

    access_log /var/log/nginx/access.log upstreamlog;
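Once the upstream fields are in the access log, a short script can show how each backend is doing. The following Python sketch averages upstream_response_time per upstream address for lines written in the upstreamlog format above. The sample lines are invented for illustration, and lines where a request was retried on several upstreams (comma-separated values) or has no upstream time are simply skipped.

```python
import re
from collections import defaultdict

# Matches the tail of a line written with the upstreamlog format.
LINE = re.compile(
    r"upstream: (?P<addr>\S+) "
    r"upstream_response_time: (?P<urt>\d+\.\d+) "
    r"request_time: (?P<rt>\d+\.\d+)$"
)

def average_upstream_time(lines):
    """Return {upstream address: mean upstream_response_time in seconds}."""
    totals = defaultdict(lambda: [0.0, 0])  # addr -> [sum, count]
    for line in lines:
        match = LINE.search(line.strip())
        if match is None:
            continue  # unparsable, retried, or no upstream involved
        entry = totals[match["addr"]]
        entry[0] += float(match["urt"])
        entry[1] += 1
    return {addr: total / count for addr, (total, count) in totals.items()}

# Invented sample lines for illustration only.
sample = [
    '203.0.113.7 - - [10/Oct/2024:13:55:36 +0000] "GET /api/a HTTP/1.1" 200 512 "-" "curl/8.0" '
    'upstream: 10.0.0.11:8080 upstream_response_time: 0.100 request_time: 0.105',
    '203.0.113.8 - - [10/Oct/2024:13:55:37 +0000] "GET /api/b HTTP/1.1" 200 128 "-" "curl/8.0" '
    'upstream: 10.0.0.11:8080 upstream_response_time: 0.300 request_time: 0.310',
    '203.0.113.9 - - [10/Oct/2024:13:55:38 +0000] "GET /api/c HTTP/1.1" 200 64 "-" "curl/8.0" '
    'upstream: 10.0.0.12:8080 upstream_response_time: 0.050 request_time: 0.052',
]
averages = average_upstream_time(sample)
```

A backend whose average drifts away from the others is a candidate for a lower weight or a closer look.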

Prometheus + Grafana:

    # prometheus.yml
    scrape_configs:
      - job_name: 'nginx'
        static_configs:
          - targets: ['nginx-exporter:9113']

    # API servers: Least Connections
    upstream api {
        least_conn;
        server api1:8080;
        server api2:8080;
    }

    # Static files: Round Robin
    upstream static {
        server static1:80;
        server static2:80;
    }

    # Session required: IP Hash
    upstream session {
        ip_hash;
        server session1:3000;
        server session2:3000;
    }

    proxy_connect_timeout 5s;   # Connection timeout
    proxy_send_timeout 60s;     # Send timeout
    proxy_read_timeout 60s;     # Read timeout

    proxy_buffer_size 4k;
    proxy_buffers 8 4k;
    proxy_busy_buffers_size 8k;

Load balancing is a core technology for improving server scalability and availability. Various algorithms exist like Round Robin, Least Connections, and IP Hash, operating at L4 and L7 levels. Health checks detect failures, and session management maintains user experience.

I choose algorithms based on project characteristics: Least Connections for API servers, Round Robin for static files, and IP Hash when sessions are needed. I also make the load balancers themselves redundant to eliminate single points of failure.

The key is "understanding traffic patterns." Analyze traffic, choose appropriate algorithms and settings, and you can operate a stable service. Monitor everything, adjust based on real data, and continuously improve your load balancing strategy.

Situation: Increased users causing API server response time degradation

Solution:

    upstream api_backend {
        least_conn;
        server api1:8080 weight=3;
        server api2:8080 weight=2;
        server api3:8080 weight=1;
    }

Result: 70% reduction in average response time

Situation: Login sessions not shared between servers

Solution:

    upstream web_backend {
        ip_hash;
        server web1:3000;
        server web2:3000;
    }

Result: Session persistence problem solved

Situation: Serving static files without CDN

Solution:

    upstream static_backend {
        server static1:80;
        server static2:80;
        server static3:80;
    }

Result: Even traffic distribution

Sticky sessions can cause load imbalance between servers:

    # Bad: Only using IP Hash
    upstream backend {
        ip_hash;
        server server1;
        server server2;
    }

    # Good: Share sessions with Redis
    # All servers can access sessions

A health check interval that is too short adds load to the servers, while one that is too long delays failure detection:

    # Appropriate setting (interval and timeout are in milliseconds)
    check interval=3000 rise=2 fall=5 timeout=1000;

Configure according to application characteristics:

    # API: Short timeout
    proxy_read_timeout 30s;

    # File upload: Long timeout
    proxy_read_timeout 300s;

Cause: IP Hash + Traffic concentration from specific IP range

Solution: Use Consistent Hashing or Least Connections
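For readers unfamiliar with consistent hashing, the sketch below shows the core idea: servers are placed at many points on a hash ring, and each key goes to the first server point at or after its own hash. The `HashRing` class, the SHA-256 hash, and the replica count of 100 are all assumptions made for the example, and the ring is assumed to be built from a non-empty server list.

```python
import bisect
import hashlib

def _hash(key):
    """Stable 64-bit hash of a string."""
    return int.from_bytes(hashlib.sha256(key.encode("utf-8")).digest()[:8], "big")

class HashRing:
    def __init__(self, servers, replicas=100):
        # Each server appears at `replicas` pseudo-random points on the ring.
        self._ring = sorted(
            (_hash(f"{server}#{i}"), server)
            for server in servers
            for i in range(replicas)
        )
        self._points = [point for point, _ in self._ring]

    def pick(self, key):
        """Return the server owning the first ring point after the key's hash."""
        index = bisect.bisect(self._points, _hash(key)) % len(self._points)
        return self._ring[index][1]

three = HashRing(["server1", "server2", "server3"])
two = HashRing(["server1", "server2"])  # server3 removed
clients = [f"198.51.100.{n}" for n in range(1, 101)]
moved = [c for c in clients if three.pick(c) != two.pick(c)]
```

Only clients that were on the removed server move to a different one; everyone else stays put. A plain modulo hash would reshuffle most clients whenever the server count changes.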

Cause: Application startup time > Health check timeout

Solution: Increase rise value or timeout

Cause: Session loss during server restart

Solution: Use external session store like Redis

Begin with Round Robin, then optimize based on actual traffic patterns:

    # Start with this
    upstream backend {
        server server1;
        server server2;
    }

    # Optimize later based on metrics
    upstream backend {
        least_conn;
        server server1 weight=3;
        server server2 weight=2;
    }

Track key metrics:

Always have redundancy:

Test failure scenarios:

Load balancing is not just about distributing traffic—it's about building resilient, scalable systems. Choose the right algorithm for your use case, monitor continuously, and be prepared to adjust as your traffic patterns evolve. The best load balancing strategy is one that adapts to your actual needs, not theoretical perfection.

Remember: start simple, measure everything, and optimize based on real data. Your users will thank you for the reliability and performance.

If you're just starting with load balancing:

Load balancing is a journey, not a destination. As your application evolves, your load balancing strategy should evolve with it: stay flexible, keep learning, and prioritize your users' experience. Unexpected situations do occur in production, so keep monitoring in place and establish a process for rapid response. With continuous learning and improvement, your setup can keep providing reliable performance at every stage of your application's lifecycle.