Terraform: Infrastructure as Code

Understanding Terraform principles and practical applications through project experience


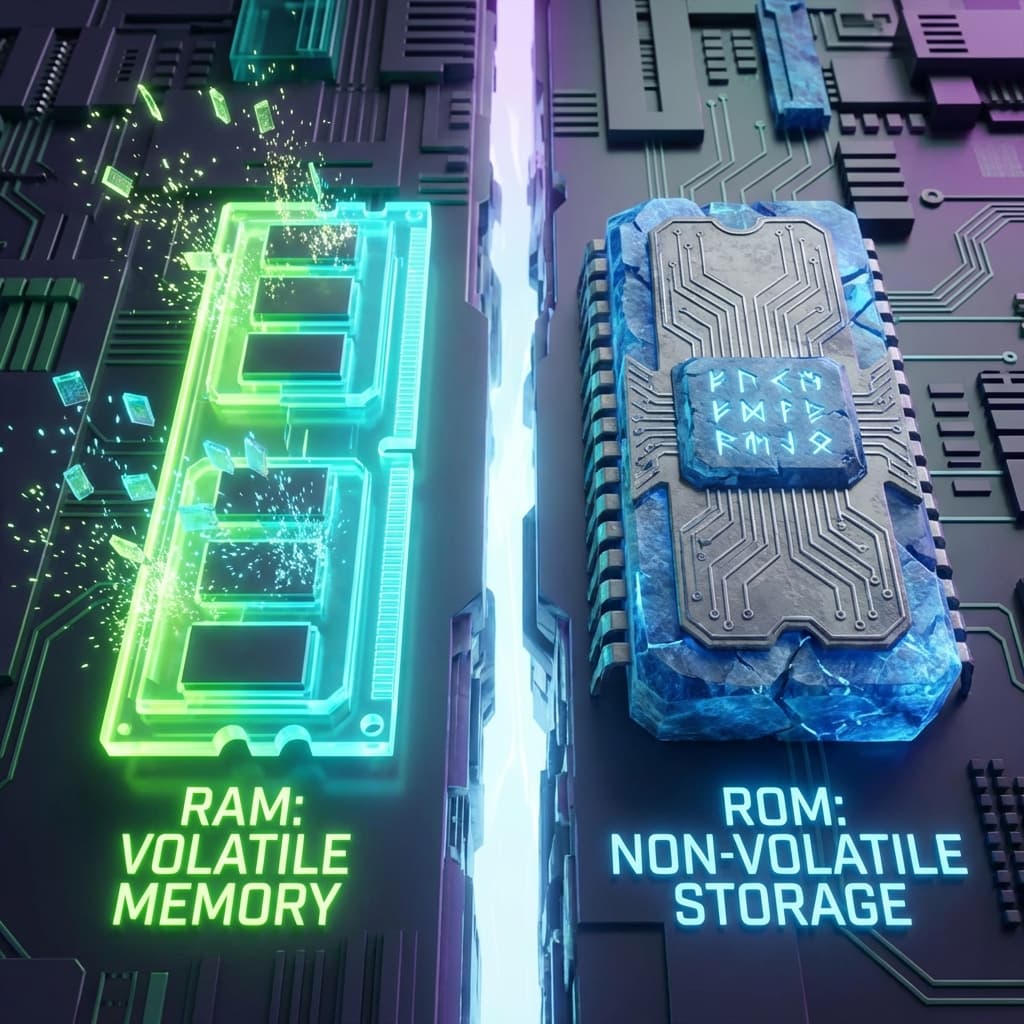


I used to configure EC2, RDS, and VPCs manually in the AWS Console. It was a nightmare.

The tipping point came during a team project. A teammate asked me to spin up a staging environment that matched production exactly. I spent hours clicking through the AWS Console trying to reproduce what I'd built weeks earlier. The security group rules were slightly off, the subnet CIDRs didn't match, and eventually those small differences caused bugs that took days to track down. It was maddening.

I was advised to try Terraform. It changed everything. I could manage infrastructure as code, version control it with Git, and automate creation/deletion!

At first, I didn't understand. "Why write code instead of just clicking buttons?"

The analogy that won me over was the architectural blueprint.

Manual configuration is like building a house by hand. Terraform is like working from a blueprint. But Terraform is not just a script; it's a state manager for your cloud.

The mental model that clicked for me was comparing it to Git. When I use Git, I never think about "what changed" — Git tracks it for me. Terraform does the same thing for infrastructure. terraform plan is like git diff. terraform apply is like git push to your cloud. Once that analogy landed, everything started making sense.

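```hcl
# main.tf

provider "aws" {
  region = "us-east-1"
}

resource "aws_instance" "web" {
  // AMI IDs are region-specific and get superseded over time; replace this
  // with a current Amazon Linux 2 AMI ID for us-east-1 before applying.
  ami           = "ami-0c55b159cbfafe1f0"
  instance_type = "t2.micro" // Free tier eligible

  tags = {
    Name = "MyWebServer"
  }
}
```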
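A hardcoded AMI ID exists in only one region and is eventually replaced by newer images. A common alternative is to look the image up at plan time. The sketch below uses the aws_ami data source. The name filter amzn2-ami-hvm-*-x86_64-gp2 is an assumption about current Amazon Linux 2 naming, so verify it in your account before relying on it:

```hcl
# Look up the newest Amazon Linux 2 AMI published by Amazon in the provider's region.
data "aws_ami" "amazon_linux_2" {
  most_recent = true
  owners      = ["amazon"]

  filter {
    name   = "name"
    values = ["amzn2-ami-hvm-*-x86_64-gp2"] # assumed naming pattern
  }
}

# Same instance as above, with the AMI resolved by the data source instead of hardcoded.
resource "aws_instance" "web" {
  ami           = data.aws_ami.amazon_linux_2.id
  instance_type = "t2.micro"

  tags = {
    Name = "MyWebServer"
  }
}
```

This replaces the resource block above rather than sitting next to it, because two resources cannot share the address aws_instance.web. There is one trade-off: with most_recent, a newly published AMI shows up in the next plan as an instance replacement, since changing ami forces a new instance.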

```shell
# 1. Initialize (download provider plugins)
terraform init

# 2. Plan (dry run: shows what will happen)
terraform plan

# 3. Apply (execute: actually creates resources)
terraform apply

# 4. Destroy (cleanup)
terraform destroy
```

Pro Tip: Always run plan before apply. It's your safety net.

Hardcoding values is bad practice. Use variables.tf.

```hcl
# variables.tf

variable "instance_type" {
  description = "EC2 instance size"
  type        = string
  default     = "t2.micro"
}

variable "environment" {
  description = "Deployment environment (dev/prod)"
  type        = string
  // No default: Terraform prompts for a value unless you pass -var
}
```

```hcl
# main.tf

resource "aws_instance" "web" {
  ami           = "ami-0c55b159cbfafe1f0" // Required; use a valid AMI for your region
  instance_type = var.instance_type

  tags = {
    Environment = var.environment
  }
}
```

```shell
terraform apply -var="environment=prod"
```
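Because type = string accepts any text, a typo in the environment name would slip through unnoticed. Below is a sketch of the same variable with a validation block. The allowed values dev and prod are taken from the variable's description and are an assumption about which environments you actually have:

```hcl
variable "environment" {
  description = "Deployment environment (dev/prod)"
  type        = string

  # Reject anything outside the allowed list before any resource is touched.
  validation {
    condition     = contains(["dev", "prod"], var.environment)
    error_message = "The environment must be \"dev\" or \"prod\"."
  }
}
```

Validation blocks require Terraform 0.13 or later. An invalid value fails during plan, so nothing is created with a mislabeled tag.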

State was the most confusing part for me. "What is terraform.tfstate?"

Terraform needs to know the mapping between your code and the real-world resources.

resource "aws_instance" "web" → i-0123456789abcdef0 (AWS ID)

The state file stores this mapping.
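You can surface that mapping from the configuration side. The sketch below uses a hypothetical output named web_instance_id to expose the real ID that state records for aws_instance.web:

```hcl
# Hypothetical output: shows the AWS ID that state has mapped to aws_instance.web.
output "web_instance_id" {
  description = "EC2 instance ID behind aws_instance.web"
  value       = aws_instance.web.id
}
```

After an apply, terraform output web_instance_id prints that ID. terraform state show aws_instance.web lists every attribute Terraform stored for the resource.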

The state file can contain sensitive data (DB passwords, keys) in plain text, so keep it out of Git and store it in a remote backend.

A common setup uses S3 for storage and DynamoDB for locking, which prevents two people from running apply at the same time.

```hcl
terraform {
  backend "s3" {
    bucket         = "my-company-tf-state"
    key            = "prod/terraform.tfstate"
    region         = "us-east-1"
    dynamodb_table = "terraform-locks" // Prevents race conditions
    encrypt        = true
  }
}
```
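The backend bucket and lock table must exist before terraform init can use them. They are usually created once, from a small separate configuration. The sketch below assumes AWS provider v4 or later, where bucket versioning is its own resource. The names match the backend block above. The DynamoDB table uses a string partition key named LockID, which is the key the S3 backend expects:

```hcl
# Bucket that stores the state files.
resource "aws_s3_bucket" "tf_state" {
  bucket = "my-company-tf-state"
}

# Versioning lets you recover an earlier state file if the current one is corrupted or deleted.
resource "aws_s3_bucket_versioning" "tf_state" {
  bucket = aws_s3_bucket.tf_state.id

  versioning_configuration {
    status = "Enabled"
  }
}

# Lock table: the S3 backend writes a lock item keyed by LockID while a run holds the state.
resource "aws_dynamodb_table" "tf_locks" {
  name         = "terraform-locks"
  billing_mode = "PAY_PER_REQUEST"
  hash_key     = "LockID"

  attribute {
    name = "LockID"
    type = "S"
  }
}
```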

I learned this lesson the hard way. I accidentally deleted my local terraform.tfstate file, and Terraform had no idea that my EC2 instance already existed. It tried to create a brand new one. My heart sank. From that day on, remote backends became non-negotiable for me.

Don't copy-paste code. Use Modules to create reusable components.

```hcl
# modules/web-server/main.tf
resource "aws_instance" "this" { ... }

# main.tf (Production)
module "web_server_prod" {
  source        = "./modules/web-server"
  instance_type = "m5.large"
  name          = "prod-web"
}

# main.tf (Staging)
module "web_server_stage" {
  source        = "./modules/web-server"
  instance_type = "t2.micro"
  name          = "stage-web"
}
```

This ensures consistency across environments. Think of modules as the functions of infrastructure — they let you define behavior once and call it with different parameters. Teams often maintain a shared module repository that multiple projects pull from, which enforces consistent patterns across the organization.
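The module body is elided above. Below is one hypothetical version of modules/web-server that accepts the instance_type and name arguments used by both calls. The AMI lookup inside it, including its amzn2 name filter, is an assumption. It is there so callers don't need to pass a region-specific ID:

```hcl
# modules/web-server/variables.tf (hypothetical)
variable "instance_type" {
  description = "EC2 instance size"
  type        = string
}

variable "name" {
  description = "Value for the instance's Name tag"
  type        = string
}

# modules/web-server/main.tf (hypothetical)
data "aws_ami" "amazon_linux_2" {
  most_recent = true
  owners      = ["amazon"]

  filter {
    name   = "name"
    values = ["amzn2-ami-hvm-*-x86_64-gp2"] # assumed naming pattern
  }
}

resource "aws_instance" "this" {
  ami           = data.aws_ami.amazon_linux_2.id
  instance_type = var.instance_type

  tags = {
    Name = var.name
  }
}

# modules/web-server/outputs.tf (hypothetical)
output "instance_id" {
  value = aws_instance.this.id
}
```

Callers then read results through module outputs, for example module.web_server_prod.instance_id. The module never needs to know which environment is calling it.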

Environment Isolation: Never mix Dev and Prod in the same state file. Use separate folders or workspaces.

```text
terraform/
├── environments/
│   ├── dev/
│   └── prod/
└── modules/
```
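Each environment folder then becomes its own root configuration with its own state. Below is a sketch of what environments/dev/main.tf might hold. It reuses the bucket and lock table names from the backend example. The dev state key and the values passed to the module are illustrative:

```hcl
# environments/dev/main.tf (sketch)
terraform {
  backend "s3" {
    bucket         = "my-company-tf-state"
    key            = "dev/terraform.tfstate" # prod uses "prod/terraform.tfstate"
    region         = "us-east-1"
    dynamodb_table = "terraform-locks"
    encrypt        = true
  }
}

provider "aws" {
  region = "us-east-1"
}

# The source path is relative to environments/dev/.
module "web_server" {
  source        = "../../modules/web-server"
  instance_type = "t2.micro"
  name          = "dev-web"
}
```

Because the state keys differ, a plan run inside environments/dev only reads and writes the dev state file.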

Secrets Management:

Never put secrets in *.tf files.

Use terraform.tfvars (listed in .gitignore) or environment variables such as TF_VAR_db_password.
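On the declaring side, marking the variable as sensitive keeps its value out of plan and apply output. Below is a sketch for the db_password example. The variable name follows from TF_VAR_db_password, and the description is illustrative:

```hcl
variable "db_password" {
  description = "Database password, supplied via TF_VAR_db_password or a git-ignored terraform.tfvars"
  type        = string
  sensitive   = true # redacted in CLI output, but still written to state in plain text
}
```

sensitive = true needs Terraform 0.14 or later, and it does not encrypt anything. The value still lands in the state file, which is another reason to use an encrypted remote backend.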

Plan Automation:

Use CI/CD (GitHub Actions) to run terraform plan on Pull Requests.

This lets the team review infrastructure changes before they happen.

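Lock Your Provider Versions:

Without version constraints, a fresh terraform init installs the newest provider release, and a new major version can break your configuration without warning.

Always pin provider versions in required_providers, and commit the .terraform.lock.hcl file that terraform init generates.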

```hcl
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0" // Any 5.x release, never 6.0
    }
  }
}
```

"Drift" happens when someone changes resources manually in the AWS Console, bypassing Terraform. Now your code and reality are out of sync.

How to fix it:

1. Run terraform plan. It will show "Changes outside of Terraform". Then either run terraform apply to revert the manual change, or update the .tf files so they match reality.
2. Use terraform import to bring existing resources (ones created entirely outside Terraform) into your state.

Terraform is a discipline. It only works if everyone agrees to follow the rules. I've seen teams where one developer kept making "quick fixes" in the console, and every Terraform run became a battle against drift. The fix isn't technical; it's cultural. Everyone needs to agree that the .tf files are the single source of truth.
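Terraform 1.5 and later also accept an import block. It puts the import in code, so the import appears in terraform plan and goes through review like any other change. The sketch below reuses the example instance ID from the state section. Substitute the ID of the resource you actually need to adopt:

```hcl
# Adopt an existing, manually created instance into state at the address aws_instance.web.
import {
  to = aws_instance.web
  id = "i-0123456789abcdef0" # example ID
}
```

The target address needs a matching resource "aws_instance" "web" block. If you don't have one yet, terraform plan -generate-config-out=generated.tf can draft it from the real resource.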

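You might hear about Pulumi, which lets you write infrastructure code in general-purpose languages such as TypeScript, Python, or Go.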

But Terraform is still the industry standard. Learn Terraform first.

Just like application code, Terraform code rots.

If you have a main.tf with 5000 lines, you are doing it wrong.
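For that kind of split, a moved block (Terraform 1.1 or later) records an address change in code. On the next plan, Terraform moves the existing object in state instead of destroying and recreating it. The sketch below uses the addresses from the refactoring steps that follow. The module's inputs are elided, as they are in the text:

```hcl
# The old top-level aws_s3_bucket.old block now lives in this module
# as aws_s3_bucket.this; module inputs are omitted here.
module "bucket_1" {
  source = "./modules/s3-bucket"
}

# Tell Terraform the existing bucket now lives at the module address.
moved {
  from = aws_s3_bucket.old
  to   = module.bucket_1.aws_s3_bucket.this
}
```

The CLI equivalent is terraform state mv aws_s3_bucket.old module.bucket_1.aws_s3_bucket.this. It edits state immediately instead of going through a reviewed plan.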

Refactoring steps:

1. Move the resource into modules/s3-bucket/main.tf.
2. Call module "bucket_1" { ... } in your main code.
3. Move the state: use the moved block or the terraform state mv command to tell Terraform that aws_s3_bucket.old is now module.bucket_1.aws_s3_bucket.this. This prevents deletion and recreation!

Common errors and fixes:

1. State Lock Error:

Error: Error acquiring the state lock

Fix: run terraform force-unlock <LOCK_ID>, but only once you're sure no other Terraform run is still holding the lock.

2. Provider Error:

Error: Plugin initialization failed

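Fix: on Apple Silicon (M1) Macs, older providers may lack a darwin_arm64 build; m1-terraform-provider-helper can build one locally.

3. Cycle Error: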

Error: Cycle: aws_security_group.sg -> aws_instance.web -> aws_security_group.sg

Fix: use a separate aws_security_group_rule resource instead of inline blocks, so the group and its rules become separate nodes in the dependency graph.

Terraform is great, but these tools make it better:

- Infracost: shows how much your terraform plan will cost in $$$ before you apply.
- … terraform plan might miss.

Infracost was a revelation for me. Before deploying a new EKS node group, I ran Infracost and saw the monthly cost estimate right in the PR. That kind of visibility prevents expensive surprises at the end of the month.

Terraform gave me the confidence to tear down and rebuild my entire infrastructure in minutes. It turned "Fear of Touching Infrastructure" into "Infrastructure as Code."

The shift in mindset is the real win. Before Terraform, I treated infrastructure like a fragile artifact — something precious that must never be touched. After Terraform, I understood that infrastructure should be disposable and reproducible. If something breaks, destroy it and rebuild from code. That confidence changes how you work.

Start small with a single EC2 instance.

Once you experience the magic of terraform apply, you'll never go back to the AWS Console.