API Security in Practice: Rate Limiting, API Keys, and IP Restrictions

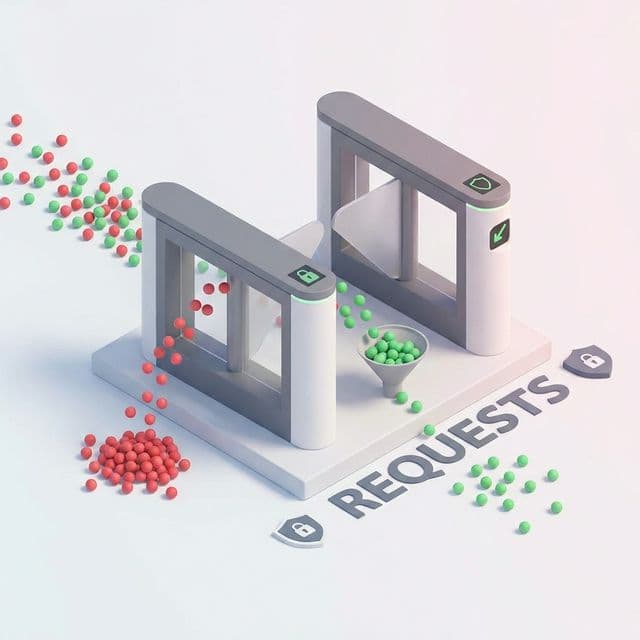

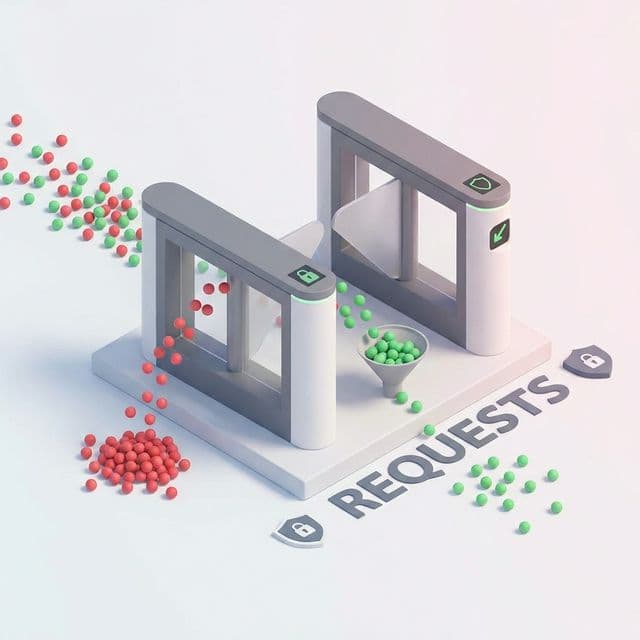

Public APIs face unexpected traffic floods without proper protection. This post covers rate limiting, API key management, and IP restrictions to protect your API.

Public APIs face unexpected traffic floods without proper protection. Rate limiting, API key management, and IP restrictions to protect your API.

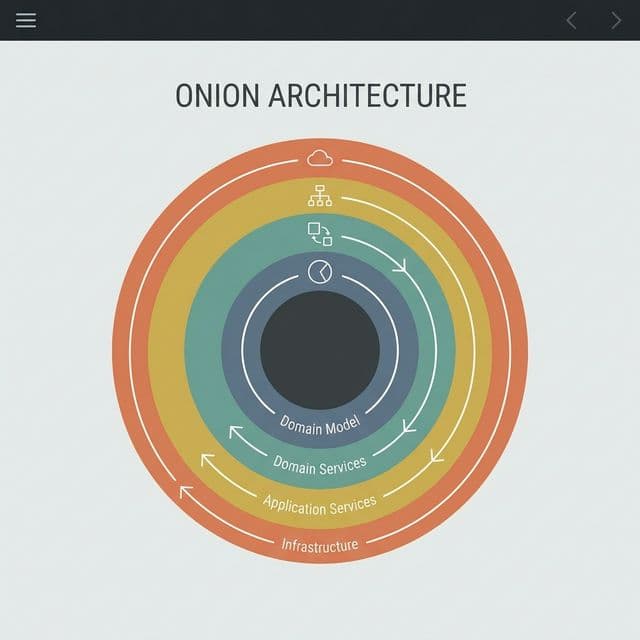

A deep dive into Robert C. Martin's Clean Architecture. Learn how to decouple your business logic from frameworks, databases, and UI using Entities, Use Cases, and the Dependency Rule. Includes Screaming Architecture and Testing strategies.

A comprehensive deep dive into client-side storage. From Cookies to IndexedDB and the Cache API. We explore security best practices for JWT storage (XSS vs CSRF), performance implications of synchronous APIs, and how to build offline-first applications using Service Workers.

App crashes only in Release mode? It's likely ProGuard/R8. Learn how to debug obfuscated stack traces, use `@Keep` annotations, and analyze `usage.txt`.

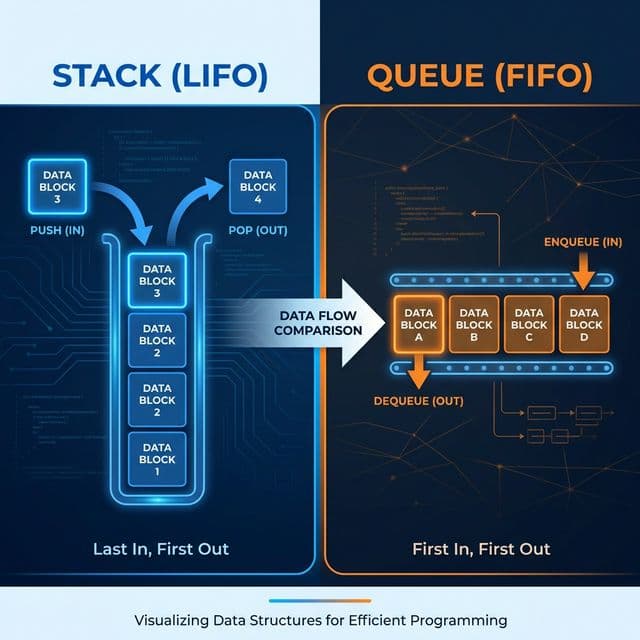

Pringles can (Stack) vs Restaurant line (Queue). The most basic data structures, but without them, you can't understand recursion or message queues.

What happens when you make an API public without rate limiting? Stories of APIs getting hammered with tens of thousands of requests within hours are surprisingly common. A single IP sending hundreds of requests per second to crawl your data, or a buggy client stuck in an infinite loop—these things happen more often than you'd think. Cloud bills skyrocketing overnight is a story you'll hear again and again.

These cases make one thing clear: making an API public means opening your server's door to the entire world. Rate limiting is essential.

There's a helpful analogy for understanding rate limiting.

Think of an API as a water faucet. Users line up to fill their bottles. But what if someone brings a massive tank and keeps filling it endlessly? No one else gets water, and the water bill (your AWS costs) explodes.

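Three solutions follow from this picture: rate limiting, API key management, and IP restrictions.

The topic can feel overwhelming at first, but this metaphor makes the core question clear: "Who can use the resources, how much, and for how long?"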

Here's a comparison of the main algorithms and their trade-offs.

Fixed Window is the simplest approach: divide time into fixed intervals and count the requests in each.

```typescript
// Fixed Window with Redis
import { Redis } from '@upstash/redis'

const redis = new Redis({
  url: process.env.UPSTASH_REDIS_REST_URL!,
  token: process.env.UPSTASH_REDIS_REST_TOKEN!,
})

async function fixedWindowRateLimit(
  identifier: string,
  limit: number,
  windowSeconds: number
): Promise<{ allowed: boolean; remaining: number }> {
  const now = Date.now()
  // Index of the current fixed window (e.g. the current minute)
  const windowId = Math.floor(now / (windowSeconds * 1000))
  const key = `ratelimit:${identifier}:${windowId}`

  // INCR is atomic, so concurrent requests never read the same count
  const count = await redis.incr(key)
  if (count === 1) {
    // First request in this window: let the key expire with the window.
    // (INCR and EXPIRE are separate calls; a transaction or Lua script
    // would make the pair atomic.)
    await redis.expire(key, windowSeconds)
  }

  const allowed = count <= limit
  const remaining = Math.max(0, limit - count)
  return { allowed, remaining }
}

// Usage: limit to 60 requests per minute per user
async function handleRequest(userId: string): Promise<Response> {
  const { allowed } = await fixedWindowRateLimit(`user:${userId}`, 60, 60)

  if (!allowed) {
    return new Response('Too Many Requests', {
      status: 429,
      headers: {
        'X-RateLimit-Limit': '60',
        'X-RateLimit-Remaining': '0',
        'Retry-After': '60',
      },
    })
  }

  return new Response('OK')
}
```

The problem is the window boundary. If a client sends 60 requests at 00:59 and 60 more at 01:01, that's 120 requests in about two seconds, twice the intended rate, yet none are blocked because the counter resets when the window changes at 01:00.
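To see the boundary problem concretely, here is a minimal in-memory sketch that replays the 00:59 / 01:01 scenario with a limit of 60 per minute. It is an illustration only, not the Redis version above; `FixedWindowCounter` and `simulateBoundaryBurst` are hypothetical names, and timestamps are milliseconds since 00:00.

```typescript
// Minimal in-memory fixed window counter, for illustration only.
// Counts are kept per window index and never cleaned up.
class FixedWindowCounter {
  private counts = new Map<number, number>()

  constructor(private limit: number, private windowMs: number) {}

  allow(nowMs: number): boolean {
    const windowId = Math.floor(nowMs / this.windowMs)
    const count = (this.counts.get(windowId) ?? 0) + 1
    this.counts.set(windowId, count)
    return count <= this.limit
  }
}

// 60 requests at 00:59, then 60 at 01:01 (times in ms since 00:00)
function simulateBoundaryBurst(): number {
  const limiter = new FixedWindowCounter(60, 60_000)
  let accepted = 0
  for (let i = 0; i < 60; i++) if (limiter.allow(59_000)) accepted++
  for (let i = 0; i < 60; i++) if (limiter.allow(61_000)) accepted++
  return accepted
}

console.log(simulateBoundaryBurst()) // 120: every request passes
```

The two bursts land in windows 0 and 1, so each sees a fresh counter even though they are only two seconds apart.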

Sliding Window solves the Fixed Window boundary problem by counting requests in exactly the last N seconds, wherever the window boundary falls.

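```typescript
// Sliding Window with Redis Sorted Set
// (reuses the `redis` client created in the Fixed Window example)
// Note: steps 1-3 are separate round trips, so concurrent requests can slip
// past the limit; run them in a Lua script for strict enforcement.
async function slidingWindowRateLimit(
  identifier: string,
  limit: number,
  windowSeconds: number
): Promise<{ allowed: boolean; remaining: number; reset: number }> {
  const now = Date.now()
  const windowStart = now - windowSeconds * 1000
  const key = `ratelimit:sliding:${identifier}`

  // 1. Remove requests that fell out of the window (score <= windowStart)
  await redis.zremrangebyscore(key, 0, windowStart)

  // 2. Count requests still inside the window
  const count = await redis.zcard(key)

  // Conservative reset estimate: the oldest entry leaves the window
  // no later than one full window from now
  const reset = now + windowSeconds * 1000

  if (count < limit) {
    // 3. Record this request; the random suffix keeps members unique
    //    when two requests arrive in the same millisecond
    await redis.zadd(key, { score: now, member: `${now}-${Math.random()}` })
    await redis.expire(key, windowSeconds)
    return { allowed: true, remaining: limit - count - 1, reset }
  }

  return { allowed: false, remaining: 0, reset }
}
```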

More accurate, but it stores a Sorted Set entry for every accepted request (up to `limit` entries per client) and makes up to four Redis calls per check, so memory and cost climb under high traffic.
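The same idea can be checked against the boundary scenario with a small in-memory sketch. `SlidingWindowLog` is a hypothetical, single-process illustration that mirrors the sorted-set steps above with a plain array of timestamps; here the burst at 01:01 is rejected because the 00:59 requests are still inside the last 60 seconds.

```typescript
// In-memory sliding window log: one timestamp per accepted request,
// mirroring ZREMRANGEBYSCORE + ZCARD + ZADD from the Redis version
class SlidingWindowLog {
  private timestamps: number[] = []

  constructor(private limit: number, private windowMs: number) {}

  allow(nowMs: number): boolean {
    // Drop entries at or before the window start
    const windowStart = nowMs - this.windowMs
    this.timestamps = this.timestamps.filter(t => t > windowStart)

    if (this.timestamps.length < this.limit) {
      this.timestamps.push(nowMs)
      return true
    }
    return false
  }
}

function simulateBoundaryBurstSliding(): number {
  const limiter = new SlidingWindowLog(60, 60_000)
  let accepted = 0
  for (let i = 0; i < 60; i++) if (limiter.allow(59_000)) accepted++ // 00:59
  for (let i = 0; i < 60; i++) if (limiter.allow(61_000)) accepted++ // 01:01
  return accepted
}

console.log(simulateBoundaryBurstSliding()) // 60: the second burst is rejected
```

The array never holds more than `limit` timestamps per client, which is the memory cost of this approach in miniature.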

Token Bucket: a bucket holds tokens, and each request consumes one. Tokens refill at a constant rate, and a request is rejected when the bucket is empty.

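```typescript
import { Ratelimit } from '@upstash/ratelimit'
import { Redis } from '@upstash/redis'

const redis = new Redis({
  url: process.env.UPSTASH_REDIS_REST_URL!,
  token: process.env.UPSTASH_REDIS_REST_TOKEN!,
})

// Token Bucket: tokenBucket(refillRate, interval, maxTokens)
// Refills 10 tokens every 10s; the bucket holds at most 20, so a client
// can burst up to 20 requests but sustains at most ~1 request/second.
// (A maxTokens below refillRate would cap bursts below the refill rate.)
const ratelimit = new Ratelimit({
  redis,
  limiter: Ratelimit.tokenBucket(10, '10s', 20),
  analytics: true,
  prefix: '@upstash/ratelimit',
})

// Use in API route
export async function POST(request: Request) {
  // x-forwarded-for may hold a comma-separated proxy chain; the first entry
  // is the client. Only trust it behind a proxy that sets it.
  const ip = request.headers.get('x-forwarded-for')?.split(',')[0].trim() ?? 'anonymous'
  const { success, limit, remaining, reset } = await ratelimit.limit(ip)

  // Upstash returns `reset` as a Unix timestamp in ms; the header uses seconds
  const headers = {
    'X-RateLimit-Limit': limit.toString(),
    'X-RateLimit-Remaining': remaining.toString(),
    'X-RateLimit-Reset': Math.ceil(reset / 1000).toString(),
  }

  if (!success) {
    const retryAfterSeconds = Math.max(0, Math.ceil((reset - Date.now()) / 1000))
    return new Response('Too Many Requests', {
      status: 429,
      headers: { ...headers, 'Retry-After': retryAfterSeconds.toString() },
    })
  }

  return new Response('OK', { headers })
}
```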

Why Token Bucket is often preferred: it allows bursts while limiting the average rate. Users can occasionally send many requests at once, but sustained high volume gets blocked.
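To make the burst-versus-average behavior concrete, here is a minimal single-process token bucket sketch. It illustrates the algorithm only and is not how `@upstash/ratelimit` works internally; `TokenBucket`, `capacity`, and `refillPerSecond` are hypothetical names, and this version refills continuously based on elapsed time.

```typescript
// Minimal single-process token bucket with continuous refill.
class TokenBucket {
  private tokens: number
  private lastRefillMs: number

  constructor(
    private capacity: number,        // max tokens = max burst size
    private refillPerSecond: number, // sustained average rate
    nowMs: number
  ) {
    this.tokens = capacity // start full so a fresh client can burst
    this.lastRefillMs = nowMs
  }

  tryConsume(nowMs: number): boolean {
    // Add tokens for the time elapsed since the last call, capped at capacity
    const elapsedSeconds = (nowMs - this.lastRefillMs) / 1000
    this.tokens = Math.min(this.capacity, this.tokens + elapsedSeconds * this.refillPerSecond)
    this.lastRefillMs = nowMs

    if (this.tokens >= 1) {
      this.tokens -= 1
      return true
    }
    return false
  }
}

const bucket = new TokenBucket(5, 1, 0) // 5-token burst, 1 token/s
let burst = 0
for (let i = 0; i < 10; i++) if (bucket.tryConsume(0)) burst++
console.log(burst)                   // 5: the burst is capped by capacity
console.log(bucket.tryConsume(1000)) // true: one token refilled after 1s
console.log(bucket.tryConsume(1000)) // false: the bucket is empty again
```

Capacity controls how big a burst can be; the refill rate controls the long-run average.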

Rate limiting alone isn't enough. You need to know "who" is using the API. That's where API keys come in.

```typescript
import { randomBytes } from 'node:crypto'
import { hash, verify } from '@node-rs/argon2'

// `db` is the app's database client (a Prisma-style client is assumed here)

// Generate API key (show to user only once)
export async function generateApiKey(userId: string) {
  // 32 random bytes = 256 bits of entropy, URL-safe encoding
  const apiKey = `sk_${randomBytes(32).toString('base64url')}`

  // Hash for database storage (never store plaintext)
  const hashedKey = await hash(apiKey, {
    memoryCost: 19456, // in KiB (19 MiB)
    timeCost: 2,
    outputLen: 32,
    parallelism: 1,
  })

  await db.apiKey.create({
    data: {
      userId,
      keyHash: hashedKey,
      keyPrefix: apiKey.substring(0, 10), // plaintext lookup hint, for identification only
      scopes: ['read', 'write'],
      createdAt: new Date(),
      lastUsedAt: null,
      expiresAt: new Date(Date.now() + 365 * 24 * 60 * 60 * 1000), // 1 year
    },
  })

  return apiKey // show only once
}

// Verify API key (in middleware)
export async function verifyApiKey(apiKey: string) {
  if (!apiKey.startsWith('sk_')) {
    return null
  }

  // Prefixes are not guaranteed unique, so verify every candidate
  const prefix = apiKey.substring(0, 10)
  const candidates = await db.apiKey.findMany({
    where: { keyPrefix: prefix },
    include: { user: true },
  })

  for (const storedKey of candidates) {
    if (storedKey.expiresAt && storedKey.expiresAt < new Date()) continue
    if (!(await verify(storedKey.keyHash, apiKey))) continue

    // Update last used (fire-and-forget; a failure here shouldn't block the request)
    db.apiKey.update({
      where: { id: storedKey.id },
      data: { lastUsedAt: new Date() },
    }).catch(() => {})

    return {
      userId: storedKey.userId,
      scopes: storedKey.scopes,
      user: storedKey.user,
    }
  }

  return null
}
```

Key principle: never store the original key. As with passwords, store only hashes, so a database breach doesn't expose usable keys.
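A design note, offered as an alternative rather than a correction: Argon2 is deliberately slow and memory-hard (about 19 MiB per hash with the settings above), which protects low-entropy passwords but adds that cost to every authenticated request. Because these keys carry 256 bits of randomness, brute-forcing even a fast hash is infeasible, so a common alternative is to store a SHA-256 digest and compare it in constant time. Since the digest is deterministic, it can also be indexed and looked up directly, with no prefix search. A minimal sketch using Node's built-in `crypto` module; `hashKey`, `generateKey`, and `keyMatches` are hypothetical names.

```typescript
import { createHash, randomBytes, timingSafeEqual } from 'node:crypto'

// SHA-256 hex digest of a key; deterministic, so it can be indexed in the DB
function hashKey(apiKey: string): string {
  return createHash('sha256').update(apiKey).digest('hex')
}

// Returns the plaintext key (shown once) and the digest to store
function generateKey(): { apiKey: string; keyHash: string } {
  const apiKey = `sk_${randomBytes(32).toString('base64url')}`
  return { apiKey, keyHash: hashKey(apiKey) }
}

// Constant-time comparison of a presented key against a stored digest
function keyMatches(presented: string, storedHash: string): boolean {
  const a = Buffer.from(hashKey(presented), 'hex')
  const b = Buffer.from(storedHash, 'hex')
  // timingSafeEqual throws on length mismatch, so check lengths first
  return a.length === b.length && timingSafeEqual(a, b)
}
```

The trade-off: this relies entirely on the keys being long and random. If users could choose their own keys, a slow hash like Argon2 would be the right call again.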

API keys help, but sometimes you need to restrict access to specific IPs.

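```typescript
// Reuses the `redis` client and `db` from earlier examples;
// `isIpInCidr` is a CIDR-matching helper
export async function checkIpAllowlist(request: Request, userId: string) {
  // First x-forwarded-for entry is the original client; these headers can be
  // spoofed unless a trusted proxy overwrites them
  const ip = request.headers.get('x-forwarded-for')?.split(',')[0].trim() ??
    request.headers.get('x-real-ip') ??
    'unknown'

  // An empty allowlist means "no IP restriction" for this user
  const allowlist = await db.ipAllowlist.findMany({
    where: { userId },
  })

  if (allowlist.length > 0) {
    const isAllowed = allowlist.some(entry =>
      entry.cidr ? isIpInCidr(ip, entry.cidr) : entry.ip === ip
    )
    if (!isAllowed) {
      return { allowed: false, reason: 'IP not in allowlist' }
    }
  }

  // Permanent blocklist (a Redis set) plus temporary blocks written by
  // detectSuspiciousActivity as `ip:blocked:<ip>` keys with a TTL
  const [inBlocklist, tempBlocked] = await Promise.all([
    redis.sismember('ip:blocklist', ip),
    redis.exists(`ip:blocked:${ip}`),
  ])
  if (inBlocklist === 1 || tempBlocked > 0) {
    return { allowed: false, reason: 'IP blocked' }
  }

  return { allowed: true, ip }
}
```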
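The code above relies on an `isIpInCidr` helper that isn't shown. Here is one possible IPv4-only sketch (an assumption; production code should also handle IPv6, for example through a vetted library). It converts addresses to 32-bit integers and checks whether the IP and the network address fall in the same block of 2^(32 - prefix) addresses. `ipv4ToInt` is a hypothetical helper name.

```typescript
// Parse dotted-quad IPv4 into an unsigned 32-bit integer, or null if invalid
function ipv4ToInt(ip: string): number | null {
  const parts = ip.split('.')
  if (parts.length !== 4) return null
  let result = 0
  for (const part of parts) {
    if (!/^\d{1,3}$/.test(part)) return null
    const octet = Number(part)
    if (octet > 255) return null
    result = result * 256 + octet // arithmetic avoids signed bitwise overflow
  }
  return result
}

// True if `ip` lies inside `cidr` (e.g. "192.168.1.0/24"); a bare IP means /32
function isIpInCidr(ip: string, cidr: string): boolean {
  const [range, bitsText, ...rest] = cidr.split('/')
  if (rest.length > 0) return false

  let bits = 32
  if (bitsText !== undefined) {
    if (!/^\d{1,2}$/.test(bitsText)) return false
    bits = Number(bitsText)
    if (bits > 32) return false
  }

  const ipInt = ipv4ToInt(ip)
  const rangeInt = ipv4ToInt(range)
  if (ipInt === null || rangeInt === null) return false

  // Same block of 2^(32 - bits) addresses means the network bits match
  const blockSize = 2 ** (32 - bits)
  return Math.floor(ipInt / blockSize) === Math.floor(rangeInt / blockSize)
}

console.log(isIpInCidr('192.168.1.42', '192.168.1.0/24')) // true
console.log(isIpInCidr('192.168.2.1', '192.168.1.0/24'))  // false
console.log(isIpInCidr('10.1.2.3', '10.0.0.0/8'))         // true
```

Invalid input (including IPv6 addresses or the `'unknown'` fallback) returns `false`, so a malformed address never matches an allowlist entry by accident.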

The final piece is monitoring. You need to detect suspicious patterns quickly.

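```typescript
// Call after each failed authentication attempt.
// `sendAlert` is the app's alerting hook (not shown).
export async function detectSuspiciousActivity(userId: string, ip: string) {
  const key = `suspicious:${userId}:${ip}:fail`

  const failCount = await redis.incr(key)
  if (failCount === 1) {
    // Start a 60s window on the first failure; don't extend it on later ones
    await redis.expire(key, 60)
  }

  // === (not >=) so the alert fires once per window instead of on every failure
  if (failCount === 5) {
    await sendAlert({
      type: 'suspicious_activity',
      userId,
      ip,
      message: `${failCount} failed attempts in 1 minute`,
    })

    await redis.setex(`ip:blocked:${ip}`, 3600, '1') // block for 1 hour
  }
}
```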

You can't solve API security all at once. Build it layer by layer:

- Network Level: Cloudflare WAF for DDoS and bot protection
- Application Level: Rate limiting, API keys, IP restrictions, CORS
- Data Level: Request validation, input sanitization, output filtering
- Monitoring Level: Anomaly detection, real-time alerts, log analysis
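One way to apply the application-level layers in code is a chain of checks where the first rejection wins, with cheap checks (such as an IP blocklist lookup) running before costlier ones (such as Argon2 key verification). This is a hypothetical sketch: `ApiRequest`, `Check`, `runLayers`, and the two example layers are illustrative names, and the in-memory blocklist stands in for the Redis lookups shown earlier.

```typescript
interface ApiRequest {
  ip: string
  apiKey: string | null
}

type CheckResult = { ok: true } | { ok: false; status: number; reason: string }
type Check = (req: ApiRequest) => Promise<CheckResult>

// Run layers in order; stop at the first one that rejects
async function runLayers(req: ApiRequest, layers: Check[]): Promise<CheckResult> {
  for (const layer of layers) {
    const result = await layer(req)
    if (!result.ok) return result
  }
  return { ok: true }
}

// Example layers (in-memory stand-ins for the real checks)
const blockedIps = new Set(['203.0.113.7'])

const ipLayer: Check = async req =>
  blockedIps.has(req.ip) ? { ok: false, status: 403, reason: 'IP blocked' } : { ok: true }

const apiKeyLayer: Check = async req =>
  req.apiKey ? { ok: true } : { ok: false, status: 401, reason: 'Missing API key' }

// Cheapest check first
const layers = [ipLayer, apiKeyLayer]
```

Ordering matters mostly for cost: a blocked IP never reaches the expensive key verification or rate-limit calls.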

And most importantly, tell clients about their limits with HTTP headers:

```
X-RateLimit-Limit: 60
X-RateLimit-Remaining: 42
X-RateLimit-Reset: 1708041600
Retry-After: 60
```

When clients know exactly when they can retry, they send far fewer pointless retries.
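On the client side, honoring these headers can be as small as turning `Retry-After` into a delay. Per the HTTP specification, `Retry-After` is either a number of seconds or an HTTP date; this hypothetical `retryDelayMs` helper handles both and returns `null` for anything else, so the caller can fall back to its own backoff. The 1-second fallback in `fetchWithRetryAfter` is an arbitrary assumption.

```typescript
// Convert a Retry-After header value into milliseconds to wait.
// Accepts delay-seconds ("60") or an HTTP date ("Wed, 21 Oct 2015 07:28:00 GMT").
function retryDelayMs(headerValue: string | null, nowMs: number = Date.now()): number | null {
  if (headerValue === null) return null
  const value = headerValue.trim()

  if (/^\d+$/.test(value)) {
    return Number(value) * 1000
  }

  const dateMs = Date.parse(value)
  if (Number.isNaN(dateMs)) return null
  // A date in the past means "retry now"
  return Math.max(0, dateMs - nowMs)
}

// Usage with fetch: on 429, wait as instructed, then retry once
async function fetchWithRetryAfter(url: string): Promise<Response> {
  const response = await fetch(url)
  if (response.status !== 429) return response

  const delay = retryDelayMs(response.headers.get('Retry-After')) ?? 1000 // assumed fallback
  await new Promise(resolve => setTimeout(resolve, delay))
  return fetch(url)
}
```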

Making an API public means opening your server to the world. From that moment, security isn't optional—it's essential. Rate limiting is the first step.