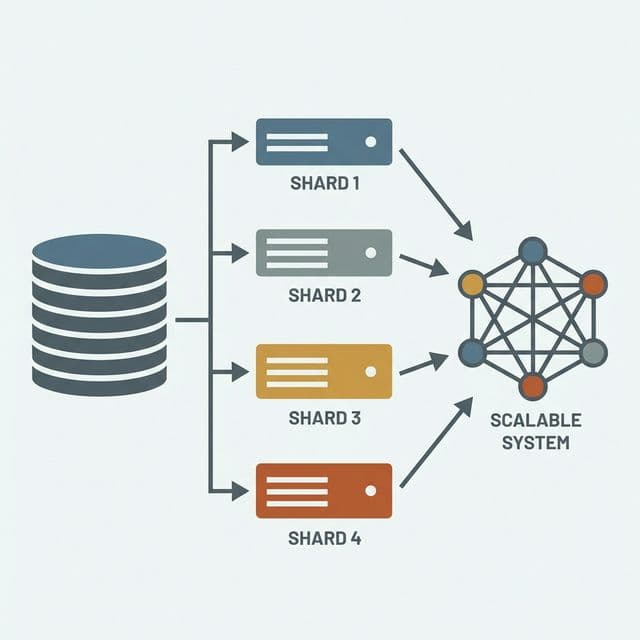

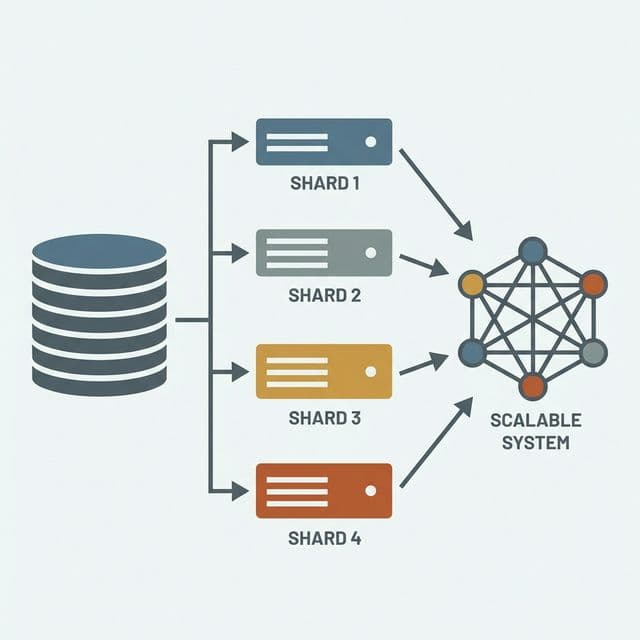

Database Sharding: How to Scale Beyond Limits

1. Prologue: "The Library is Full"

Imagine a local library so stuffed with books that the floor is cracking under the weight.

There are two solutions:

- Scale-Up: Renovate the building to 100 floors. Expensive, and the elevators will be jammed.

- Scale-Out (Sharding): Build a second and third library across town, and split the books among them.

Sharding is the technique of partitioning a massive database into pieces (shards) and distributing them across multiple servers. When data reaches petabyte scale, it's no longer optional — it's mandatory.

Why I Started Studying Sharding

At first, I thought "If the DB is slow, just add an index." And it worked — queries sped up, and I was satisfied.

But when the table crossed 100 million rows, things changed. Even indexed queries were slow. EXPLAIN showed full table scans sneaking back in. A Redis cache in front helped for cache hits, but cache misses still fell through to the database, and each one took 3 seconds to respond.

Digging through the documentation, the answer kept coming up: "It's time to consider sharding."

My initial reaction: "Split the data? Use multiple DBs?" It didn't click immediately. But studying it deeply, I understood why it's called the last resort — and why sometimes it's the only option.

2. Partitioning Types: How to Split

Vertical Partitioning (Splitting by Columns)

- Concept: Split a table by columns.

- Example: When a

User table is bloated.

- Frequently accessed columns:

ID, Name, Email → high-performance SSD server.

- Rarely accessed columns:

Biography, ProfilePicBlob → cheaper HDD server.

- Analogy: It's not like splitting a library into "Science" and "Literature" wings. It's more like keeping popular books at the front counter on the first floor and storing the rest in the basement.

- Benefit: I/O for frequently accessed data decreases, improving cache efficiency.

- Limitation: If the number of rows itself is too large, vertical partitioning alone can't solve it.

Horizontal Sharding (Splitting by Rows) — True "Sharding"

- Concept: Split a table by rows. Same schema, different data subsets.

- Example:

- Shard A: Users 1–1,000,000.

- Shard B: Users 1,000,001–2,000,000.

- Shard C: Users 2,000,001–3,000,000.

- Analogy: Building multiple library branches — authors A–G go to Branch 1, H–N to Branch 2, O–Z to Branch 3.

3. Sharding Strategies (The Key Decision)

"What criteria should we use to split the data?" This is the Shard Key decision, and it's the most critical architectural choice in sharding.

1) Range Based Sharding

- Method:

UserID 1–1000 goes to Server A, 1001–2000 to Server B.

- Pros: Easy to implement. Great for range queries (

SELECT ... WHERE date BETWEEN ...).

- Cons: Data skew (Hotspots). If new signups surge, the server handling the "latest IDs" melts while old-ID servers sit idle. During peak hours, one shard might drown while others are barely used.

2) Hash Based Sharding

- Method:

Hash(UserID) % N → determines the shard.

- Pros: Uniform data distribution. Virtually eliminates hotspot problems.

- Cons: Adding or removing servers (resharding) means the hash function changes, requiring nearly all data to be relocated. Going from 3 to 4 servers means

% 3 becomes % 4, and data that was happily sitting on the right server now needs to move elsewhere.

- Solution: Consistent Hashing (see below).

3) Geo Based Sharding

- Method: US users → US DB, EU users → EU DB, Asia users → Asia DB.

- Pros: Low latency (physically closer servers). Compliance with regulations like GDPR that require data to stay in specific regions.

- Cons: If US traffic is 10x that of EU, you get imbalance. Cross-region queries become painful.

4) Directory Based Sharding (Lookup Table)

- Method: Maintain a separate mapping table. (

UserID 1 → Shard A, UserID 55 → Shard B).

- Pros: Maximum flexibility — you can reassign any key to any shard.

- Cons: The mapping table itself becomes a bottleneck and single point of failure (SPOF). Every query must first look up where the data lives.

4. Consistent Hashing: Solving the Resharding Nightmare

The algorithm designed to fix hash-based sharding's reassignment problem. Used by DynamoDB, Cassandra, Discord, and many others.

Concept: The Hash Ring

- Arrange numbers from 0 to $2^-1$ in a circle (ring).

- Hash each server onto a point on the ring. (S1, S2, S3).

- Hash each data key onto a point on the ring. (K1, K2...).

- Each key is assigned to the first server found clockwise from its position.

The Magic

When a new server S4 joins, only the keys that fall between S4 and its predecessor need to move to S4. All other servers' data stays untouched.

In regular hash sharding, adding a server could require relocating nearly 100% of the data. With consistent hashing, on average only 1/N of the data moves (where N is the number of servers). This enables zero-downtime scaling.

Virtual Nodes

In practice, each physical server is represented by multiple "virtual nodes" on the ring. If you only have 3 servers and 3 points on the ring, distribution might be uneven. By placing 100–200 virtual nodes per server, you get much more uniform data distribution.

5. The Three Great Pain Points of Sharding

Sharding is not a silver bullet. "Introducing sharding = 10x development complexity."

1. No Cross-Shard JOINs

- Need to

JOIN a User on Shard A with an Order on Shard B? At the database level, this is impossible.

- You must query each shard separately in application code and merge the results yourself.

- This is more painful than it sounds — sorting, pagination, and aggregation all become application-level responsibilities.

2. Distributed Transactions

- Withdrawing money from Shard A and depositing into Shard B? Guaranteeing ACID across shards is extremely hard.

- You'd need slow, complex protocols like 2-Phase Commit (2PC).

- In practice, most systems accept Eventual Consistency and use compensation patterns like the Saga Pattern — a sequence of local transactions with compensating actions if one step fails.

3. No Auto-Increment IDs

- If each shard independently increments IDs (1, 2, 3...), Shard A and Shard B will both produce

ID=100. Collision!

- Solution: Use globally unique ID generators like Twitter Snowflake, which combines timestamp + machine ID + sequence number to produce IDs that are globally unique and chronologically sortable.

6. Lab: Building a Simple Sharding Router

Here's a basic hash sharding router:

class ShardingRouter {

constructor(shardMap) {

this.shards = shardMap; // { 0: 'DB_A', 1: 'DB_B', 2: 'DB_C' }

this.totalShards = Object.keys(shardMap).length;

}

getShard(key) {

const hash = this.simpleHash(key);

const shardIndex = hash % this.totalShards;

return this.shards[shardIndex];

}

simpleHash(str) {

let hash = 0;

for (let i = 0; i < str.length; i++) {

hash += str.charCodeAt(i);

}

return hash;

}

}

const router = new ShardingRouter({ 0: 'Server 1', 1: 'Server 2', 2: 'Server 3' });

console.log(router.getShard("user_100")); // Server 2

console.log(router.getShard("user_101")); // Server 1

In production, you'd replace simpleHash with a proven hash function like CRC32 or MurmurHash. A simple ASCII sum produces too many collisions for uniform distribution.

7. Architecture Pattern: The Router (Proxy)

Applications shouldn't need to know about sharding. Place a Router (Proxy) in between.

- App: Sends

SELECT * FROM User WHERE id=123 — nothing special.

- Router (e.g., Mongos, Vitess, ProxySQL):

- "ID is 123? Let me hash that... it belongs on Shard-2."

- Forwards the query to Shard-2 → receives result → returns it to the App.

- The app developer codes as if there's a single database.

The beauty of this pattern is that sharding logic is completely decoupled from application code. When you add shards or rebalance data later, the application doesn't need to change at all.

8. The Celebrity Problem (Hot Key Problem)

When traffic concentrates on a specific shard key.

- Scenario: Instagram shards its DB by

UserID.

- Problem: The shard holding Justin Bieber's or BTS's data receives millions of read requests (Hotspot). Meanwhile, the shard with my account is gathering dust.

- Solutions:

- Detect hot keys and cache them separately in Redis.

- Split the hot data into sub-shards and replicate across multiple servers.

- Add Read Replicas specifically to the celebrity shard.

9. What to Try Before Sharding

Sharding is the last resort. Before you shard, exhaust these options:

- Index Optimization: Run

EXPLAIN, add missing indexes.

- Query Optimization: Fix N+1 problems, eliminate unnecessary JOINs.

- Caching (Redis): Offload read traffic to a cache layer.

- Read Replicas: Distribute read traffic to read-only replicas.

- Scale-Up: Upgrade CPU/RAM. (Throw money at the problem.)

- Table Partitioning: Use the database's built-in partitioning to logically split large tables within a single server.

Only after all of these have been tried and the system still can't handle the load (typically when data reaches several terabytes) should you introduce sharding. Premature sharding leads to a complexity nightmare.

10. Glossary

- Sharding: Horizontally partitioning data across multiple nodes for distributed storage.

- Shard Key (Partition Key): The column used to determine which shard a row belongs to. The most important decision.

- Horizontal Scaling (Scale-Out): Adding more servers to increase capacity.

- Vertical Scaling (Scale-Up): Upgrading CPU/RAM on a single server.

- Hotshard (Hotspot): When data distribution is uneven and one shard receives disproportionate load.

- Snowflake ID: A distributed unique ID generation algorithm (developed by Twitter) that produces chronologically sortable IDs.

- Consistent Hashing: A hashing algorithm that minimizes data redistribution when nodes are added or removed.

11. FAQ & Common Questions

-

Q: When should I introduce sharding?

- A: As late as possible. Exhaust caching, read replicas, query optimization, and hardware upgrades first. Sharding is an operational beast — it multiplies development and operational complexity.

-

Q: How do you handle JOINs after sharding?

- A: Design your schema to minimize cross-shard JOINs. Denormalize data (duplicate it across shards) where needed. If a JOIN is absolutely necessary, query each shard from application code and merge results manually.

-

Q: Client-side sharding vs server-side sharding?

- A: Embedding routing logic in application code (client-side) makes the codebase messy and hard to maintain. Most teams prefer placing a proxy/router layer between the app and the database (server-side).

-

Q: How do you handle "Celebrity" hot keys?

- A: If Justin Bieber's data is on Shard 1, Shard 1 dies under load. Detect hot keys proactively, isolate them with dedicated caching or sub-sharding, and add read replicas to absorb the traffic.