AWS vs GCP vs Azure: How to Choose Your Cloud Provider

They all look the same at first, but each cloud has distinct strengths. A startup founder's guide to choosing the right cloud provider.

They all look the same at first, but each cloud has distinct strengths. A startup founder's guide to choosing the right cloud provider.

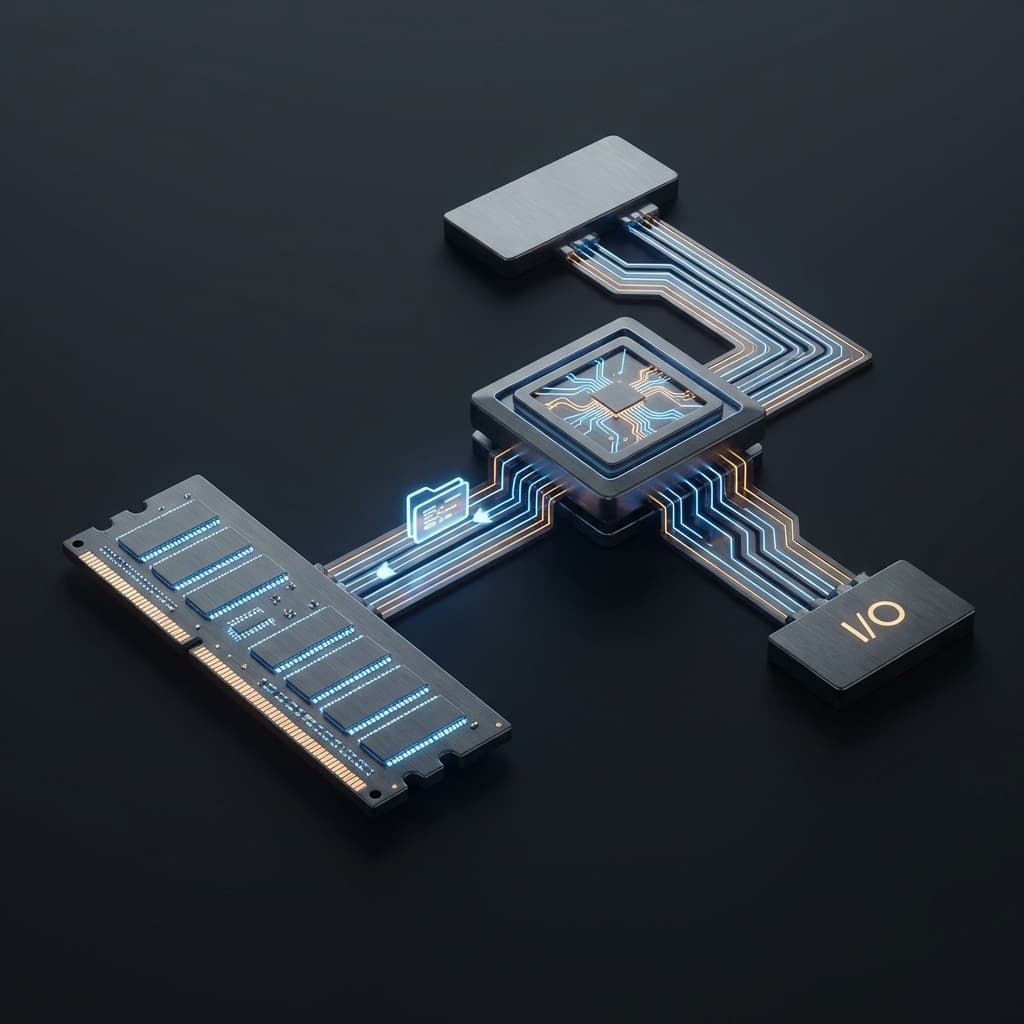

Why is the CPU fast but the computer slow? I explore the revolutionary idea of the 80-year-old Von Neumann architecture and the fatal bottleneck it left behind.

To understand why my server is so small yet powerful, we must look at the house-sized 1st gen computers. I reveal the history of hardware dieting and the secret to saving cloud costs.

How to deploy without shutting down servers. Differences between Rolling, Canary, and Blue-Green. Deep dive into Database Rollback strategies, Online Schema Changes, AWS CodeDeploy integration, and Feature Toggles.

ChatGPT answers questions. AI Agents plan, use tools, and complete tasks autonomously. Understanding this difference changes how you build with AI.

When I started my first project, choosing a cloud provider seemed trivial. EC2, Compute Engine, Azure Virtual Machines: they're all just servers, right? AWS is the biggest, so I'll go with AWS.

But once I actually compared them, they turned out to be completely different. Just as "cars" can mean sports cars, SUVs, or sedans, each cloud is built on an entirely different philosophy.

You hear the same complaints again and again. AWS billing is complex given its breadth of services. GCP is innovative but has a history of deprecating services. Azure's documentation is enterprise-oriented in ways that feel unfamiliar to solo developers and small teams.

I came to understand: choosing a cloud isn't about "which is better?" but "which fits our situation?"

AWS feels like a department store. They have everything. Over 200 services, thousands of new features added every year.

My first impression of AWS was "oh, I can Google everything." Every error has a Stack Overflow answer, every use case already has a blog post.

```typescript
// AWS SDK literally has clients for every service
import { readFile } from "node:fs/promises";
import { S3Client, PutObjectCommand } from "@aws-sdk/client-s3";
import { DynamoDBClient, PutItemCommand } from "@aws-sdk/client-dynamodb";
import { SQSClient, SendMessageCommand } from "@aws-sdk/client-sqs";
import { LambdaClient, InvokeCommand } from "@aws-sdk/client-lambda";

// You can solve almost everything within AWS
const s3 = new S3Client({ region: "ap-northeast-2" });
const fileBuffer = await readFile("image.jpg"); // local file path is illustrative

await s3.send(new PutObjectCommand({
  Bucket: "my-bucket",
  Key: "uploads/image.jpg",
  Body: fileBuffer,
}));
```

This gave me tremendous confidence. Whatever feature I needed, I knew "AWS probably has it."

The problem was pricing. AWS bills are like mazes. EC2 charges, EBS charges, data transfer charges, NAT Gateway charges... dozens of line items.

```text
# Example AWS bill (small service, could easily reach $300-400/mo)
# EC2 instances:             ~$52
# EBS volumes:               ~$18
# Data transfer (outbound):  ~$127  ← This is the killer
# NAT Gateway:               ~$45
# RDS:                       ~$63
# S3:                        ~$12
# CloudWatch Logs:           ~$8
# Other:                     ~$22
# Key lesson: Watch out for data transfer costs
```

I learned: AWS is "easy to enter, hard to optimize."
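To see where a bill like the one above actually goes, rank the line items by their share of the total. Here is a small TypeScript sketch. It uses the illustrative figures from the example bill, and the `breakdown` helper is hypothetical, not part of any AWS tool:

```typescript
// Line items from the example bill above (USD/month, illustrative figures)
const bill: Record<string, number> = {
  "EC2 instances": 52,
  "EBS volumes": 18,
  "Data transfer (outbound)": 127,
  "NAT Gateway": 45,
  "RDS": 63,
  "S3": 12,
  "CloudWatch Logs": 8,
  "Other": 22,
};

// Hypothetical helper: sum the bill and rank items by cost, largest first
export function breakdown(items: Record<string, number>) {
  const total = Object.values(items).reduce((sum, usd) => sum + usd, 0);
  const ranked = Object.entries(items)
    .map(([name, usd]) => ({ name, usd, share: usd / total }))
    .sort((a, b) => b.usd - a.usd);
  return { total, ranked };
}

const { total, ranked } = breakdown(bill);
console.log(`Total: $${total}`);
for (const { name, usd, share } of ranked) {
  console.log(`${name.padEnd(26)} $${String(usd).padStart(4)}  ${(share * 100).toFixed(1)}%`);
}
```

With these numbers the total is $347, and outbound data transfer alone accounts for about 36.6% of it. Add the NAT Gateway and the network-related charges come to $172, roughly half the bill. For a real account, AWS Cost Explorer can group costs by service, but the arithmetic is the same.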

GCP felt completely different. Like Google saying "here, use the tools we use internally."

The main reason I chose GCP was BigQuery. Data analysis became incredibly easy.

```sql
-- Analyze billions of log entries in seconds
SELECT
  user_id,
  COUNT(*) AS events,
  APPROX_QUANTILES(session_duration, 100)[OFFSET(50)] AS median_duration  -- approximate median
FROM `project.dataset.events`
WHERE DATE(timestamp) >= DATE_SUB(CURRENT_DATE(), INTERVAL 30 DAY)
GROUP BY user_id
HAVING events > 10
ORDER BY events DESC
LIMIT 1000;
-- For us this came back in about 3 seconds, even on terabytes of data.
```

AWS has Athena and Redshift, but BigQuery's speed and convenience were in a different league. The on-demand pricing model was simple: you pay for the bytes each query scans, with storage billed separately.
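Because on-demand queries are billed by bytes scanned, you can estimate a query's cost before running it. BigQuery will report how many bytes a query would process in a dry run (for example, `bq query --dry_run`). Below is a minimal sketch of the cost arithmetic. It assumes a default rate of $6.25 per TiB, passed as a parameter because rates differ by region and change over time, and it ignores the monthly free tier. The function name is hypothetical:

```typescript
// ASSUMPTION: 6.25 USD per TiB is only a default; pass the current on-demand rate for your region.
// Ignores the monthly free tier and any per-query minimums.
const BYTES_PER_TIB = 1024 ** 4;

// Hypothetical helper: estimated on-demand cost of a query from the bytes it scans
export function estimateOnDemandCostUSD(bytesScanned: number, usdPerTiB = 6.25): number {
  if (bytesScanned < 0) throw new RangeError("bytesScanned must be non-negative");
  return (bytesScanned / BYTES_PER_TIB) * usdPerTiB;
}

// A query that scans 2 TiB vs. one that only touches 50 GiB
console.log(estimateOnDemandCostUSD(2 * BYTES_PER_TIB).toFixed(2)); // prints 12.50
console.log(estimateOnDemandCostUSD(50 * 1024 ** 3).toFixed(2));    // prints 0.31
```

The practical takeaway is that cost scales with how much data a query touches. Selecting only the columns you need and filtering on partitioned columns both lower the bill directly.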

If you're using Firebase, GCP is the natural choice.

```typescript
// Firebase + GCP Cloud Functions combo
// Auth onCreate triggers are part of the v1 API; recent firebase-functions
// releases expose it under "firebase-functions/v1"
import * as functions from "firebase-functions/v1";
import { BigQuery } from "@google-cloud/bigquery";

// Create the client once so warm invocations reuse it
const bigquery = new BigQuery();

export const onUserSignup = functions.auth.user().onCreate(async (user) => {
  // Save Firebase Auth events directly to BigQuery (streaming insert)
  await bigquery.dataset("analytics").table("user_signups").insert([{
    user_id: user.uid,
    email: user.email,
    created_at: new Date().toISOString(),
  }]);
});

// Firebase, Cloud Functions, BigQuery work as one ecosystem
```

The problem was Google's "sunset" culture. When Google announced the retirement of Cloud IoT Core (it shut down in August 2023), we had to plan an urgent migration.

The lesson: GCP is great for teams wanting cutting-edge tech and innovation, but less reassuring if you need long-term stability.

Azure was confusing at first. Coming from AWS or GCP, Azure's terminology and structure felt foreign.

But working with B2B customers taught me Azure's value. Active Directory integration in particular (Azure AD, since renamed Microsoft Entra ID) was essential in enterprise environments.

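```typescript
// Authenticate with Azure AD (now Microsoft Entra ID) identities
import { DefaultAzureCredential } from "@azure/identity";
import { SecretClient } from "@azure/keyvault-secrets";

// DefaultAzureCredential tries several sources in turn, e.g. environment variables,
// a managed identity when running in Azure, or your local `az login` session
const credential = new DefaultAzureCredential();

const client = new SecretClient(
  "https://my-vault.vault.azure.net",
  credential
);

// Read a secret (the name "db-password" is a placeholder)
const secret = await client.getSecret("db-password");

// Enterprise customers love this:
// access cloud resources with existing AD accounts
```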

When a large enterprise customer asked "We only use Azure, can you deploy on Azure?", supporting Azure became a contract requirement.

If you develop in .NET, Azure is the obvious choice.

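```csharp
// Azure Functions with C# (in-process model)
using System.Threading.Tasks;
using Microsoft.Azure.WebJobs;
using Microsoft.Extensions.Logging;
using SendGrid.Helpers.Mail;

public static class OrderFunctions
{
    [FunctionName("ProcessOrder")]
    public static async Task Run(
        [QueueTrigger("orders")] Order order,
        [Table("Orders")] IAsyncCollector<OrderEntity> orderTable,
        [SendGrid] IAsyncCollector<SendGridMessage> messages,
        ILogger log)
    {
        // Queue, Table Storage, SendGrid all connected via bindings
        // (Order, OrderEntity, and CreateConfirmationEmail are app-defined)
        await orderTable.AddAsync(new OrderEntity(order));
        await messages.AddAsync(CreateConfirmationEmail(order));
    }
}
```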

Press F5 in Visual Studio to debug locally, and deploy with a single click.

| Compute | AWS | GCP | Azure |
|---|---|---|---|
| VM | EC2 | Compute Engine | Virtual Machines |
| Serverless | Lambda | Cloud Functions | Azure Functions |
| Containers | ECS/EKS | GKE | AKS |

| Database | AWS | GCP | Azure |
|---|---|---|---|
| Relational | RDS | Cloud SQL | Azure Database for MySQL/PostgreSQL |
| NoSQL | DynamoDB | Firestore | Cosmos DB |
| Analytics | Redshift | BigQuery | Synapse |

```typescript
// All three are similar, but the developer experience differs

// AWS Lambda - most mature
import type { APIGatewayProxyEvent, APIGatewayProxyResult } from "aws-lambda";

export const handler = async (event: APIGatewayProxyEvent): Promise<APIGatewayProxyResult> => {
  return { statusCode: 200, body: JSON.stringify({ ok: true }) };
};

// GCP Cloud Functions - most concise
import type { HttpFunction } from "@google-cloud/functions-framework";

export const hello: HttpFunction = (req, res) => {
  res.json({ ok: true });
};
```

```csharp
// Azure Functions - best for .NET developers
[FunctionName("Hello")]
public static IActionResult Run([HttpTrigger] HttpRequest req)
{
    return new OkObjectResult(new { ok = true });
}
```

AWS Free Tier: 12-month limited + always free (AWS revised its free tier for new accounts in 2025, so check the current terms)

GCP Free Tier: Always Free + $300 credit (90 days)

Azure Free Tier: $200 credit (30 days) + 12-month free + always free

Credits turned out to be crucial. If you're an early-stage startup, definitely take advantage of credit programs.

We survived a year on $5,000 GCP credits.

The scariest thing was vendor lock-in. Once you choose, it's hard to switch.

The key was creating an abstraction layer.

```typescript
// Bad: direct dependency on cloud SDK
import { S3Client, PutObjectCommand } from "@aws-sdk/client-s3";

export async function uploadFile(file: Buffer) {
  const s3 = new S3Client({ region: "us-east-1" });
  await s3.send(new PutObjectCommand({ /* ... */ }));
}
```

```typescript
// Good: abstraction layer
interface StorageService {
  upload(key: string, data: Buffer): Promise<void>;
  download(key: string): Promise<Buffer>;
}

class S3Storage implements StorageService {
  async upload(key: string, data: Buffer) { /* AWS implementation */ }
  async download(key: string) { /* AWS implementation */ }
}

class GCSStorage implements StorageService {
  async upload(key: string, data: Buffer) { /* GCP implementation */ }
  async download(key: string) { /* GCP implementation */ }
}

// App code depends only on the StorageService interface
export function createStorage(): StorageService {
  return process.env.CLOUD === "aws"
    ? new S3Storage()
    : new GCSStorage();
}
```

This way, you can switch clouds later while keeping core logic intact.
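Coding against `StorageService` has another payoff: tests and local development don't need a cloud account at all. The sketch below uses a hypothetical `InMemoryStorage` class and a hypothetical `saveAvatar` function that stands in for app logic:

```typescript
// Same interface as above
interface StorageService {
  upload(key: string, data: Buffer): Promise<void>;
  download(key: string): Promise<Buffer>;
}

// Hypothetical in-memory backend for tests and local development
export class InMemoryStorage implements StorageService {
  private objects = new Map<string, Buffer>();

  async upload(key: string, data: Buffer): Promise<void> {
    // Store a copy so later changes to the caller's buffer don't leak in
    this.objects.set(key, Buffer.from(data));
  }

  async download(key: string): Promise<Buffer> {
    const data = this.objects.get(key);
    if (data === undefined) throw new Error(`No object at key: ${key}`);
    return Buffer.from(data); // return a copy as well
  }
}

// Hypothetical app logic: written against the interface, not a specific cloud
export async function saveAvatar(storage: StorageService, userId: string, image: Buffer): Promise<string> {
  const key = `avatars/${userId}.jpg`;
  await storage.upload(key, image);
  return key;
}
```

Because `saveAvatar` only sees the interface, the same function runs unchanged against `S3Storage`, `GCSStorage`, or this in-memory fake.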

Honestly, nowadays the "big three" aren't the only options. Especially for web applications, Vercel or Cloudflare might be better.

```typescript
// Vercel Edge Functions - runs on the edge worldwide
export const config = { runtime: "edge" };

export default async function handler(req: Request) {
  // Low latency: runs in the edge region closest to the user
  return new Response(JSON.stringify({ ok: true }), {
    headers: { "content-type": "application/json" },
  });
}
```

If you're using Next.js, Vercel was overwhelmingly convenient. Deployment was just a git push, and many performance optimizations (such as caching and image optimization) came built in.

Cloudflare Workers were genuinely fast. Code runs in Cloudflare data centers in over 300 cities worldwide, so each request is handled close to the user.

```typescript
// Cloudflare Workers
export default {
  async fetch(request: Request): Promise<Response> {
    // Runs in the Cloudflare data center nearest the user;
    // request.cf.colo is that data center's airport code (e.g. "ICN")
    const url = new URL(request.url);
    return new Response(`Hello from ${request.cf?.colo}`);
  },
};
```

Pricing was attractive too. Workers were free up to 100k requests/day.
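To judge whether a daily allowance like 100k requests/day covers your traffic, convert it to an average request rate. The sketch below is quick arithmetic with hypothetical helper names. Real traffic is bursty, so peak rates will sit well above the average:

```typescript
// Seconds in a day, used to convert daily quotas into per-second rates
const SECONDS_PER_DAY = 24 * 60 * 60; // 86,400

// Hypothetical helper: average requests/second implied by a daily allowance
export function averageRps(requestsPerDay: number): number {
  return requestsPerDay / SECONDS_PER_DAY;
}

// Hypothetical helper: does a steady average load fit under a daily quota?
// ASSUMPTION: the 100,000/day default mirrors the free-plan figure quoted above; verify current limits.
export function fitsDailyQuota(avgRps: number, quotaPerDay = 100_000): boolean {
  return avgRps * SECONDS_PER_DAY <= quotaPerDay;
}

console.log(averageRps(100_000).toFixed(2)); // prints 1.16
console.log(fitsDailyQuota(1)); // true: 86,400 requests/day
console.log(fitsDailyQuota(2)); // false: 172,800 requests/day
```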

We ended up going hybrid: frontend on Vercel, backend API on GCP, data analytics on BigQuery.

Summarizing my experience, here's a framework for choosing:

Does your team already know a cloud well?

└─ YES → Use that. Learning costs are the highest.

└─ NO → Continue with the questions below

Is data analytics core?

└─ YES → GCP (BigQuery)

Using .NET? Or many B2B customers?

└─ YES → Azure

Using Kubernetes?

└─ YES → GCP (GKE)

Is enterprise customer support important?

└─ YES → AWS (largest ecosystem)

Just a Next.js web app?

└─ YES → Vercel

Need global API + ultra-low latency?

└─ YES → Cloudflare Workers

Early startup (<$1k/month)

└─ GCP/Vercel/Cloudflare (generous free tiers)

Mid-size ($1k-10k/month)

└─ GCP or AWS (AWS if you have time to optimize)

Large scale ($10k+/month)

└─ AWS (room for negotiation, Enterprise Support is valuable)

Will this service exist in 5 years?

└─ AWS > Azure > GCP (my ranking by track record of keeping services alive)

Need global expansion?

└─ GCP/Cloudflare (built around a global network)

Need multi-cloud strategy?

└─ Terraform + abstraction layer required
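The tech-stack questions in the framework above can also be written as a function, which makes the order of precedence explicit. This is only one possible reading. The tree doesn't say whether the questions are first-match, so this sketch assumes every YES adds a candidate. The `StackAnswers` field names are illustrative:

```typescript
type Choice = "Your existing cloud" | "GCP" | "Azure" | "AWS" | "Vercel" | "Cloudflare Workers";

// Illustrative answer shape for the framework's yes/no questions
interface StackAnswers {
  teamKnowsACloudWell: boolean;
  analyticsIsCore: boolean;
  dotnetOrManyB2BCustomers: boolean;
  usesKubernetes: boolean;
  enterpriseSupportMatters: boolean;
  justANextjsWebApp: boolean;
  needsGlobalLowLatencyApi: boolean;
}

export function recommend(a: StackAnswers): Choice[] {
  // Step 1: existing expertise short-circuits everything else
  if (a.teamKnowsACloudWell) return ["Your existing cloud"];

  // Otherwise every YES contributes a candidate
  const picks: Choice[] = [];
  if (a.analyticsIsCore) picks.push("GCP");          // BigQuery
  if (a.dotnetOrManyB2BCustomers) picks.push("Azure");
  if (a.usesKubernetes) picks.push("GCP");           // GKE
  if (a.enterpriseSupportMatters) picks.push("AWS"); // largest ecosystem
  if (a.justANextjsWebApp) picks.push("Vercel");
  if (a.needsGlobalLowLatencyApi) picks.push("Cloudflare Workers");
  return Array.from(new Set(picks)); // drop duplicates, keep first-seen order
}

// Example: a data-heavy team with no prior cloud experience that runs Kubernetes
console.log(recommend({
  teamKnowsACloudWell: false,
  analyticsIsCore: true,
  dotnetOrManyB2BCustomers: false,
  usesKubernetes: true,
  enterpriseSupportMatters: false,
  justANextjsWebApp: false,
  needsGlobalLowLatencyApi: false,
})); // logs [ 'GCP' ]
```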

After comparing the characteristics of all three clouds, here's how I'd summarize it.

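Choose AWS if: you want the broadest service catalog and the largest ecosystem, you operate at large scale, or long-term service stability matters most.

Choose GCP if: data analytics (BigQuery) is core, you run Kubernetes on GKE, or you're already on Firebase.

Choose Azure if: you develop in .NET, or your enterprise customers rely on Active Directory and require Azure.

Choose Vercel/Cloudflare if: you're shipping a Next.js web app or need a global, low-latency API at the edge.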

The most important lesson: There's no "best cloud." There's only the cloud that fits your team and product.

I initially chose AWS because it was the biggest. But now I use Vercel for frontend, GCP for backend, BigQuery for data, Cloudflare for CDN. Multi-cloud looks complex, but using the best tool for each domain ended up being simpler.

If you're struggling with cloud choice, start small. Build a prototype with free tiers and experience it firsthand. That's worth more than a hundred comparison articles.