RNN and LSTM: Sequential Data Processing

Understanding RNN and LSTM principles through practical project experience

When I started learning Machine Learning, the first thing I wanted to try was, of course, 'Stock Prediction'. "Predicting the future based on past data." It seemed like the perfect problem fitting the definition of ML.

Initially, I used a CNN (Convolutional Neural Network). It is designed for image processing, but I figured a price chart is an image after all. The result was disastrous. Accuracy was below 50%, worse than flipping a coin.

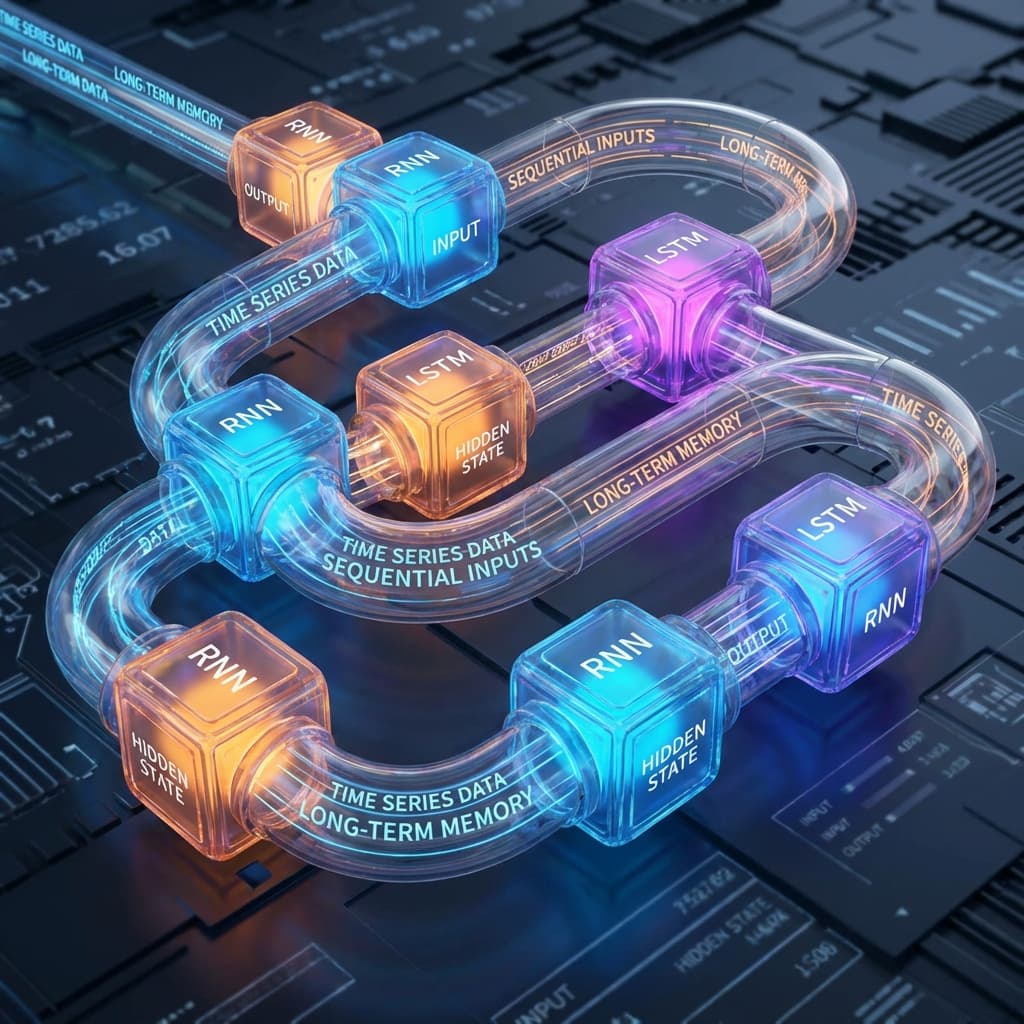

A senior developer looked at me with pity and said: "Hey, order matters in stock prices. What happened yesterday affects today. CNN doesn't know that. You need to use RNN (Recurrent Neural Network)."

That's when I first realized: Data has a 'flow of time'.

Opening the textbook, I saw RNN diagrams with arrows pointing back to themselves. "Functions take input and spit out output. Why does it go back in?" Intuitively, it didn't click.

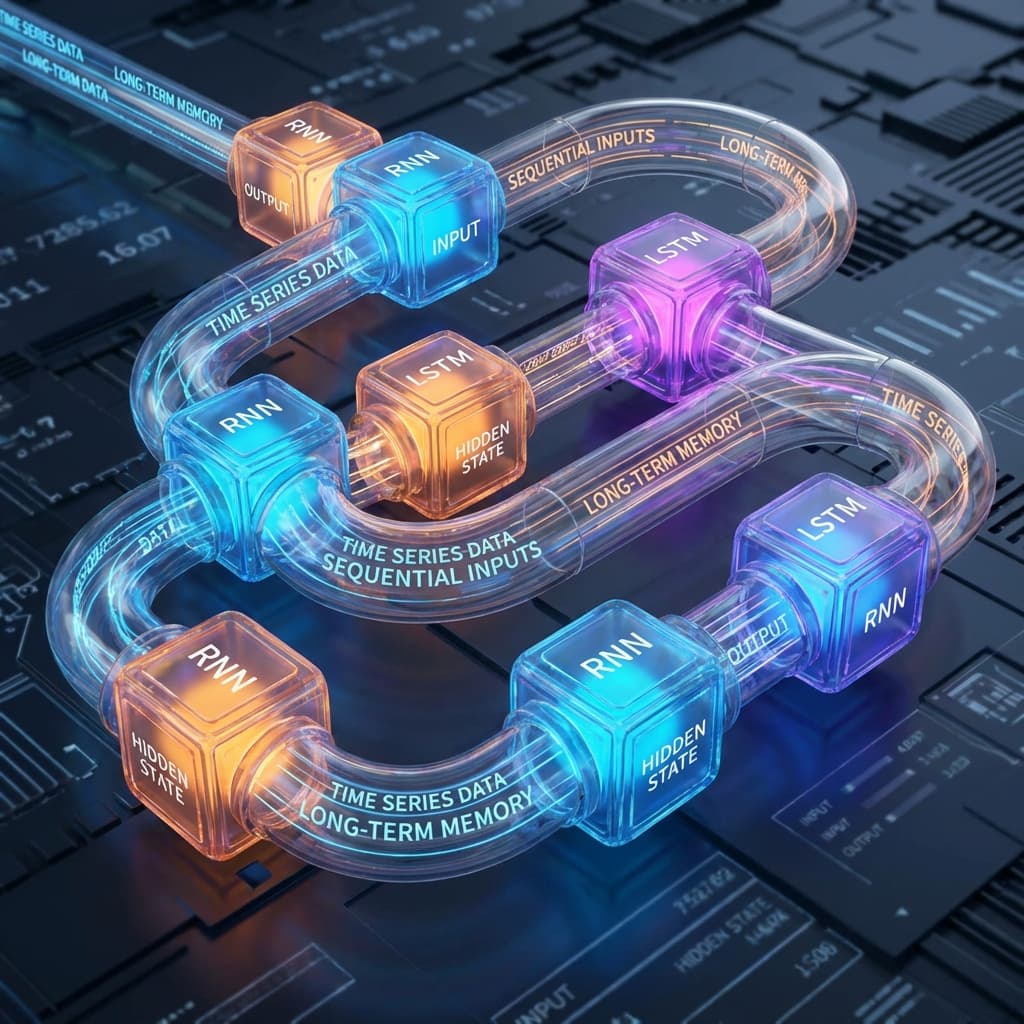

Just as I thought I grasped RNN, LSTM popped up. Input Gate, Forget Gate, Output Gate... formulas spanned pages. "Can't I just use RNN? Why this complexity?"

They said an RNN can't remember long sentences, but looking at the math, I couldn't see why not.

The decisive analogy was "Diary Writing."

RNN is a person who writes a diary every night. But there's a rule. "When writing today's diary, you must reference ONLY yesterday's diary."

Passing 'Today's Memory (Hidden State)' to tomorrow (Recurrent) is the core of RNN.

But what about a diary from a year ago? Because each entry references only the previous day, the content from 365 days ago is diluted a little at every step until it disappears. This is the Vanishing Gradient problem.

LSTM doesn't just continue the diary; it acts like a person who "Writes important info on Post-it notes (Cell State) and sticks them on the desk."

This analogy helped me realize why LSTM is capable of Long-Term Memory. Important info travels on the highway (Cell State).

    Input(x_t) → [RNN Cell] → Output(h_t)
                    ↑ ↓
             Prev Memory(h_t-1)

    # Simple RNN logic
    import numpy as np

    def rnn_cell(current_input, prev_memory, W_x, W_h, bias):
        # Combine current input and previous memory (weighted sum).
        # W_x and W_h are weight matrices; bias is a vector.
        combined = W_x @ current_input + W_h @ prev_memory + bias
        # Squash every value into the range (-1, 1) with tanh
        current_memory = np.tanh(combined)
        return current_memory

This current_memory becomes the Output and the Hidden State passed to the next step.
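To make the "pass today's memory to tomorrow" idea concrete, here is a minimal sketch that feeds a short sequence through the cell one step at a time. It uses plain scalars instead of matrices, and the weights (`w_x`, `w_h`, `bias`), the `run_rnn` helper, and the input values are all made up for illustration.

```python
import math

def rnn_cell(current_input, prev_memory, w_x, w_h, bias):
    """One RNN step with a scalar input and a scalar memory."""
    return math.tanh(w_x * current_input + w_h * prev_memory + bias)

def run_rnn(inputs, w_x=0.5, w_h=0.8, bias=0.0):
    """Feed a sequence through the cell, carrying the memory forward."""
    memory = 0.0  # no memory exists before the first step
    history = []
    for x_t in inputs:
        # Today's memory depends on today's input AND yesterday's memory
        memory = rnn_cell(x_t, memory, w_x, w_h, bias)
        history.append(memory)
    return history

prices = [0.1, 0.4, -0.2, 0.3]  # made-up, already-normalized values
hidden_states = run_rnn(prices)
reversed_states = run_rnn(list(reversed(prices)))
print(hidden_states)
print(reversed_states)
```

Feeding the same numbers in reverse order ends in a different final state, which is exactly the order sensitivity the CNN lacked.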

Fatal Flaw: Vanishing Gradient

During Backpropagation, the gradient is multiplied by one more factor for every time step it travels back. Each factor includes the tanh derivative, which is at most 1 (and usually smaller), so the product keeps shrinking: multiplying by 0.9 one hundred times leaves about 0.000027. Information from the distant past fails to influence learning.

Essentially, a "dementia phenomenon" occurs, where early inputs have almost no impact later.
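The arithmetic is easy to check. The sketch below is a deliberately simplified scalar model: it assumes the same pre-activation value at every step and a single recurrent weight `w_h`, which a real network would not have. The function names are my own.

```python
import math

def tanh_derivative(z):
    """d/dz tanh(z) = 1 - tanh(z)^2, which never exceeds 1."""
    return 1.0 - math.tanh(z) ** 2

def gradient_through_time(steps, w_h=1.0, pre_activation=0.5):
    """Product of the per-step factor w_h * tanh'(z) over `steps` steps.

    Simplifying assumption: every step sees the same pre-activation.
    """
    factor = w_h * tanh_derivative(pre_activation)
    return factor ** steps

shrunk = 0.9 ** 100
print(shrunk)  # roughly 2.66e-05

for steps in (1, 10, 50, 100):
    print(steps, gradient_through_time(steps))
```

Even with a per-step factor fairly close to 1, the signal reaching step 1 from step 100 is tiny compared with the signal from step 99.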

To solve this, Hochreiter and Schmidhuber invented LSTM in 1997 (Not Geoff Hinton). The core is a "Structure that Adds (+)". Adding instead of multiplying preserves values without shrinking.

Inside an LSTM cell, there are 3 Gatekeepers. They use the Sigmoid function (0~1) to decide how much to open the door.

Forget Gate: f_t = sigmoid(...) -> 0 deletes, 1 keeps.

Input Gate: i_t = sigmoid(...) -> Decides how much to store.

Output Gate: o_t = sigmoid(...) -> Decides the value to send to the next layer/step.

LSTM has a Cell State (C_t) flowing alongside the Hidden State (h_t).

This is a sort of Cheat Sheet. It passes through time with minimal interference from the gates.

# Core LSTM Formula (Intuitive)

Cell_State = (Old_Memory * Forget_Rate) + (New_Memory * Remember_Rate)

Because of the Addition (+) operation here, gradients can flow far back without vanishing.
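Here is a minimal sketch of one LSTM step following the standard gate equations, written with scalars so every line maps onto the intuitive formula above. The parameter dictionary, its gate names, and all numeric values are hypothetical, chosen only to show the effect.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_cell(x_t, h_prev, c_prev, params):
    """One LSTM step with scalar input, hidden state, and cell state.

    `params` maps a gate name to hypothetical (w_x, w_h, bias) values.
    """
    def pre(name):
        w_x, w_h, bias = params[name]
        return w_x * x_t + w_h * h_prev + bias

    f_t = sigmoid(pre("forget"))             # Forget_Rate: keep old memory?
    i_t = sigmoid(pre("input"))              # Remember_Rate: store new memory?
    o_t = sigmoid(pre("output"))             # how much of the cell to expose
    candidate = math.tanh(pre("candidate"))  # New_Memory proposal

    c_t = f_t * c_prev + i_t * candidate     # the additive update
    h_t = o_t * math.tanh(c_t)
    return h_t, c_t

# Gates set to "keep almost everything, write almost nothing"
params = {
    "forget": (0.0, 0.0, 10.0),
    "input": (0.0, 0.0, -10.0),
    "output": (0.5, 0.5, 0.0),
    "candidate": (0.5, 0.5, 0.0),
}

h, c = 0.0, 1.0  # start with a "Post-it note" of value 1.0
for _ in range(100):
    h, c = lstm_cell(0.1, h, c, params)
print(c)  # stays close to 1.0 after 100 steps
```

With the forget gate held near 1, the cell state survives 100 steps almost untouched, whereas a plain chain of multiplications by 0.9 would have shrunk it to nearly zero. In a trained network the gate values are learned, so this preservation is something the model can choose rather than a guarantee.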

Let's code a stock predictor in PyTorch. Everyone dreams of riches with this, though reality is harsh. (I was there...)

    import torch
    import torch.nn as nn

    class StockPredictor(nn.Module):
        def __init__(self, input_size, hidden_size, num_layers=2):
            super().__init__()
            self.hidden_size = hidden_size
            self.num_layers = num_layers

            # LSTM Layer
            # batch_first=True: Input data is (batch, seq, feature)
            self.lstm = nn.LSTM(input_size, hidden_size, num_layers, batch_first=True)

            # Linear layer for final prediction
            self.fc = nn.Linear(hidden_size, 1)

        def forward(self, x):
            # x shape: (batch_size, sequence_length, input_size)

            # Initialize Hidden State and Cell State to 0
            h0 = torch.zeros(self.num_layers, x.size(0), self.hidden_size, device=x.device)
            c0 = torch.zeros(self.num_layers, x.size(0), self.hidden_size, device=x.device)

            # Pass through LSTM
            # out shape: (batch_size, seq_len, hidden_size)
            out, _ = self.lstm(x, (h0, c0))

            # We only need the info from the 'last day'
            out = out[:, -1, :]

            # Final prediction
            out = self.fc(out)
            return out

    # Instantiate
    # input_size=5 (Open, High, Low, Close, Volume)
    model = StockPredictor(input_size=5, hidden_size=64)

I remember wasting 3 hours on dimension errors because I missed the batch_first=True option. The PyTorch default is (seq, batch, feature), which differs from the (batch, seq, feature) layout that data pipelines usually produce.
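A quick shape check would have saved me those hours. This sketch assumes PyTorch is installed and uses random data with arbitrary sizes; it only compares the tensor shapes produced with and without batch_first=True.

```python
import torch
import torch.nn as nn

batch, seq_len, features, hidden = 8, 30, 5, 64
x = torch.randn(batch, seq_len, features)  # (batch, seq, feature)

# batch_first=True: the LSTM reads x as (batch, seq, feature)
lstm_bf = nn.LSTM(features, hidden, batch_first=True)
out_bf, (h_n, c_n) = lstm_bf(x)
print(out_bf.shape)  # torch.Size([8, 30, 64])
print(h_n.shape)     # torch.Size([1, 8, 64])

# Default (batch_first=False): the LSTM expects (seq, batch, feature),
# so the same data has to be transposed first
lstm_default = nn.LSTM(features, hidden)
out_default, _ = lstm_default(x.transpose(0, 1))
print(out_default.shape)  # torch.Size([30, 8, 64])
```

Note that the returned hidden and cell states keep the shape (num_layers, batch, hidden) in both cases; batch_first only changes the layout of the input and of `out`.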

RNNs and LSTMs were once the Kings of Translation, Speech Recognition, and Time-Series, but the game changed after Google's "Attention Is All You Need" paper in 2017. Using RNN/LSTM for NLP in 2025 gets you questioned, "Why?"

Limitations of LSTM:

To compute step t, step t-1 must be finished. The time steps cannot be processed in parallel, so training is slow even with 100 GPUs.

It turned out that "Looking up when needed (Attention) is superior to Remembering (Memory)."

However, for Time Series or Sensor Data Analysis, where data arrives as a real-time stream on lightweight Edge devices, LSTM and GRU (a lighter LSTM variant) are still active players. They are light and fast.

RNN is a neural network that remembers previous states sequentially, while LSTM evolves it by solving the Vanishing Gradient problem via the Cell State to keep long-term memories. While the NLP throne has been ceded to the Transformer, LSTM remains a powerful tool in Time Series prediction and lightweight models.