PostgreSQL Advanced Features: JSONB, CTE, and Window Functions

Beyond basic SELECT: JSONB, CTEs, and Window Functions dramatically expand what you can do in a single SQL query.

Beyond basic SELECT: JSONB, CTEs, and Window Functions dramatically expand what you can do in a single SQL query.

Foundation of DB Design. Splitting tables to prevent Anomalies. 1NF, 2NF, 3NF explained simply.

Binary Tree is for RAM. Disk is slow. B-Tree minimizes Disk I/O by being short and fat. Difference between B-Tree and B+Tree, and why databases love them.

When you break a monolith into microservices, you lose ACID transactions. How do you ensure data consistency across boundaries? We explore the limitations of Two-Phase Commit (2PC) and dive deep into the Saga Pattern, Event Consistency, and practical implementation strategies like Choreography vs Orchestration.

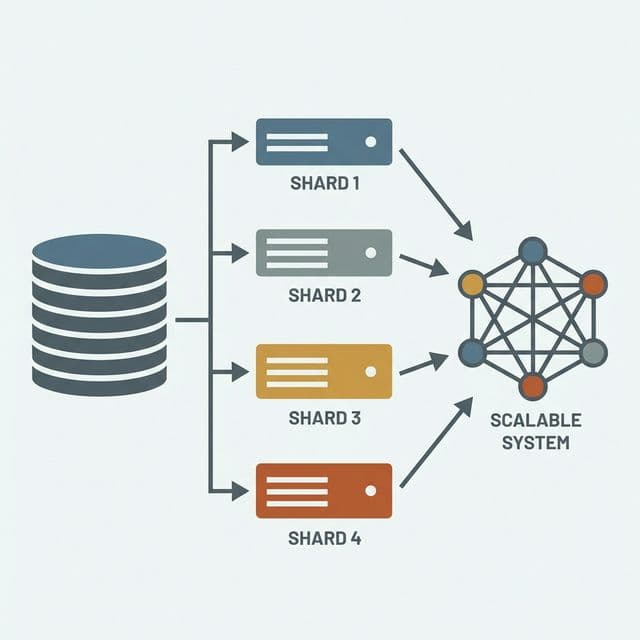

When Scale-Up fails, Scale-Out is the answer. Vertical vs Horizontal Partitioning, Shard Key selection strategies (Geo vs Hash), and the complexity of Distributed Transactions.

I used to think of PostgreSQL as just a MySQL alternative. I stuck to SELECT, INSERT, UPDATE, DELETE, and handled all complex logic in application code. To analyze user activity logs, I'd fetch everything and filter/aggregate in JavaScript. For hierarchical comments, I wrote recursive functions. For rankings, I sorted arrays and added indices.

Then one day, diving into legacy code, I found a single SQL query doing what took me 50 lines to implement. WITH RECURSIVE fetched entire comment trees in one go. ROW_NUMBER() OVER(PARTITION BY ...) ranked items by group. JSONB operators manipulated nested JSON data like it was NoSQL.

That's when it hit me: I'd been using maybe 5% of PostgreSQL. Just understanding JSONB, CTEs, and Window Functions completely transformed how I thought about databases.

The first shock was JSONB. I needed to add custom fields to user profiles. Adding columns cluttered the schema, but separate tables meant complex JOINs. JSONB solved this dilemma perfectly.

-- Add JSONB column to users table

CREATE TABLE users (

id SERIAL PRIMARY KEY,

email VARCHAR(255) NOT NULL,

metadata JSONB DEFAULT '{}'::jsonb

);

-- Store varied metadata structures

INSERT INTO users (email, metadata) VALUES

('founder@startup.com', '{"role": "founder", "preferences": {"theme": "dark", "language": "ko"}}'),

('developer@company.com', '{"role": "developer", "skills": ["PostgreSQL", "TypeScript"], "experience_years": 5}');

-- Query JSON data with operators

SELECT * FROM users WHERE metadata @> '{"role": "founder"}';

SELECT * FROM users WHERE metadata ? 'skills';

-- Aggregate into JSON arrays

SELECT

metadata ->> 'role' AS role,

jsonb_agg(email) AS members

FROM users

GROUP BY metadata ->> 'role';

The game-changer is GIN indexes. JSONB queries can use indexes too.

CREATE INDEX idx_users_metadata ON users USING GIN (metadata);

-- Now @>, ?, ?&, ?| operators use the index

EXPLAIN ANALYZE

SELECT * FROM users WHERE metadata @> '{"preferences": {"theme": "dark"}}';

It felt like getting MongoDB's flexibility while keeping PostgreSQL's transactions and consistency. Schema-free fields where needed, strict columns where required—a perfect hybrid approach.

Common Table Expressions start with WITH and create temporary result sets. Initially I thought "isn't this just subqueries?" but the readability improvement was dramatic.

-- Complex nested subqueries

SELECT

u.email,

(SELECT COUNT(*) FROM posts p WHERE p.user_id = u.id) AS post_count,

(SELECT AVG(views) FROM posts p WHERE p.user_id = u.id) AS avg_views

FROM users u

WHERE (SELECT COUNT(*) FROM posts p WHERE p.user_id = u.id) > 5;

-- Refactored with CTE - much cleaner

WITH user_stats AS (

SELECT

user_id,

COUNT(*) AS post_count,

AVG(views) AS avg_views

FROM posts

GROUP BY user_id

)

SELECT

u.email,

us.post_count,

us.avg_views

FROM users u

JOIN user_stats us ON u.id = us.user_id

WHERE us.post_count > 5;

But the real power is Recursive CTEs. Hierarchical data without this is painful.

-- Recursive CTE for comment trees

WITH RECURSIVE comment_tree AS (

-- Base case: top-level comments

SELECT

id, parent_id, content,

ARRAY[id] AS path,

1 AS depth

FROM comments

WHERE parent_id IS NULL AND post_id = 123

UNION ALL

-- Recursive case: find children

SELECT

c.id, c.parent_id, c.content,

ct.path || c.id,

ct.depth + 1

FROM comments c

JOIN comment_tree ct ON c.parent_id = ct.id

)

SELECT * FROM comment_tree ORDER BY path;

Previously I'd fetch top-level comments then recursively query for children—classic N+1 problem. Recursive CTEs fetch the entire tree in one query. Works for org charts, category hierarchies, file systems—anything hierarchical.

Window Functions completely changed my SQL perspective. GROUP BY returns one result per group, but Window Functions attach group-based calculations to each row.

-- Rank posts by views within each category

SELECT

title,

category,

views,

ROW_NUMBER() OVER(PARTITION BY category ORDER BY views DESC) AS rank_in_category,

RANK() OVER(ORDER BY views DESC) AS global_rank

FROM posts;

PARTITION BY means "divide by this column but show results for every row." Getting top 3 per category becomes trivial.

WITH ranked_posts AS (

SELECT

*,

ROW_NUMBER() OVER(PARTITION BY category ORDER BY views DESC) AS rn

FROM posts

)

SELECT * FROM ranked_posts WHERE rn <= 3;

LAG and LEAD let you reference previous/next rows. Calculating time between user actions transforms:

-- Calculate time since last action

SELECT

user_id,

action,

created_at,

created_at - LAG(created_at) OVER(PARTITION BY user_id ORDER BY created_at) AS time_since_last_action

FROM user_activities

ORDER BY user_id, created_at;

I used to fetch all data and process in application code. Window Functions do it right in the database.

Frequently querying specific JSONB fields got tedious. Generated Columns gave me JSONB flexibility with regular column performance.

CREATE TABLE products (

id SERIAL PRIMARY KEY,

name VARCHAR(255) NOT NULL,

data JSONB NOT NULL,

price NUMERIC GENERATED ALWAYS AS ((data->>'price')::numeric) STORED,

category VARCHAR(100) GENERATED ALWAYS AS (data->>'category') STORED

);

CREATE INDEX idx_products_price ON products(price);

-- Query like a regular column

SELECT * FROM products WHERE price > 10000 AND category = 'electronics';

Before ON CONFLICT, implementing "update if exists, insert if not" meant SELECT-then-branch logic with race conditions. UPSERT changed everything.

-- Daily user stats with UPSERT

INSERT INTO daily_user_stats (user_id, date, action_count, last_action_at)

VALUES (123, '2026-02-03', 1, NOW())

ON CONFLICT (user_id, date)

DO UPDATE SET

action_count = daily_user_stats.action_count + EXCLUDED.action_count,

last_action_at = EXCLUDED.last_action_at;

One line with transactional safety replacing complex INSERT/UPDATE logic.

Actual requirement: "Show top 5 most-viewed posts per category from the last 30 days, with daily view trends as JSON."

Application code would need multiple queries and complex logic. PostgreSQL's advanced features did it in one query:

WITH recent_views AS (

SELECT

post_id,

DATE(created_at) AS view_date,

COUNT(*) AS daily_views

FROM post_views

WHERE created_at > NOW() - INTERVAL '30 days'

GROUP BY post_id, DATE(created_at)

),

post_totals AS (

SELECT

post_id,

SUM(daily_views) AS total_views

FROM recent_views

GROUP BY post_id

),

ranked_posts AS (

SELECT

p.id, p.title, p.category,

pt.total_views,

ROW_NUMBER() OVER(PARTITION BY p.category ORDER BY pt.total_views DESC) AS rank

FROM posts p

JOIN post_totals pt ON p.id = pt.post_id

)

SELECT

rp.category,

rp.title,

rp.total_views,

jsonb_agg(

jsonb_build_object('date', rv.view_date, 'views', rv.daily_views)

ORDER BY rv.view_date

) AS daily_trend

FROM ranked_posts rp

LEFT JOIN recent_views rv ON rp.id = rv.post_id

WHERE rp.rank <= 5

GROUP BY rp.category, rp.title, rp.total_views, rp.rank

ORDER BY rp.category, rp.rank;

CTEs break down complex logic, Window Functions rank results, jsonb_agg bundles time series data. This single query replaced dozens of lines of JavaScript and multiple database round-trips.

What I finally understood: PostgreSQL isn't just data storage, it's a powerful data processing engine. Instead of complex aggregation and transformation in application code, let the database do what it's optimized for.

After mastering these three, my answer to "can SQL do this?" changed from "probably not" to "almost certainly yes." Letting the database do what databases do best simplified application code and improved performance.

These PostgreSQL advanced features aren't optional extras—they're essential tools. If you're only doing basic CRUD, you're using maybe 5% of the engine's power.