Convolutional Neural Networks (CNN): The Visual Cortex of AI

1. How Do Machines "See" the World?

Evolution took millions of years to develop the human eye and the visual cortex.

When you look at a picture of a Panda, you instantly recognize it. You don't consciously analyze every strand of black and white fur, nor do you calculate the angles of its ears. Your brain processes the visual signals hierarchically—identifying edges, curves, colors, and finally, assembling them into the concept of a "Panda."

To a traditional computer, however, an image is just a massive grid of numbers. An uncompressed 1000x1000 color photo is a grid of 3 million integers: one value from 0 to 255 for each of the red, green, and blue channels of every pixel.

Before CNNs, computer scientists tried to solve computer vision by flattening these pixels into a single long line and feeding them into standard neural networks (Dense Networks).

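This approach scaled poorly and generalized badly because it discarded spatial structure.

Once a 1000-pixel-wide image is flattened row by row, the pixel at (row 10, column 10) sits 1,000 positions away from the pixel directly below it at (row 11, column 10), even though the two are neighbors in the image. A dense network also gives every input position its own independent weights, so nothing tells it which pixels are close together. The computer couldn't understand shapes, proximity, or geometry. It was like trying to understand a painting by reading a description of every paint stroke in alphabetical order.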
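
The loss of neighborhood information can be checked with a few lines of NumPy. This is a minimal sketch, assuming (row, column) coordinates and row-major flattening of a single channel; the helper name `flat_index` is made up for illustration.

```python
import numpy as np

# Image size taken from the example in the text: 1000x1000 pixels, 3 color channels.
height, width, channels = 1000, 1000, 3
image = np.zeros((height, width, channels), dtype=np.uint8)
print(image.size)  # 3000000 values in total

def flat_index(row, col, width):
    """Position of pixel (row, col) after row-major flattening of one channel."""
    return row * width + col

center = flat_index(10, 10, width)
right = flat_index(10, 11, width)   # horizontal neighbor
below = flat_index(11, 10, width)   # vertical neighbor

print(right - center)  # 1    -> still adjacent after flattening
print(below - center)  # 1000 -> a full row apart after flattening
```

Horizontal neighbors stay adjacent, but vertical neighbors end up a whole row apart, and the flat vector itself carries no hint that they were ever next to each other.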

Enter CNN (Convolutional Neural Network).

Instead of flattening the image, CNNs process it as a 2D grid and keep neighboring pixels together. They scan the image using small filters, loosely mimicking how the visual cortex responds to local regions of a scene. It was a revolution inspired by biology.

2. The Core Mechanism: Convolution (The Filter)

The true magic of object recognition lies in the mathematical operation called Convolution.

Imagine you have a small flashlight. In CNN terms, this is a Filter (or Kernel), typically a 3x3 or 5x5 grid of weight numbers.

You slide this flashlight across the entire input image, from top-left to bottom-right, pixel by pixel. This sliding action is the "convolution."

At every step, the filter performs a dot product operation with the pixels underneath it.

- Pattern Matching: If the pixel pattern under the flashlight matches the pattern of the filter, the math produces a high positive number. This is called Activation.

- No Match: If they don't match, it generates a low number or zero.
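
The sliding dot product described above can be sketched in plain NumPy. This is an illustrative toy, not how a real framework implements it: the function name `convolve2d`, the 5x5 image, and the hand-written vertical-edge kernel are all assumptions made for the example. Note that, like most deep learning libraries, it does not flip the kernel, so strictly speaking it computes a cross-correlation.

```python
import numpy as np

def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1), taking a dot product at each position."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    output = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = image[i:i + kh, j:j + kw]      # pixels under the "flashlight"
            output[i, j] = np.sum(patch * kernel)  # element-wise multiply, then sum
    return output

# A 5x5 image: dark (0) on the left, bright (1) on the right, i.e. a vertical edge.
image = np.array([
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
], dtype=float)

# Hand-written vertical-edge filter (illustrative only).
kernel = np.array([
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
], dtype=float)

feature_map = convolve2d(image, kernel)
print(feature_map)
# [[0. 3. 3.]
#  [0. 3. 3.]
#  [0. 3. 3.]]
```

The flat region on the left produces 0 (no match), while every position that straddles the dark-to-bright edge produces 3 (a strong activation).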

What do filters actually look for?

This is the beauty of Deep Learning. We do not program the filters manually.

In the old days (Computer Vision 1.0), engineers painfully coded "Edge Detectors" by hand.

In Deep Learning, the network starts with random filters. Through training (Backpropagation) on millions of images, it learns on its own:

"Hey, if I set my numbers to look for curve shapes, I get the right answer more often!"

This leads to a Hierarchy of Features:

- Layer 1 (Retina): Detects primitives—vertical lines, horizontal edges, color patches.

- Layer 2 (Primary Cortex): Combines lines to form shapes—circles, corners, squares.

- Layer 3 (Visual Association): Combines shapes to form objects—eyes, wheels, ears, text.

- Last Layer: Recognizes the concept—"That's a Panda!"
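
To make the idea of learned filters concrete, here is a toy sketch in which a single 3x3 filter starts from random numbers and is adjusted by gradient descent until it reproduces the output of a known edge detector. Everything here is an assumption for illustration: the "ground truth" kernel, the random 8x8 images, the learning rate, and the hand-derived gradient, which stands in for the backpropagation a real framework would run automatically.

```python
import numpy as np

def convolve2d(image, kernel):
    """Valid (no padding), stride-1 sliding dot product."""
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

rng = np.random.default_rng(0)

# Hypothetical target: a vertical-edge filter the toy model should rediscover.
target_kernel = np.array([[-1, 0, 1]] * 3, dtype=float)
images = rng.random((20, 8, 8))
targets = [convolve2d(img, target_kernel) for img in images]

kernel = rng.normal(scale=0.1, size=(3, 3))  # start from random weights
learning_rate = 0.01

for epoch in range(100):
    for img, tgt in zip(images, targets):
        error = convolve2d(img, kernel) - tgt  # 6x6 prediction error
        # Gradient of 0.5 * sum(error**2) with respect to each kernel weight.
        grad = np.array([
            [np.sum(error * img[a:a + 6, b:b + 6]) for b in range(3)]
            for a in range(3)
        ])
        kernel -= learning_rate * grad

print(np.round(kernel, 2))  # should end up close to target_kernel
```

Nobody told the filter to look for vertical edges; it arrived there only because those weights minimize the error on the training examples.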

3. Tuning the Vision: Stride and Padding

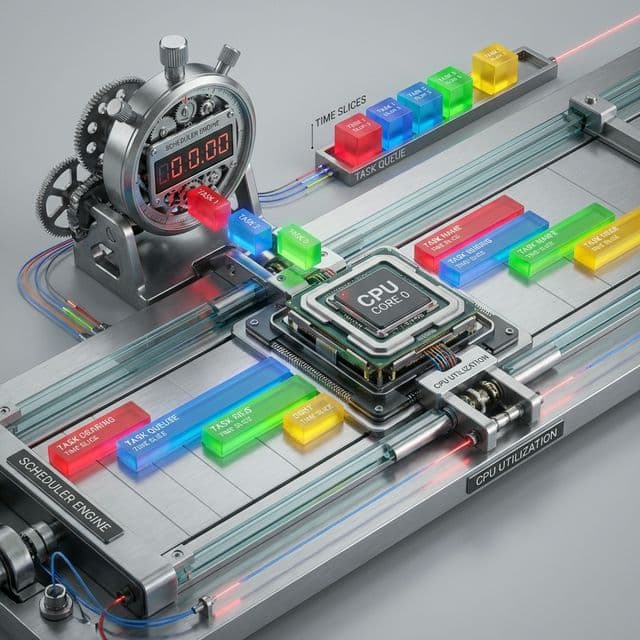

When designing a CNN architecture, we have to decide how the filter moves. These are called Hyperparameters.

Stride: The Step Size

How many pixels does the filter move at a time?

- Stride 1: It moves 1 pixel at a time. It scans the image meticulously. The output feature map is roughly the same size as the input.

- Stride 2: It jumps 2 pixels. It skips positions and halves the width and height of the output, so the feature map holds roughly a quarter of the values. It's a form of aggressive downsampling to reduce computation.

Padding: The Border

When a 3x3 filter is centered on the very corner pixel of an image, part of the filter hangs off the edge.

What should be there?

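- Valid Padding: Just drop the edges where the filter doesn't fit. The output image gets smaller than the input: a 3x3 filter trims 2 pixels from each dimension per layer. Stack enough of these layers and a 224x224 image shrinks toward 1x1, while pixels near the border get used far less often than those in the center.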

- Same Padding (Zero Padding): Add a border of zeros around the image. At stride 1, this keeps the output the same size as the input, allowing us to build very deep networks (like ResNet with 100+ layers) without the image vanishing into nothingness.
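
The effect of stride and padding on output size follows the standard formula floor((n + 2p - k) / s) + 1 along each dimension, where n is the input size, k the filter size, p the padding, and s the stride. The sketch below applies it to the 224x224 example; the function name `conv_output_size` is made up for illustration.

```python
def conv_output_size(input_size, kernel_size, stride=1, padding=0):
    """Output length along one dimension: floor((n + 2p - k) / s) + 1."""
    return (input_size + 2 * padding - kernel_size) // stride + 1

print(conv_output_size(224, 3, stride=1, padding=0))  # 222 (valid padding shrinks by 2)
print(conv_output_size(224, 3, stride=1, padding=1))  # 224 (same padding keeps the size)
print(conv_output_size(224, 3, stride=2, padding=1))  # 112 (stride 2 halves each side)

# How many unpadded 3x3 layers fit before the filter no longer fits the image?
size, layers = 224, 0
while size >= 3:
    size = conv_output_size(size, 3)
    layers += 1
print(layers, size)  # 111 2
```

With valid padding the image loses 2 pixels per layer, so a 224x224 input supports at most 111 such layers, whereas a one-pixel zero border lets the spatial size stay constant however deep the stack gets.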

4. Pooling: The Art of Forgetting

Convolutional layers produce "Feature Maps" that tell us where features are. But these maps are huge and computationally expensive.

Do we really need to know the exact pixel coordinate of the panda's eye? E.g., (x: 142, y: 355).

No. We just need to know roughly where it is using relative positioning. "The eye is in the top-left quadrant of the face."

Pooling Layers downsample the image to reduce its size and calculation load, while keeping the important features.

- Max Pooling: Takes a window (e.g., 2x2) and keeps only the largest number.

- Why Max? Because the largest number represents the strongest feature presence (highest activation). It's like saying, "I don't care about the weak noise in this 4-pixel area, just tell me the loudest signal."

- This forces the network to focus on the existence of features rather than their exact position, making the AI robust against small shifts in the input (approximate Translation Invariance).
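
A minimal NumPy sketch of 2x2 max pooling, assuming non-overlapping windows and a feature map whose sides are divisible by the window size. The function name `max_pool2d` and the sample values are invented for the example.

```python
import numpy as np

def max_pool2d(feature_map, window=2):
    """Non-overlapping max pooling; assumes height and width are divisible by `window`."""
    h, w = feature_map.shape
    blocks = feature_map.reshape(h // window, window, w // window, window)
    return blocks.max(axis=(1, 3))  # keep the largest value in each window

feature_map = np.array([
    [1, 3, 0, 0],
    [2, 9, 0, 1],
    [0, 0, 4, 2],
    [0, 5, 1, 7],
], dtype=float)

pooled = max_pool2d(feature_map)
print(pooled)
# [[9. 1.]
#  [5. 7.]]

# Move the strongest activation (9) one pixel up, inside the same 2x2 window.
shifted = feature_map.copy()
shifted[0, 1], shifted[1, 1] = 9, 3
print(np.array_equal(max_pool2d(shifted), pooled))  # True
```

The pooled output is identical after the shift, but only because the activation stayed inside its window. A shift that crosses a window boundary does change the result, which is why the invariance is approximate.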

5. Architectural Hall of Fame

Understanding the history helps us use modern tools better. Each of these architectures introduced a key concept we use today.

- LeNet-5 (1998): The grandfather. Developed by Yann LeCun for reading handwritten numbers on checks (ATM). It proved CNNs work for digit recognition but was limited by computing power.

- AlexNet (2012): The game changer. It won the ImageNet competition by a landslide, beating traditional algorithms. It showed how much GPU training could achieve at scale and popularized the ReLU (Rectified Linear Unit) activation, which does not saturate for positive inputs and so helps keep gradients from shrinking toward zero during training. It marked the beginning of the Deep Learning explosion.

- VGG-16 (2014): Known for its elegance. It showed that a stack of small 3x3 filters can cover the same area as one large filter (three 3x3 layers see a 7x7 region) while using fewer weights and adding more non-linearities. Its simple blocks made it a standard for Transfer Learning.

- ResNet (2015): The superhuman. As networks got deeper (20+ layers), they became harder to train: accuracy degraded, a problem commonly associated with vanishing gradients (signals getting lost). ResNet introduced Residual Connections (Skip Connections), which act like highways that let data bypass layers. This allowed networks to go from about 20 layers to 150+ layers deep, and its top-5 error on ImageNet dropped below the commonly cited human estimate of roughly 5%.
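
Two ideas from this list lend themselves to a quick numeric check: the weight savings from stacking 3x3 filters (VGG) and the pass-through behavior of a skip connection (ResNet). The sketch below is a simplification under stated assumptions: 64 input and output channels, biases ignored, and a toy `residual_block` helper that is not taken from any library.

```python
import numpy as np

def conv_weights(kernel_size, channels):
    """Weights in one conv layer with `channels` inputs and outputs (biases ignored)."""
    return kernel_size * kernel_size * channels * channels

def stacked_receptive_field(kernel_size, num_layers):
    """Receptive field of `num_layers` stacked stride-1 convolutions."""
    return num_layers * (kernel_size - 1) + 1

channels = 64  # illustrative channel count
print(stacked_receptive_field(3, 3))  # 7      -> three 3x3 layers see a 7x7 region
print(3 * conv_weights(3, channels))  # 110592 -> weights in three 3x3 layers
print(conv_weights(7, channels))      # 200704 -> weights in one 7x7 layer

def residual_block(x, layer):
    """Skip connection: add the input back onto the layer's output."""
    return layer(x) + x

def dead_layer(v):
    """Stand-in for a layer whose output has collapsed to zero."""
    return np.zeros_like(v)

x = np.array([1.0, -2.0, 3.0])
y = residual_block(x, dead_layer)
print(np.array_equal(y, x))  # True: the input still gets through unchanged
```

The stacked 3x3 layers cover the same 7x7 region with roughly 45% fewer weights, and the skip connection guarantees that a layer contributing nothing cannot block the signal passing through it.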

6. Beyond Classification: The Modern Era

CNNs have revolutionized industries beyond just tagging Facebook photos.

- Medical Diagnosis (Segmentation): Identifying exactly which pixels in an X-ray correspond to a tumor. U-Net is a popular CNN architecture here that outputs a mask instead of a label.

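- Self-Driving Cars (Object Detection): Autonomous-driving systems, such as those from Tesla and Waymo, use CNN-based detectors (the YOLO and R-CNN families are well-known examples) to draw bounding boxes around pedestrians, cars, and traffic signs in real time, typically at tens of frames per second. Speed is critical here.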

- Generative AI (GANs): Before Stable Diffusion and Transformers took over, Convolutional GANs were used to create realistic human faces that never existed.

7. Conclusion

The Convolutional Neural Network is one of the most successful algorithms in the history of Artificial Intelligence.

By mimicking how our own visual system works, looking at local regions and integrating them globally, it made visual perception practical for machines.

While the Vision Transformer (ViT) is the new challenger, proposing that "Attention is All You Need" even for images, the principles of CNNs remain fundamental. CNNs are often more efficient on smaller datasets and edge devices (like smartphones and IoT).

CNNs gave computers the gift of sight, transforming them from blind calculators into intelligent observers that can navigate our visual world.