Supabase Storage: Fixing 403 Forbidden Upload Errors

Table RLS is set, but file upload fails with 403? You forgot Storage RLS. Learn how to configure policies for `storage.objects` buckets.

Table RLS is set, but file upload fails with 403? You forgot Storage RLS. Learn how to configure policies for `storage.objects` buckets.

A comprehensive deep dive into client-side storage. From Cookies to IndexedDB and the Cache API. We explore security best practices for JWT storage (XSS vs CSRF), performance implications of synchronous APIs, and how to build offline-first applications using Service Workers.

You changed the code, saved it, but the browser does nothing. Tired of hitting F5 a million times? We dive into how HMR (Hot Module Replacement) works, why it breaks (circular dependencies, case sensitivity, etc.), and how to fix it so you can regain your development speed.

HDD is just a giant field of 0s and 1s. Magic that creates 'File' and 'Folder'.

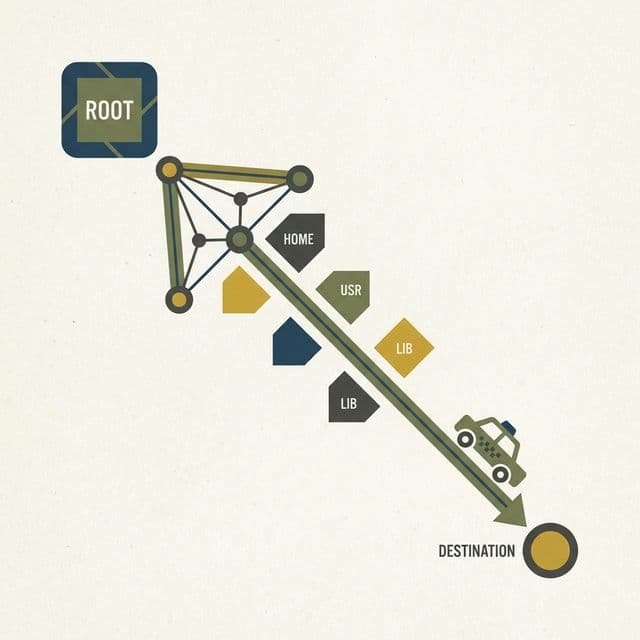

Troubleshooting absolute path import configuration issues in TypeScript/JavaScript projects. Exploring the 'Map vs Taxi Driver' analogy, CommonJS vs ESM history, and Monorepo setup.

I was implementing a user profile picture change feature.

In Flutter, I called supabase.storage.from('avatars').upload().

But a glaring red error filled the console.

StorageException: new row violates row-level security policy for table "objects"

StatusCode: 403

"Wait, I added all INSERT policies for the profiles table. Why?"

I didn't know that Database Table permissions and Storage permissions are separate entities.

Supabase tutorials often focus heavily on Table RLS and gloss over Storage RLS.

My misconception was: "If I'm logged in (Authenticated), shouldn't everything work?"

Auth was clear, Table RLS was set, so I assumed Storage would just work.

But looking closer at the error, it said table "objects".

"I never created a table named objects?"

It turns out Supabase Storage internally manages file metadata in a table named objects within the storage schema.

When a user uploads a file, an INSERT operation effectively happens on the storage.objects table.

That's where the RLS violation was happening.

It clicked for me once I thought of it as a "Department Store vs. Locker Room" analogy.

"Ah, having rights to the profiles table doesn't automatically grant rights to the avatars bucket. I need a separate Locker Room Ticket."

Finding the 'Policies' tab in the Storage menu on Supabase Dashboard can be tricky. Usually, it's clearest to go to the SQL Editor and run queries directly.

The easiest way is to create a Public Bucket.

A Public Bucket lets anyone in the world download (SELECT) a file through its public URL, with no policy check.

For profile pictures, Public is convenient.

However, upload (INSERT) is still blocked. Even with a Public Bucket, you can't let just anyone upload a 1TB file.

You need to add a policy to the storage.objects table.

Crucially, you must specify the bucket_id. Otherwise, you might grant access to other buckets accidentally.

    -- Allow upload only to own folder in avatars bucket
    CREATE POLICY "Allow Upload to own folder"
    ON storage.objects FOR INSERT
    TO authenticated
    WITH CHECK (
      bucket_id = 'avatars' AND
      (storage.foldername(name))[1] = auth.uid()::text
    );

What this means:

- bucket_id = 'avatars': Must be the avatars bucket.
- (storage.foldername(name))[1]: The first folder in the file path (uid/filename.png).
- auth.uid(): That folder must match my logged-in ID.

In short, "You can only put files in the folder named after your ID." Clean and simple.
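To make the three conditions concrete, here is a minimal Dart sketch that mirrors the policy on the client. It is illustrative only: the real check runs inside Postgres, and `folderName` and `wouldPassUploadPolicy` are hypothetical helper names. It assumes `storage.foldername` returns the path segments that come before the file name.

```dart
/// Client-side mirror of storage.foldername (assumption: it returns every
/// path segment except the trailing file name).
List<String> folderName(String objectName) {
  final parts = objectName.split('/');
  // Drop the last segment (the file name), keeping only the folders.
  return parts.sublist(0, parts.length - 1);
}

/// Hypothetical pre-check that repeats the INSERT policy's conditions.
bool wouldPassUploadPolicy({
  required String bucketId,
  required String objectName,
  required String uid,
}) {
  final folders = folderName(objectName);
  // SQL arrays are 1-based, so `[1]` in the policy is `first` here.
  return bucketId == 'avatars' && folders.isNotEmpty && folders.first == uid;
}
```

A path such as `profile.png` has no folder at all, so it fails the check even for a logged-in user. That is the same reason an upload to the bucket root returns 403.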

To change or remove photos, you need UPDATE and DELETE policies too.

    CREATE POLICY "Allow Update own file"
    ON storage.objects FOR UPDATE
    TO authenticated
    USING (
      bucket_id = 'avatars' AND
      (storage.foldername(name))[1] = auth.uid()::text
    );

    CREATE POLICY "Allow Delete own file"
    ON storage.objects FOR DELETE
    TO authenticated
    USING (
      bucket_id = 'avatars' AND
      (storage.foldername(name))[1] = auth.uid()::text
    );

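Note: UPDATE vs INSERT (Upsert)

Even if you use upsert: true in the Supabase JS client, it checks INSERT permission if the file doesn't exist, and UPDATE if it does. So for safe upserting, you need both. The Supabase client reference also lists SELECT on storage.objects as required for upserts, so add a SELECT policy if upserts still fail with 403.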

The core of Storage RLS is Path design.

If you mindlessly upload files to the root (avatars/my-face.png), permission management becomes a nightmare.

Recommended Structure:

- {bucket}/{user_id}/{filename}: Standard and secure.
- {bucket}/{post_id}/{filename}: For post attachments.

Bad Example:

- {bucket}/{uuid-filename}: Since the filename doesn't indicate ownership, you'd need a complex policy (like an EXISTS subquery) to check the DB. This kills performance.

Matching auth.uid() with the file path directly in the SQL policy is the fastest and cheapest method.
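One way to keep every upload inside the recommended structure is to build the object path in a single place. The helper below is a sketch, and `userObjectPath` is a hypothetical name. It assumes the user ID is a single path segment, as a Supabase UUID would be.

```dart
/// Builds `{user_id}/{filename}` for use as the object path inside a bucket.
String userObjectPath(String userId, String fileName) {
  if (userId.isEmpty || userId.contains('/')) {
    throw ArgumentError.value(userId, 'userId', 'must be a single path segment');
  }
  // Keep only the last segment so a name like "a/b.png" cannot add folders.
  final baseName = fileName.split('/').last;
  if (baseName.isEmpty) {
    throw ArgumentError.value(fileName, 'fileName', 'must not be empty');
  }
  return '$userId/$baseName';
}
```

With this in place, the first folder of every path is the user ID, which is exactly what the policy compares against auth.uid().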

Even if RLS is perfect, the upload still fails when the OS blocks file access, and it is easy to mistake that for a Supabase error.

Android 10+ introduced Scoped Storage.

Under Scoped Storage, the traditional READ_EXTERNAL_STORAGE permission no longer lets you read arbitrary files.

Use modern libraries like image_picker.

It launches the System Photo Picker via Intent. Since the user explicitly picks a file, the OS grants temporary read access to that specific file without needing a blanket Permission Request.

Don't ask for MANAGE_EXTERNAL_STORAGE unless you are building a File Manager app. Google Play will likely reject the app.

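A user uploads a 4K raw photo as an avatar. If you send this to Supabase as-is, you waste storage and bandwidth on a file far larger than an avatar needs.

Always compress on the client.

Use flutter_image_compress. It can reduce a 10MB image to roughly 200KB.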

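    import 'package:flutter_image_compress/flutter_image_compress.dart';

    // Compress before upload: lower the quality and scale the image down.
    final compressed = await FlutterImageCompress.compressAndGetFile(
      file.path,
      targetPath,
      quality: 75,
      minWidth: 800,
    );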

Supabase Storage RLS protects "Access", not "Common Sense". Client-side compression is mandatory etiquette.

    import 'dart:io';
    import 'package:supabase_flutter/supabase_flutter.dart';

    // Assumes: final supabase = Supabase.instance.client;
    final userId = supabase.auth.currentUser!.id;
    final file = File('path/to/image.png');

    try {
      // Including userId in the path is key!
      await supabase.storage.from('avatars').upload(
        '$userId/profile.png', // path
        file,
        fileOptions: const FileOptions(upsert: true), // overwrite
      );
    } catch (e) {
      print('Upload failed: $e'); // 403 means Policy issue
    }

Now uploads work without the 403 error.

"I changed my profile pic, but I still see the old one." Supabase Storage is served via CDN (Cloudflare). If the URL looks the same, the CDN sends the cached (old) image.

Fix 1: URL Versioning (Recommended)

Append a query param: image.png?v=123123.

This forces the browser to treat it as a new resource.
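A minimal sketch of URL versioning in Dart. `withVersion` is a hypothetical helper, and what you use as the version (an updated_at timestamp, a counter stored in the profile row) is an assumption left to your app.

```dart
/// Returns [url] with a `v` query parameter set to [version],
/// keeping any query parameters that were already present.
String withVersion(String url, int version) {
  final uri = Uri.parse(url);
  return uri.replace(
    queryParameters: {...uri.queryParameters, 'v': '$version'},
  ).toString();
}
```

Bump the version whenever the avatar changes and pass the result to your image widget. The file on the server stays the same; only the cache key changes.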

Fix 2: cacheControl

Set a short cache duration during upload.

    await supabase.storage.from('avatars').upload(
      path, file,
      fileOptions: const FileOptions(
        upsert: true,
        cacheControl: '3600', // 1 hour
      ),
    );

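The Supabase client documents 3600 seconds as the default cacheControl, which is the value the example sets explicitly. For dynamic content like avatars, keep it short, and lower it further if stale images remain a problem.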

If your bucket is Private, what do you put in the <img> src?

Use createSignedUrl.

    // Generate a URL valid for 60 seconds
    final url = await supabase.storage
        .from('contracts')
        .createSignedUrl('secret-doc.pdf', 60);

This is different from RLS. The signed URL works like a "Bearer Token" for file access: anyone holding the link can read the file until it expires. Great for sharing files in a chat room securely.
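Because a signed URL stops working once it expires, the client has to know when to request a new one. The class below is a sketch of that bookkeeping, not part of the Supabase SDK: `SignedUrl`, `needsRefresh`, and the 5-second margin are all assumptions made for illustration.

```dart
/// Pairs a signed URL with the moment it stops being valid.
class SignedUrl {
  SignedUrl(this.url, DateTime issuedAt, int expiresInSeconds)
      : expiresAt = issuedAt.add(Duration(seconds: expiresInSeconds));

  final String url;
  final DateTime expiresAt;

  /// True when the link has expired or will expire within [margin].
  bool needsRefresh(
    DateTime now, {
    Duration margin = const Duration(seconds: 5),
  }) =>
      !now.isBefore(expiresAt.subtract(margin));
}
```

Store the value returned by createSignedUrl together with the time you requested it, and call createSignedUrl again when `needsRefresh` returns true.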

Which strategy fits your feature?

| Strategy | Access | Use Case | Pros | Cons |
|---|---|---|---|---|
| Public Bucket | Everyone | Avatars, Blog Covers, Assets | Fastest, Easiest | No Security, Scraping risk |
| Private Bucket (RLS) | Auth User (JWT) | Personal Docs, Receipts | Integrated Auth | Complex download logic |
| Signed URL | Link Holder (Temp) | Chat Attachment, Shared File | Strict Control, Expiry | Overhead on generation |

Start with a Public Bucket for generic UI assets. Use Private buckets only for PII (Personally Identifiable Information). Trying to secure everything usually leads to broken images and complexity hell.

Putting the user_id in the file path and checking that the folder name equals auth.uid() is the easiest and fastest approach.