Bloom Filter: Probabilistic Data Structures for Big Data

Definitely No, Maybe Yes. How to check membership in massive datasets with minimal memory using Bit Arrays and Hash Functions. False Positives explained.

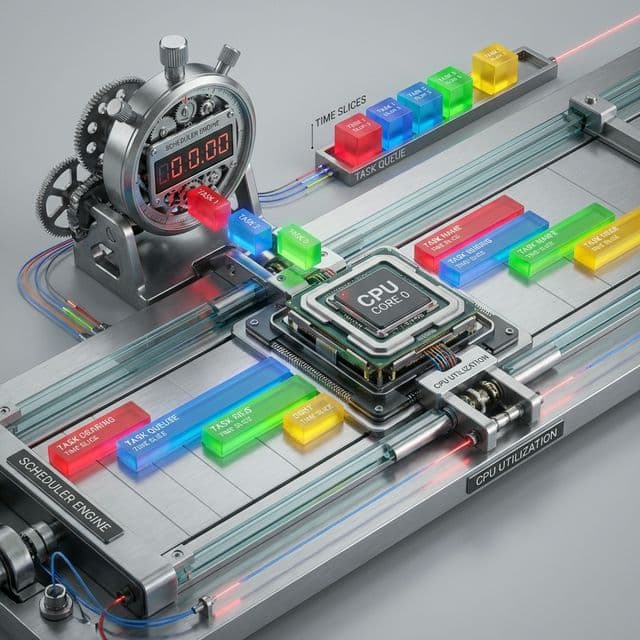

The biggest lesson Bloom Filters taught me: perfect is the enemy of good. In engineering, you don't always need 100% accuracy when 99% buys you a fraction of the memory and far faster lookups.

When I was starting out, I thought all data structures had to be exact. Bloom Filters shattered that illusion. They taught me to ask: "What if being wrong 1% of the time is actually the right choice?"
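
To make that trade-off concrete, here is a minimal Python sketch rather than a production implementation. The class name `BloomFilter`, the method `might_contain`, and the choice of SHA-256 with double hashing are illustrative assumptions, not something taken from a particular library. The sizing uses the standard formulas m = -n ln p / (ln 2)^2 and k = (m / n) ln 2, which work out to roughly 9.6 bits per item and 7 hash functions for a 1% false-positive target.

```python
import hashlib
import math


class BloomFilter:
    """Minimal Bloom filter sketch sized for a target false-positive rate."""

    def __init__(self, expected_items: int, fp_rate: float = 0.01):
        # Standard sizing: m = -n * ln(p) / (ln 2)^2 bits, k = (m / n) * ln 2 hashes.
        self.num_bits = math.ceil(
            -expected_items * math.log(fp_rate) / math.log(2) ** 2
        )
        self.num_hashes = max(1, round(self.num_bits / expected_items * math.log(2)))
        self.bits = bytearray((self.num_bits + 7) // 8)

    def _positions(self, item: str):
        # Assumption: derive k bit positions from two 64-bit halves of one
        # SHA-256 digest (double hashing) instead of k independent hashes.
        digest = hashlib.sha256(item.encode("utf-8")).digest()
        h1 = int.from_bytes(digest[:8], "big")
        h2 = int.from_bytes(digest[8:16], "big") | 1  # force an odd step
        for i in range(self.num_hashes):
            yield (h1 + i * h2) % self.num_bits

    def add(self, item: str) -> None:
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, item: str) -> bool:
        # False means "definitely not present"; True means "maybe present".
        return all(
            self.bits[pos // 8] & (1 << (pos % 8)) for pos in self._positions(item)
        )


bf = BloomFilter(expected_items=1000)
bf.add("alice@example.com")
print(bf.might_contain("alice@example.com"))  # True: added items are never reported absent
```

A `False` from `might_contain` is always trustworthy, while a `True` is wrong about 1% of the time once the filter holds the expected number of items. That asymmetry is what makes the filter useful as a cheap check in front of an expensive lookup.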

This philosophy extends beyond data structures. It's about system design, architecture, career choices. Sometimes "probably correct" is more valuable than "definitely correct."

Bloom Filters are imperfect. But in a world drowning in data, an approximate answer that scales often beats an exact one that doesn't. That's why systems such as Chrome, Bitcoin, Cassandra, Medium, and Reddit have all relied on a data structure that admits it might lie to you.

That honesty—that willingness to say "I don't know for sure, but I'm pretty confident"—is strangely beautiful. It's a reminder that even in engineering, uncertainty can be a feature, not a bug.